ML in Cloud Computing: Solving Business Challenges

The cloud shifts the business paradigm from mere data storage to actionable data utilization. ML algorithms can dynamically automate systems, improve financial forecasting accuracy, and provide real-time customer personalization.

The process is quite simple. Cloud computing supplies the necessary resources and infrastructure for machine learning. In return, machine learning provides the advanced business process automation and decision-making that help organizations improve operations and achieve better results.

Let me elaborate on it.

MLaaS in the Cloud

Machine Learning as a Service (MLaaS) might be the most valuable form of AI democratization in business history. The essence of MLaaS is training algorithms on large datasets, automating pattern recognition and prediction, and delivering the results at scale in the cloud. Instead of building extensive in-house infrastructure and recruiting specialized teams, companies can use cloud-based ML services and platforms to accomplish far more in far less time.

Comprehensive MLaaS offerings from cloud providers include pre-trained models, automated machine learning tools, and managed infrastructure. These offerings address barriers to ML adoption in the following ways:

- Infrastructure requirements: Organizations no longer need to purchase costly hardware or manage complex software setups. MLaaS provides immediate access to high-performance, scalable cloud computing resources that adapt to user demand.

- Technical gaps: Complex ML models can now be implemented by organizations with little to no data science expertise thanks to MLaaS. Drag-and-drop systems and automated feature creation simplify the technical side of ML projects.

- Value delivery time: MLaaS provides managed services and ready components, significantly reducing the time needed to execute a project. Deliverables that once required months of work can be put together in only weeks or even days.

- Predictable cost: Pay-per-use pricing lets organizations test working ML systems with minimal upfront investment. They can experiment inexpensively and scale up only the services that have delivered verified results.

The democratizing effect of MLaaS does not only apply to large organizations. New and small companies, even individual software developers, can access innovative ML systems and services. This powerful potential stimulates cross-industry and cross-organizational innovation.

Managed Platforms vs. Custom Solutions

When it comes to MLaaS, organizations must decide between managed platforms and custom ML pipelines. Managed platforms, such as Amazon SageMaker and Google Vertex AI, provide integrated tools for all stages of the machine learning project lifecycle. They deliver pre-built environments, auto-scaling infrastructure, and experiment tracking, all of which reduce operational overhead and accelerate time-to-value.

For those organizations with specific requirements that managed platforms do not fully address, custom ML pipelines can provide the needed flexibility and control. Custom solutions are generally developed using combinations of open-source tools such as MLflow, Airflow, or Kubeflow alongside cloud-native services. This decision is guided by your organization's technical expertise, regulatory constraints, and long-term strategy.

Practical Applications Across Industries

The true value of MLaaS starts showing itself with its use cases across various business functions:

- Predictive analytics for business intelligence: Organizations leverage cloud-based ML services to study historical data and predict future trends. This helps companies anticipate market changes, fine-tune inventory levels, and improve service delivery to consumers.

- Automation of IT operations (AIOps): AI-driven IT operations use ML to automate cloud infrastructure management, troubleshoot and resolve problems, optimize resources, and keep systems running with minimal human intervention.

- Natural language processing and chatbots: Organizations use various NLP services to automate customer assistance, evaluate client feedback, and analyze vast amounts of unstructured text data to derive business intelligence.

- Image and video recognition: Computer vision services automate the analysis of images and videos. Use cases include quality assurance in manufacturing, security surveillance, and other situations where manual processing of visual content is impractical.

How Do Cloud Solutions for Machine Learning Work?

At the hardware level, these cloud solutions operate by installing graphics cards (GPUs) into the host servers. The power of these GPUs is then virtualized (vGPU) and distributed among different users. Key parameters that define a GPU's capability include:

- Performance: Measured in teraflops (TFLOPS), this indicates how many trillions of floating-point operations the GPU can execute per second.

- Video RAM (VRAM): This is the GPU's dedicated memory. For ML workloads, it holds model weights, activations, and batches of training data.

- Memory bandwidth: This measures the rate at which data moves between VRAM and the GPU's compute units, typically in gigabytes or terabytes per second.
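To make these three parameters concrete, here is a minimal sketch of how they bound a workload. The `GpuSpec` class, the function names, and every number passed to them are hypothetical illustrations, not vendor specifications. The timing estimate is a simple roofline-style lower bound that ignores activations, caching, and kernel overhead.

```python
from dataclasses import dataclass


@dataclass
class GpuSpec:
    """Headline GPU parameters (any values used with this class are made up)."""
    peak_tflops: float      # trillions of floating-point operations per second
    vram_gb: float          # dedicated memory capacity, in gigabytes
    bandwidth_tb_s: float   # memory bandwidth, in terabytes per second


def weights_fit_in_vram(gpu: GpuSpec, n_params: float, bytes_per_param: int = 2) -> bool:
    """Check whether the model weights alone fit in VRAM (activations ignored)."""
    weights_gb = n_params * bytes_per_param / 1e9
    return weights_gb <= gpu.vram_gb


def step_time_lower_bound(gpu: GpuSpec, flops: float, bytes_moved: float) -> float:
    """Best-case seconds for one step: the slower of compute and memory traffic."""
    compute_s = flops / (gpu.peak_tflops * 1e12)
    memory_s = bytes_moved / (gpu.bandwidth_tb_s * 1e12)
    return max(compute_s, memory_s)


def bottleneck(gpu: GpuSpec, flops: float, bytes_moved: float) -> str:
    """Report which resource limits the step under this simplified model."""
    compute_s = flops / (gpu.peak_tflops * 1e12)
    memory_s = bytes_moved / (gpu.bandwidth_tb_s * 1e12)
    return "compute" if compute_s >= memory_s else "memory"
```

For example, with a hypothetical `GpuSpec(peak_tflops=100.0, vram_gb=24.0, bandwidth_tb_s=1.0)`, a 7-billion-parameter model stored at 2 bytes per parameter needs about 14 GB for its weights and fits, while a 13-billion-parameter model (about 26 GB) does not.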

A critical component of machine learning is the processing and storage of data, as AI development relies on constant data manipulation and the creation of new information from existing datasets. The cloud offers tools that support data analysis, pre-processing, and standardization so that large datasets can be handled quickly. It can also integrate with tools such as Tableau or Apache-based frameworks to host big data processing.

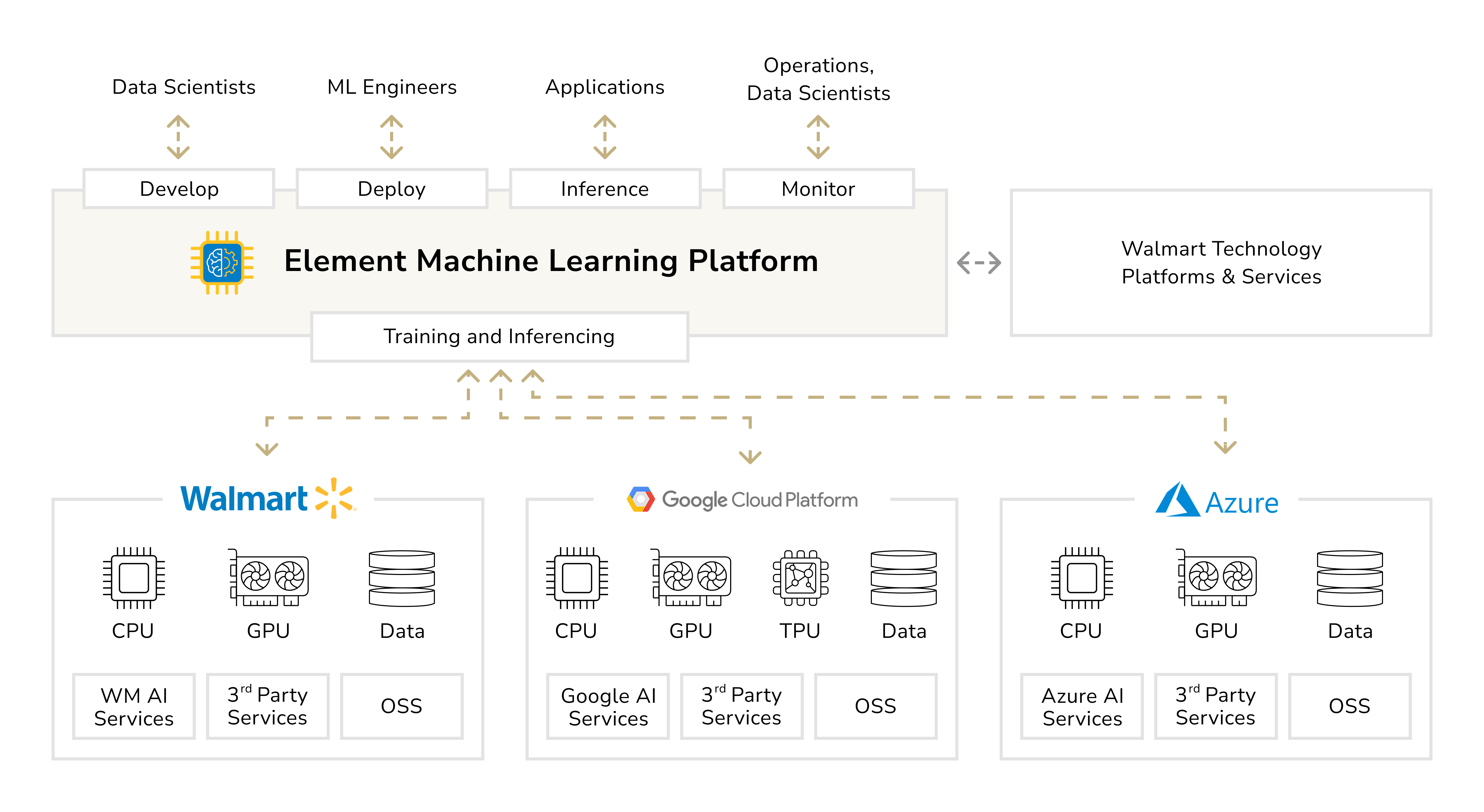

In a multicloud strategy, the capabilities of cloud ML can reach new heights through workload distribution. For instance, Walmart's machine learning platform, Element, is distributed across various operational and storage layers (including the company's own regional data center servers), giving it access to hundreds of GPUs. This enables the company to perform real-time data collection, market analysis, inventory control, and online product search personalization.

Competitive Advantage Through AI/ML-Powered Analytics

Leveraging AI and machine learning in cloud services is a key strategic priority for firms in telecom, e-commerce, and fintech to stay ahead of their competitors. As an example within the telecom industry, a large proportion of companies are investing in AI, with 90% of telecoms in the US piloting or implementing AI solutions. There is a perception within the industry that the investment is worthwhile, with 53% of telecom executives stating AI provides a competitive edge. Telecoms are utilizing machine learning analytics to

- enhance and automate network performance

- personalize customer experiences

- streamline service delivery

- improve customer satisfaction and retention.

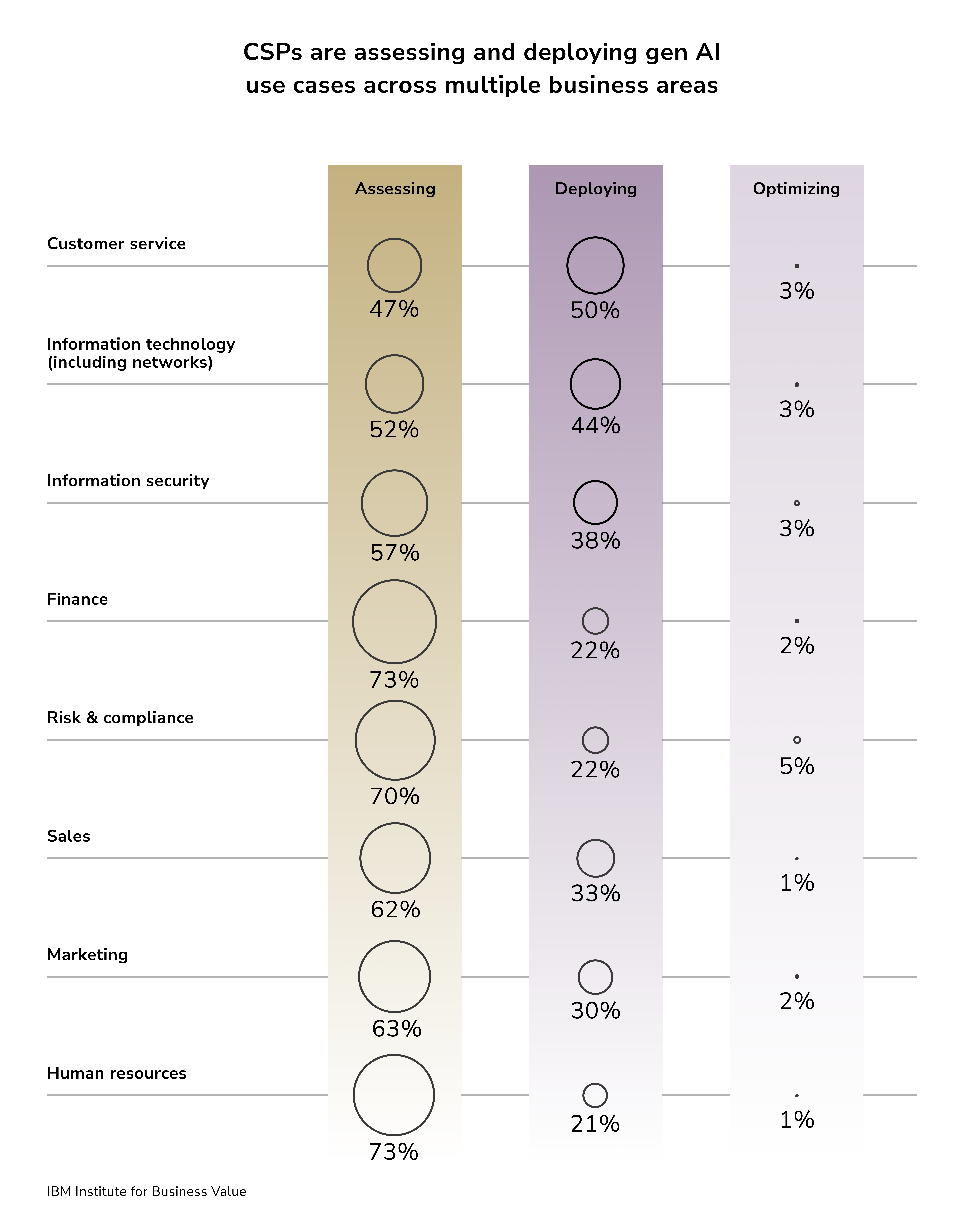

As per the TM Forum report, communications service providers (CSPs) identified over 100 individual use cases for AI in the future of the industry.

Artificial intelligence and machine learning are becoming commonplace for personalization and operational efficiency in e-commerce. In fact, around 93% of e-commerce companies identify AI technologies such as intelligent chatbots and recommendation engines as competitive tools for customer experience and conversion improvements. Personalized AI retail technology improves conversion rates and customer loyalty, allowing e-commerce companies to outpace competitors.

Artificial intelligence technologies in the cloud are transforming fraud detection, risk assessment, and management in the financial technology sector. Compared to older technologies, advanced machine learning techniques in cloud-enabled fintech applications identify fraud and credit risk far faster. Meanwhile, integrated AI chatbots and advisory tools help optimize digital customer onboarding. AI also improves operational efficiency in areas such as automated loan underwriting and compliance checks, helping fintech companies increase their operational scale.

Leveraging ML and DL for Economic Value in the Cloud Era

The integration of AI with cloud technologies empowers companies with on-demand, almost boundless computing power, translating into massive economic output and productivity gains. For example, the economic impact of cloud technology in 2023 is estimated to be over $1 trillion, with $98 billion of that attributable to AI. Cloud technologies and AI together are projected to add $13.5 trillion to global GDP by 2030. These benefits are the result of AI’s ability to

- automate operational processes at lower costs

- predict and reduce human error

- speed up processes

- reduce operational resource consumption

- reveal custom insights contained in massive datasets

- improve strategic analyses and responsiveness to market changes.

Moreover, the integration of ML and DL in the cloud empowers a cloud-driven workforce to perform higher-value tasks. Meanwhile, repetitive and lower-value functions are automated and handled by the technology. The ability of AI in the cloud to perform advanced analysis enables even small enterprises to leverage ML and DL capabilities, thus democratizing the market.

The synergy of cloud and AI technologies ensures economic value through several strategic advantages:

- Lowering costs through automation: ML models can replace and automate many complex and high-level decision processes. They can thus significantly lower operational costs while enhancing the precision of outcomes, transforming business operations.

- Increasing revenues through ML capabilities: Advanced frameworks offered by cloud services and ML-hosted environments enable organizations to derive real economic value from big data customer analytics.

- Continuous risk control: ML-based fraud detection, risk assessment, and anomaly detection cover a wide range of threats. Because these systems scale with the cloud, risk controls can keep operating across large volumes of operational data.

- Accelerated innovation: The flexibility of ML and cloud environments enables rapid development of, and iteration on, prediction models.

- Market expansion: ML cloud services make it easier for organizations to grow and expand economically by entering new geographical locations and customer or market segments.

Cost Control Strategies for ML Workloads

There are multiple ways to make ML workloads more budget-friendly while maintaining a desired workload performance.

- Spot instances for training jobs: Using spot instances for interruptible training jobs can cut compute costs by up to 90% compared with on-demand pricing, according to AWS. This approach works particularly well for batch processing and model training that can tolerate interruptions.

- Auto-scaling for model endpoints: Predictive scaling adjusts computational resources in real-time for model endpoints, ensuring you only pay for resources when they're actively serving predictions.

- Automatic shutdown policies: Idle compute resources are a waste of money. Set up automatic shutdown of idle compute resources, especially during non-business hours.

- Performance monitoring and optimization: Tracking utilization and performance metrics is a best practice for spotting over-provisioned resources and eliminating waste within a system.
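The arithmetic behind the first and third strategies can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the hourly rate, the discount, the rework overhead caused by interruptions, and the idle limits are all hypothetical values chosen for the example, not published prices or provider defaults.

```python
def training_cost(hours: float, hourly_rate: float,
                  discount: float = 0.0, rework_fraction: float = 0.0) -> float:
    """Estimate the cost of a training job.

    discount: fraction taken off the on-demand rate (0.0 means on-demand).
    rework_fraction: extra compute repeated after interruptions (0.2 = 20%).
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    if rework_fraction < 0.0:
        raise ValueError("rework_fraction must be non-negative")
    effective_hours = hours * (1.0 + rework_fraction)
    return effective_hours * hourly_rate * (1.0 - discount)


def should_shut_down(idle_minutes: float, hour_of_day: int,
                     idle_limit_minutes: float = 30.0,
                     off_hours_limit_minutes: float = 5.0) -> bool:
    """Idle-shutdown rule with a stricter limit outside business hours (9-18)."""
    in_business_hours = 9 <= hour_of_day < 18
    limit = idle_limit_minutes if in_business_hours else off_hours_limit_minutes
    return idle_minutes >= limit


# Hypothetical job: 100 GPU-hours at 3.00 per hour.
on_demand = training_cost(100, 3.0)
spot = training_cost(100, 3.0, discount=0.7, rework_fraction=0.2)
print(f"on-demand: {on_demand:.2f}, spot: {spot:.2f}")
```

The `rework_fraction` term matters: an interrupted job repeats some work, so the realized saving is smaller than the headline discount unless the job checkpoints frequently.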

The Data-Driven Decision Framework

The path to achieving a competitive advantage begins with a more systematic approach to using corporate data. And while data volume is valuable, the game-changer is the ability to convert that data into operational and strategic recommendations.

Firms with the greatest success have created self-sustaining feedback loops, where machine learning models continuously learn from new data, thereby improving their accuracy and relevance over time. And there's no need to worry about the models becoming sluggish, as the cloud will effortlessly provide the computing power and speed needed to keep the models performant.

Edge AI and Hybrid Cloud: The Future of Cloud Computing

Looking ahead, one of the most significant trends in the future of cloud computing is the integration of edge AI and hybrid cloud. Edge AI refers to running AI/ML algorithms on edge devices (or nearby servers) rather than solely in central cloud data centers. Processing data closer to the source, such as at cell towers, on the factory floor, and within IoT devices, decreases latency and minimizes bandwidth requirements.

Edge AI will allow for ultra-rapid responses by processing real-time data at the edge and sending only the critical insights to the cloud. A common approach is to use the cloud for heavy model training and big-picture analytics, then deploy trained models to edge devices for on-site inference. This complementary model yields a powerful combo: Edge AI handles instant decisions on the frontlines, while cloud AI provides global learning and coordination.

For example, autonomous vehicles (edge devices) can process sensor data in milliseconds to avoid obstacles, while their AI model is continuously updated from a cloud-trained master model. Edge AI is already emerging in telecom (e.g., AI in 5G network hubs for routing), in manufacturing (AI on factory sensors for safety), and in consumer tech (smart home gadgets). Industry experts see edge and cloud evolving in tandem, creating a "cloud-edge collaboration" ecosystem wherein intelligence is distributed from core to edge.
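The split between cloud training and edge inference can be sketched in a few lines. This is a deliberately simplified stand-in: the "model" is just a threshold derived from historical readings, and the function names and payload fields are hypothetical. The point is the division of labor, where the cloud computes the parameter from pooled data and the edge applies it locally and uploads only the critical readings.

```python
from statistics import mean, pstdev
from typing import Dict, List


def cloud_compute_threshold(history: List[float], k: float = 3.0) -> float:
    """Cloud side: derive an alert threshold from pooled historical readings."""
    return mean(history) + k * pstdev(history)


def edge_process(readings: List[float], threshold: float) -> Dict[str, object]:
    """Edge side: decide locally, then build the small payload sent upstream."""
    critical = [r for r in readings if r > threshold]
    return {
        "samples_seen": len(readings),     # only a count, not the raw data
        "critical_readings": critical,     # the few values worth uploading
    }


# The cloud "trains" on history; the edge device receives only the threshold.
threshold = cloud_compute_threshold([20.0, 21.0, 19.0, 20.5, 19.5])
payload = edge_process([20.1, 19.8, 35.0, 20.3], threshold)
print(payload)
```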

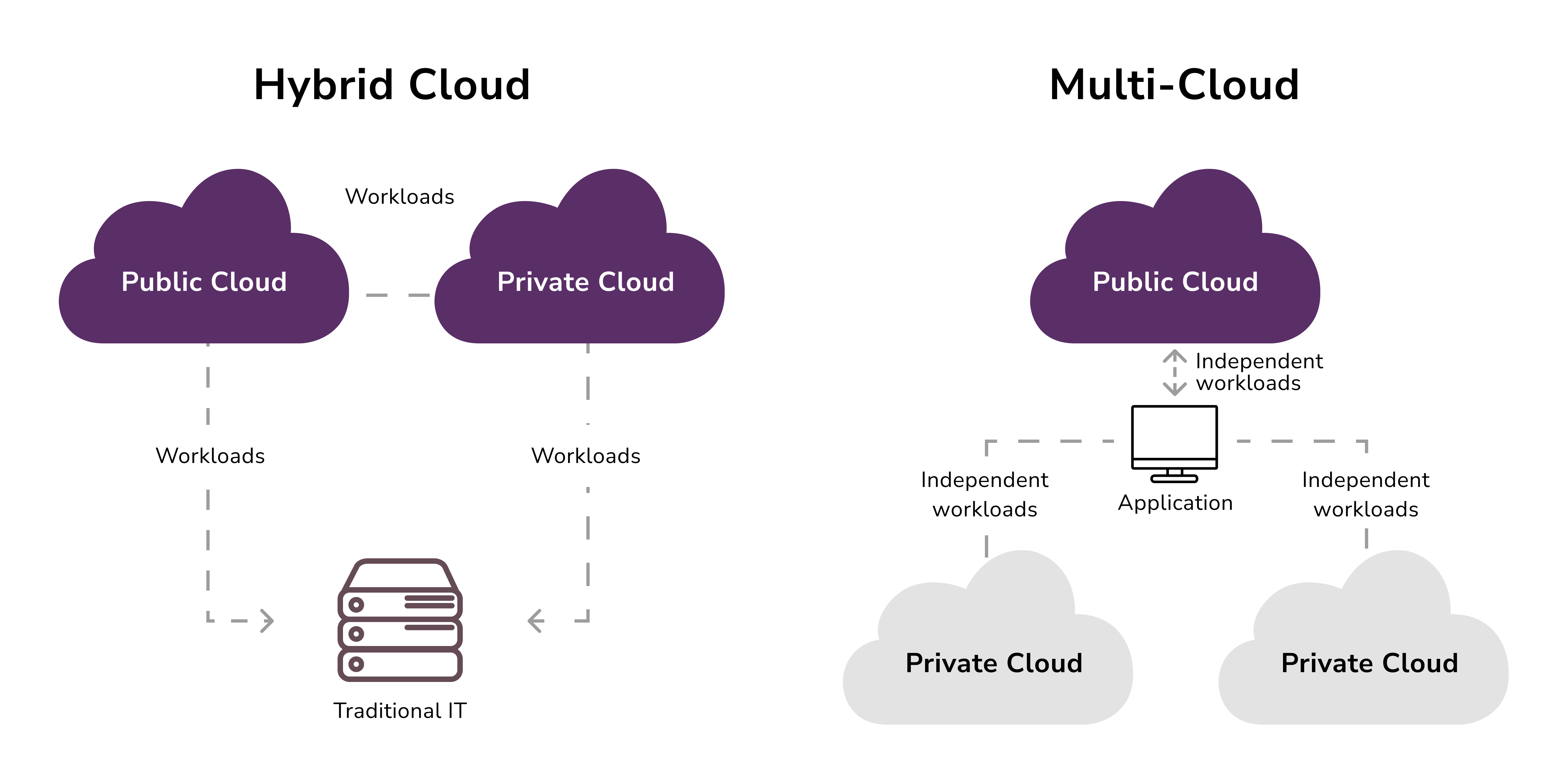

Simultaneously, organizations are seeking hybrid cloud models that combine public cloud, private cloud, and on-premises assets to capitalize on each infrastructure's advantages. Hybrid and multi-cloud strategies provide flexibility: organizations can keep sensitive workloads and data on private infrastructure or on-premises systems, then leverage the public cloud for compute resources. Cost optimization, business continuity, and avoiding single-vendor lock-in are key drivers behind the adoption of hybrid and multi-cloud strategies.

In the case of AI/ML, hybrid systems make it possible to store customer data in a private cloud for compliance purposes while using a public cloud ML service on anonymized data for model training. This approach preserves compliance while driving innovation. In the future, more cloud providers will deliver capabilities for seamless integration of on-prem resources and the cloud, enhancing the flow of AI and data to where they are needed most.
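A minimal sketch of the preparation step, assuming the private side strips direct identifiers and replaces the customer ID with a keyed hash before records leave for the public cloud. The field names and the `prepare_for_public_cloud` helper are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization, so a real compliance process would need additional review of the remaining fields.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical list of fields that must never leave the private environment.
SENSITIVE_FIELDS = {"name", "email", "phone"}


def pseudonymize_id(customer_id: str, secret_key: bytes) -> str:
    """Replace an ID with a keyed hash; the key stays in the private cloud."""
    return hmac.new(secret_key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_public_cloud(record: Dict[str, object], secret_key: bytes) -> Dict[str, object]:
    """Drop direct identifiers and swap the customer ID for a stable token."""
    cleaned = {
        key: value for key, value in record.items()
        if key not in SENSITIVE_FIELDS and key != "customer_id"
    }
    cleaned["customer_token"] = pseudonymize_id(str(record["customer_id"]), secret_key)
    return cleaned


record = {"customer_id": 1042, "name": "Jane Doe", "email": "jane@example.com",
          "monthly_spend": 73.5, "support_calls": 2}
print(prepare_for_public_cloud(record, secret_key=b"kept-on-premises"))
```

Because the token is deterministic for a given key, the same customer maps to the same token across exports, so training data can still be joined without exposing the original ID.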

ML-Centric Resource Management in Cloud Computing

The increasing prevalence of ML workloads in the cloud has given rise to ML-centric resource management. This strategy aims to efficiently manage cloud resources (compute, memory, and energy), either for ML tasks or by using ML techniques.

See, deploying ML workloads, especially deep learning, is resource-heavy. Thus, cloud vendors have added purpose-built resource management features, such as auto-scaling clusters that add nodes during training, or serverless ML infrastructure that is managed on the user's behalf. The aim here is to provide ML applications with the required resources on demand while avoiding waste and thereby reducing cost.

Lastly, cloud vendors use ML to manage cloud resources and operations themselves, a practice known as AIOps. ML models can predict user traffic and pre-provision servers, or scale down to consolidate VMs when demand is low. Self-learning systems respond to requests and automatically allocate resources in a cost-effective and efficient manner.

For instance, an ML-driven resource manager determines optimal workload routing or server cooling by processing thousands of telemetry data points instead of relying on static allocation rules. AI implementation in cloud infrastructure has been correlated with increased uptime, decreased energy consumption, and improved predictive capacity planning. AI and cloud computing form a feedback loop: the cloud supplies the compute that ML needs, and ML in turn improves cloud infrastructure and resource management. This self-optimizing cloud provides organizations with reduced operating costs and improved dependability, as AI autonomously tunes cloud resources (e.g., on-demand compute provisioning at specific locations).
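The traffic prediction and pre-provisioning idea described in this section can be sketched with a deliberately naive forecaster. A production system would use a trained model; here the forecast is just the latest value plus the average recent step, and the function names, capacity figure, and headroom are hypothetical.

```python
import math
from typing import Sequence


def forecast_next(load: Sequence[float], window: int = 3) -> float:
    """Naive forecast: last observation plus the average step over the window."""
    recent = list(load[-window:])
    if not recent:
        raise ValueError("need at least one observation")
    if len(recent) == 1:
        return float(recent[0])
    avg_step = (recent[-1] - recent[0]) / (len(recent) - 1)
    return max(0.0, recent[-1] + avg_step)


def servers_needed(expected_load: float, capacity_per_server: float,
                   headroom: float = 0.25, min_servers: int = 1) -> int:
    """Servers to pre-provision for the expected load plus a safety margin."""
    required = math.ceil(expected_load * (1.0 + headroom) / capacity_per_server)
    return max(min_servers, required)


# Hypothetical requests-per-minute history and per-server capacity.
history = [100.0, 200.0, 300.0]
expected = forecast_next(history)
print(expected, servers_needed(expected, capacity_per_server=100.0))
```

The same two functions cover scale-down: when the forecast falls, `servers_needed` returns a smaller number, bounded below by `min_servers`.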

AI/ML Applications in Cloud for Telecom, E-commerce, and Fintech

Across telecom, e-commerce, and fintech, cloud-based ML is being applied to solve critical business challenges.

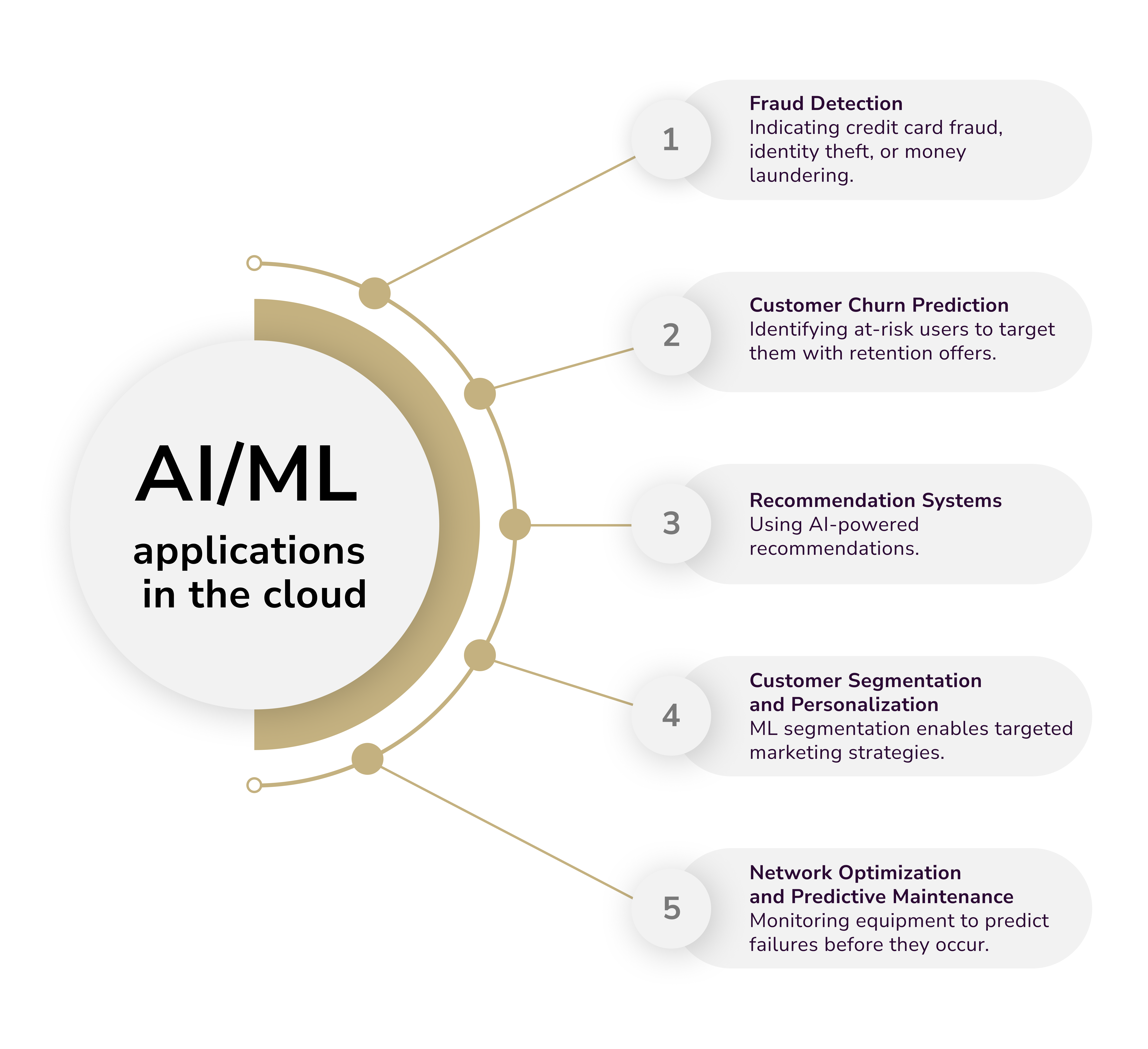

Fraud Detection

In fintech, ML models flag transactional anomalies to detect and prevent fraud such as credit card fraud, identity theft, and money laundering in real time. Similarly, telecom companies use ML models to catch SIM cloning and other forms of fraudulent access to services. Cloud AI services evaluate user behavior, identify patterns, and trigger alerts for deviation from expected behavior. Meanwhile, predictive analytics identify abnormal actions that have the potential to abuse the network. The speed of response to fraudulent activities is critical to mitigating losses. ML in cloud computing helps detect and learn new patterns of fraud in real time, thus providing a proactive and automated solution to reduce losses.
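As a rough illustration of flagging deviations from expected behavior, the sketch below keeps a per-customer spending history and flags amounts that sit far from the customer's usual range. It uses a plain z-score rule as a stand-in for a trained model; the `SpendingProfile` class, the threshold, and the minimum history length are hypothetical choices for the example.

```python
from statistics import mean, pstdev
from typing import List


class SpendingProfile:
    """Tracks one customer's transaction amounts and flags outliers."""

    def __init__(self, z_threshold: float = 3.0, min_history: int = 5):
        self.amounts: List[float] = []
        self.z_threshold = z_threshold
        self.min_history = min_history

    def is_suspicious(self, amount: float) -> bool:
        """Return True if the amount is far outside the customer's usual range."""
        if len(self.amounts) < self.min_history:
            return False  # not enough history to judge
        mu = mean(self.amounts)
        sigma = pstdev(self.amounts)
        if sigma == 0:
            return amount != mu
        return abs(amount - mu) / sigma > self.z_threshold

    def observe(self, amount: float) -> bool:
        """Score a transaction, then learn from it if it looks legitimate."""
        flagged = self.is_suspicious(amount)
        if not flagged:
            self.amounts.append(amount)
        return flagged


profile = SpendingProfile()
for amount in [20.0, 25.0, 22.0, 30.0, 18.0]:
    profile.observe(amount)
print(profile.observe(500.0), profile.observe(24.0))
```

Only unflagged transactions are added to the history, so a burst of fraudulent charges does not shift the baseline it is measured against.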

Customer Churn Prediction

Telecom companies employ ML on cloud customer data (call drop rates, support calls, usage trends) for predicting churn risk. For instance, models identify subscribers who consistently experience unfavorable network conditions and are likely to quit. In banking or e-commerce, AI models identify behavioral patterns, such as decreased engagement or adverse feedback, that often precede customer churn. Businesses can then implement targeted retention strategies for specific users to enhance retention. AI-based churn prediction models have become crucial for businesses, since keeping existing customers is significantly less expensive than acquiring new ones.
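A churn model of this kind can be sketched as a small logistic regression trained with gradient descent. Everything here is illustrative: the two features (dropped-call rate and a scaled support-call count), the six training rows, and the hyperparameters are invented for the example, and a real project would use a proper ML library and far more data.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_churn_model(rows: Sequence[Sequence[float]], labels: Sequence[int],
                      learning_rate: float = 0.5,
                      epochs: int = 500) -> Tuple[List[float], float]:
    """Fit logistic regression weights and bias with stochastic gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(rows, labels):
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def churn_probability(weights: Sequence[float], bias: float,
                      features: Sequence[float]) -> float:
    """Predicted probability that a customer with these features churns."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


# Invented data: [dropped_call_rate, scaled_support_calls]; 1 means churned.
X = [[0.1, 0.0], [0.2, 0.1], [0.15, 0.2], [0.8, 0.9], [0.9, 0.7], [0.7, 0.8]]
y = [0, 0, 0, 1, 1, 1]
weights, bias = train_churn_model(X, y)
print(round(churn_probability(weights, bias, [0.85, 0.9]), 3))
```

Customers whose predicted probability exceeds a chosen cutoff would then be routed to a retention campaign.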

Recommendation Systems

These are probably the most well-known applications of machine learning in the field of e-commerce. Recommendation systems leverage cloud-based algorithms to personalize suggestions based on a user’s unique interests. Amazon and Netflix are among the early adopters of this advanced technology. These systems use data on customers’ purchase histories, browsing behaviors, and ratings to develop profiles based on similarity or preferences, thus predicting items that a customer may purchase or enjoy. This leads to increased customer engagement and sales because customers enjoy a tailored shopping or viewing experience.

Many retailers now report that a significant portion of their revenue comes from AI-powered suggestive selling. Apart from retail, fintech uses recommendation engines to cross-sell related financial products, for example by suggesting changes to an investment strategy or offering a higher-interest savings account. Telecom providers generate recommendations for higher-value plans with bundled services.
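The similarity-based approach described in this section can be sketched as item-based collaborative filtering with cosine similarity. This is one common technique, not necessarily what any named company uses, and the ratings, user names, and function names are invented for the example.

```python
import math
from typing import Dict, List


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors keyed by user."""
    dot = sum(a[user] * b[user] for user in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(ratings: Dict[str, Dict[str, float]], user: str, top_n: int = 3) -> List[str]:
    """Rank unseen items by similarity to the items the user already rated."""
    # Invert user -> item ratings into item -> user vectors.
    item_vectors: Dict[str, Dict[str, float]] = {}
    for rater, rated in ratings.items():
        for item, score in rated.items():
            item_vectors.setdefault(item, {})[rater] = score

    seen = ratings[user]
    scores = {
        candidate: sum(cosine(vector, item_vectors[item]) * rating
                       for item, rating in seen.items())
        for candidate, vector in item_vectors.items()
        if candidate not in seen
    }
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda item: (-scores[item], item))[:top_n]


ratings = {
    "ann": {"laptop": 5, "mouse": 4},
    "bob": {"laptop": 5, "mouse": 5, "keyboard": 4},
    "cat": {"novel": 5, "bookmark": 4},
    "dan": {"laptop": 4},
}
print(recommend(ratings, "dan", top_n=2))
```

Here `dan` has only rated the laptop, so items that other laptop buyers also rated (mouse, keyboard) rank above unrelated ones.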

Customer Segmentation and Personalization

Machine Learning in the cloud allows businesses to understand, meaningfully segment, and target their customers. Marketers can personalize their approach to each segment after clustering customers using various dimensions such as behavioral, demographic, or value. An online retailer, for instance, may analyze customer profiles using ML to classify them as "bargain seekers," "brand loyalists," and "high spenders," and develop targeted promotions for each segment.

As per Google Cloud, marketers can use predictive analytics to segment customers into tiers and develop targeted marketing assets for each group, which enables personalization and boosts conversions and customer satisfaction. In telecom, segmentation might inform targeted retention deals for high-value users. Segmentation also drives personalization in banking via personalized financial advice for different client segments.
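Clustering customers into segments can be sketched with a small k-means implementation. The two features (orders per month and average basket value, both pre-scaled), the data points, and the farthest-point initialization are assumptions made for this example; a real pipeline would normally rely on a library implementation and careful feature scaling.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dist2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point: Point, centroids: List[Point]) -> int:
    """Index of the centroid closest to the point."""
    return min(range(len(centroids)), key=lambda i: dist2(point, centroids[i]))


def kmeans(points: List[Point], k: int, iterations: int = 20) -> Tuple[List[Point], List[int]]:
    """Cluster points into k groups; returns (centroids, label per point)."""
    # Deterministic farthest-point initialization.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist2(p, c) for c in centroids)))

    for _ in range(iterations):
        clusters: List[List[Point]] = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))

    labels = [nearest(p, centroids) for p in points]
    return centroids, labels


# Invented customers: (orders per month, average basket value), pre-scaled.
customers = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 9.0), (9.0, 8.0), (8.5, 9.5)]
centroids, labels = kmeans(customers, k=2)
print(labels)
```

Each resulting label can then be given a business name, such as the "bargain seekers" or "high spenders" segments mentioned above, and targeted with its own promotions.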

Network Optimization and Predictive Maintenance (Telecom)

In telecommunications, AI/ML is extensively used to optimize network operations and maintain infrastructure proactively. Cloud-based ML models analyze network traffic patterns and performance data to optimize network configurations in real time. These ML models adjust bandwidth, data routing, and network capacity on the fly to reduce latency and increase throughput for users. Such AI-driven network tuning improves service quality while lowering cost.

Moreover, telecoms use ML for predictive maintenance of their network equipment. By monitoring signals from cell towers, routers, or data center servers, algorithms can predict failures or capacity issues before they happen. This might improve service by reducing downtime and service disruptions, which in turn reduces customer churn. The predictive maintenance models are efficient because the cloud correlates and analyzes data from thousands of devices with applied ML.
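Predicting a failure before it happens can be illustrated with the simplest possible model: fit a straight line to recent readings and estimate when the trend crosses a failure threshold. The temperature readings, the threshold, and the function names are hypothetical, and real predictive maintenance models are considerably richer than a linear trend.

```python
from typing import Optional, Sequence, Tuple


def fit_line(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept for values sampled at 0, 1, 2, ..."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def samples_until_threshold(values: Sequence[float], threshold: float) -> Optional[float]:
    """Estimated number of future samples before the trend reaches the threshold.

    Returns None when there is too little data or the trend is not rising.
    """
    if len(values) < 2:
        return None
    slope, intercept = fit_line(values)
    if slope <= 0:
        return None
    current = intercept + slope * (len(values) - 1)
    if current >= threshold:
        return 0.0
    return (threshold - current) / slope


# Invented hourly temperature readings from one piece of network equipment.
readings = [60.0, 62.0, 64.0, 66.0, 68.0]
print(samples_until_threshold(readings, threshold=80.0))
```

A maintenance ticket could be raised whenever the estimate drops below a chosen lead time, giving crews time to act before the equipment fails.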

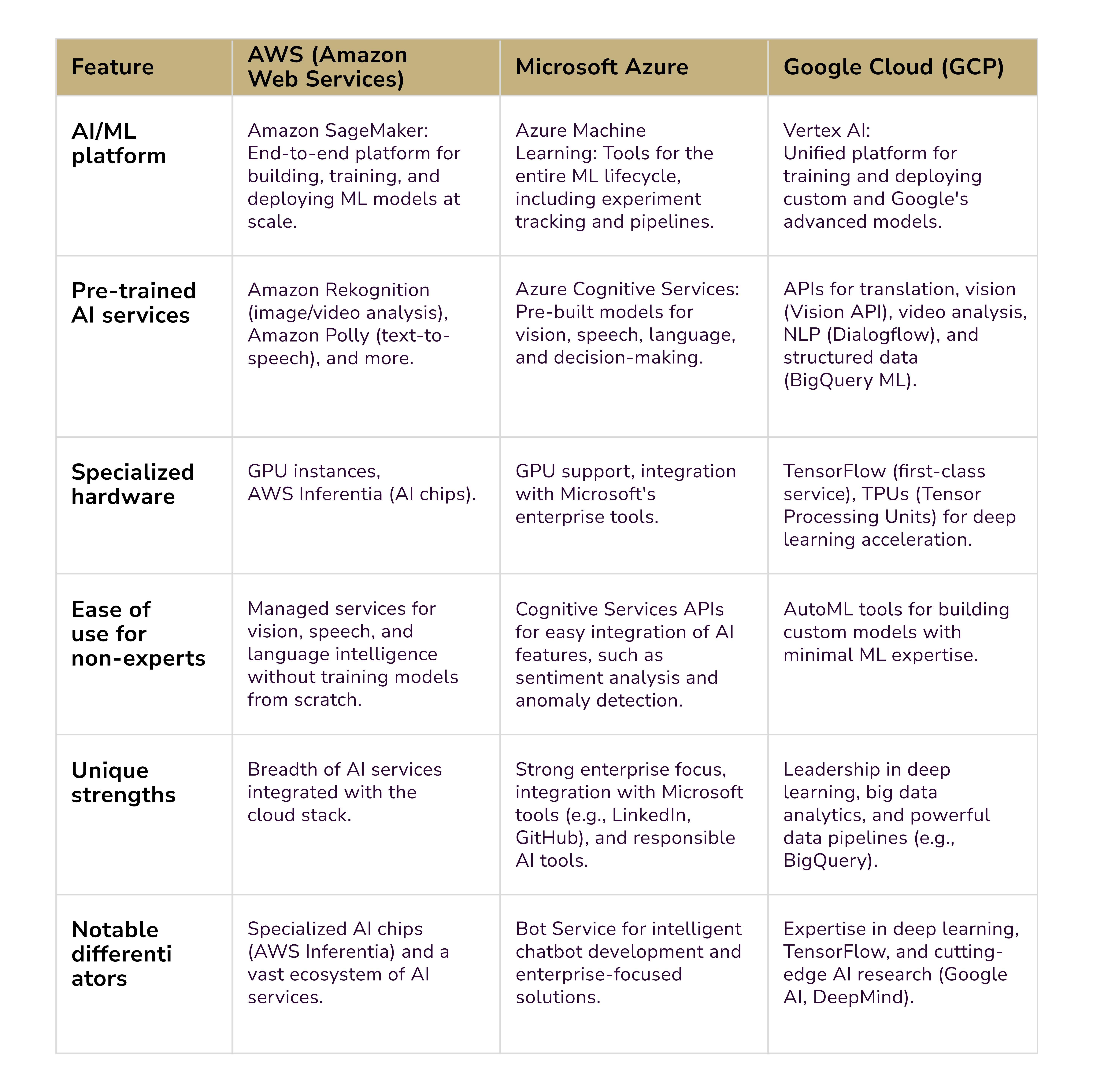

Analyzing Cloud Machine Learning Services: AWS, Azure, GCP

The top cloud service providers, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), offer a substantial array of AI and machine learning services to address the challenges businesses face. Each offers a similar suite of technologies and capabilities, yet each has its own unique offerings specific to its business strategy and market position.

AWS

Amazon has invested heavily in AI/ML as part of its cloud. A flagship service is Amazon SageMaker, an end-to-end platform for building, training, and deploying ML models. It also streamlines and automates the ML process for data science teams. In addition to SageMaker, AWS has a number of pre-trained AI services, including Amazon Rekognition for object and facial recognition analysis in images and videos, and Amazon Polly for converting written text to natural-sounding speech. Developers can build applications that incorporate vision, speech, and language intelligence powered by these services, without developing and training the models from the ground up. AWS's extensive ecosystem of products and services is a key differentiator: it includes general-purpose compute, specialized ML hardware (AWS Inferentia), and AI services for diverse use cases.

Microsoft Azure

Azure ML assists users throughout the ML model lifecycle and includes services for developing and deploying models, experiment tracking, model management, and pipeline orchestration. Azure Cognitive Services can be leveraged in domains like vision (e.g., image recognition, OCR), speech (voice AI, speech-to-text, and translation), language understanding, and decision-making.

These APIs can be used to add AI components such as sentiment analysis and anomaly detection to applications. Azure also has an integrated Bot Service for building and deploying chatbots. Azure reflects Microsoft’s strong positioning in enterprise AI, with an emphasis on seamless integration into enterprise-grade development. It also offers tools and frameworks for building responsible AI (explainability and fairness), and it ties into the wider Microsoft ecosystem, including LinkedIn and GitHub.

Google Cloud (GCP)

Google's investment in AI research and open-source technology, including DeepMind, is one of the driving factors behind the growth of Google Cloud. Google Cloud AI is unified under the Vertex AI platform, which combines the previous AI Platform and AutoML services. Vertex AI provides the infrastructure necessary for users to train and deploy models, including custom models as well as Google’s advanced models. Google's deep learning expertise is a significant differentiator: Google developed TensorFlow, an open-source ML framework, and its cloud provides Cloud TPUs (Tensor Processing Units) for accelerating deep learning models. GCP's AutoML tools allow users with little machine learning background to automatically train and tune custom models for vision, language, and tabular data through a simplified interface. Google also provides API access to advanced pre-trained models for translation, image analysis (Vision API), and video analysis, along with Dialogflow for conversational AI and BigQuery ML, among others. Google Cloud offers sophisticated data pipelines for data science, including integrated AI and analytics.

All three providers have made major strategic moves in generative AI virtually every year between 2023 and 2025. Choosing between them may come down to existing ecosystem preference, specific tool maturity, or pre-existing data and workloads on a given cloud. If you need help in determining the platform that suits your needs best, contact Impressit for a quick discussion on your AI opportunities.

Roman Zomko
