Voice AI for Enhanced User Experience

Voice AI started as merely an input method. It has since changed the entire landscape of user experience design by removing traditional barriers between humans and technology and creating more natural, accessible, and efficient interactions.

Modern voice AI solutions leverage advanced natural language processing, machine learning, and real-time speech synthesis to create seamless, conversational interactions that feel remarkably human. These systems can understand context, detect emotions, and adapt responses to individual user preferences, transforming traditional customer service models and operational workflows. For businesses, custom AI development of voice solutions and embedding conversational AI may become an integral part of their strategy toward enhanced UX.

Key Application Areas for Voice AI

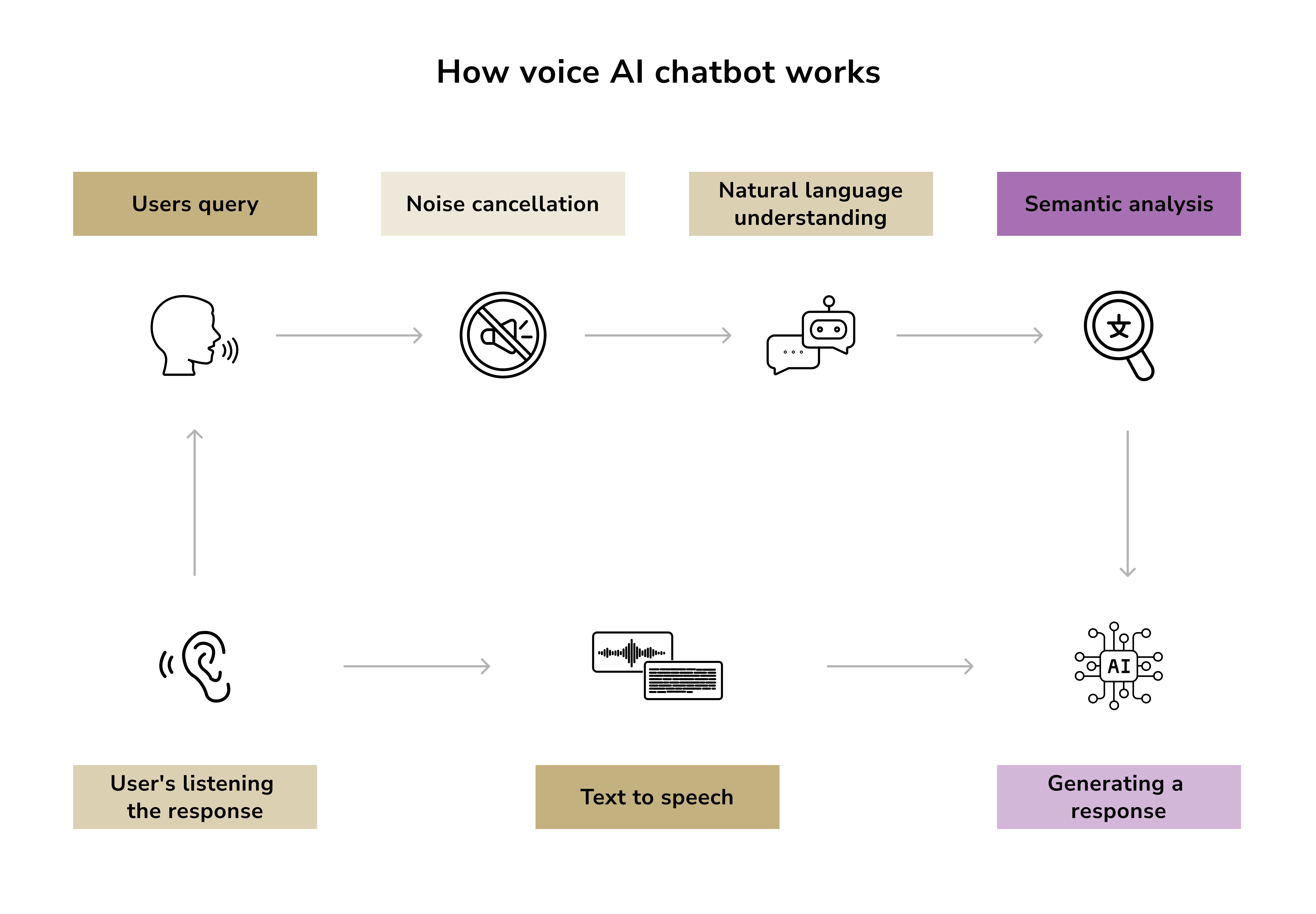

Major technological advances tend to inspire businesses to refine the customer experience, and voice AI is no different. AI chatbots for business have changed customer support by providing 24/7 assistance without human intervention. Voice AI systems can handle complex inquiries, route calls intelligently, and resolve issues faster than traditional methods. Here is an example:

Bank of America's Erica is a prime example of how conversational banking can be integral to a great user experience. The secret sauce with AI solutions is continuous tuning: since its launch, Erica has received over 75,000 updates. Initially, Erica could answer 200 to 250 types of questions. Now it recognizes more than 700 intents and answers so quickly that the average client interaction lasts 48 seconds.

The healthcare industry also has an ongoing relationship with conversational AI. Hospitals are using voice AI to streamline patient interactions, automate appointment scheduling, and provide medication reminders. Voice technology also assists with clinical documentation, allowing medical professionals to focus more on patient care rather than administrative tasks.

Integration in Consumer Devices

Embedding voice AI into devices and apps is one of the best ways to demonstrate its versatility and user appeal (Erica has been an integral part of Bank of America's mobile app since its launch). Smartphones, smart speakers, and wearable devices now feature sophisticated voice assistants that can perform multiple tasks simultaneously.

- Smartphone integration: Voice AI in mobile devices enables hands-free navigation, message composition, and app control. Users can dictate emails, search for information, and manage calendars without touching their screens.

- Smart speaker ecosystem: Devices like Amazon Alexa and Google Assistant have created entire ecosystems of voice-controlled applications, from music streaming to home automation.

- Automotive applications: Modern vehicles integrate voice AI for safer driving experiences. Drivers can control navigation, make calls, and adjust vehicle settings without taking their hands off the wheel.

Enhanced Customer Support

AI in customer experience management facilitates business tasks related to customer inquiries. Voice AI systems can analyze customer emotions through tone and speech patterns, enabling more empathetic and effective responses.

- Intelligent call routing: Voice AI can identify customer intent and route calls to the most appropriate department or specialist, reducing transfer times and improving first-call resolution rates.

- Multilingual support: Advanced voice AI platforms, like ElevenLabs, support over 30 languages, enabling businesses to serve global customers without hiring multilingual staff.

- Predictive assistance: By analyzing conversation patterns and customer history, voice AI can anticipate needs and proactively offer solutions before customers explicitly request help.

In-App Voice Assistants and Voice Navigation

Voice AI text-to-speech capabilities enable apps to read content aloud, while voice navigation simplifies complex user interfaces.

- eCommerce applications: Voice shopping allows customers to search products, compare prices, and complete purchases using voice commands, creating more natural shopping experiences.

- Banking apps: Financial institutions use voice AI for secure authentication and transaction processing, combining convenience with advanced security measures.

- Navigation and travel: Voice-enabled navigation apps provide turn-by-turn directions and let users search for nearby restaurants, gas stations, and points of interest without manual input.

Accessibility and Inclusive Services through Voice AI

Voice AI technology is making digital services accessible to users with disabilities. For individuals with visual impairments, voice interfaces provide alternative ways to navigate websites, use applications, and access information.

- Screen reader enhancement: Voice AI improves screen reader functionality by providing more natural-sounding speech and better context understanding.

- Motor impairment support: Users with limited mobility can control devices, compose messages, and perform complex tasks via voice commands rather than physical interactions.

- Cognitive assistance: Voice AI can help users with cognitive disabilities by simplifying complex processes and providing step-by-step guidance through tasks.

Conversational AI with Emotional Intelligence

The latest voice AI technologies incorporate emotional intelligence capabilities, enabling systems to detect user emotions through voice analysis and respond appropriately. This advancement creates more empathetic and personalized interactions.

- Sentiment analysis: Voice AI can identify frustration, satisfaction, or confusion in customer voices, allowing for more appropriate response strategies.

- Adaptive communication: Systems adjust their tone, pace, and language complexity based on detected user emotions and preferences.

- Therapeutic applications: Healthcare providers use emotionally intelligent voice AI for mental health support, providing empathetic responses and crisis intervention capabilities.

Employee Training and Development

Voice-enabled training platforms can provide personalized learning experiences and immediate feedback.

- Interactive training modules: Employees can engage with training content through voice commands, making learning more interactive and engaging.

- Language learning: Voice AI helps employees improve communication skills by providing pronunciation feedback and conversational practice.

- Performance coaching: Voice analytics can identify areas for improvement in customer service calls and provide targeted coaching recommendations.

Content Creation and Marketing

Voice AI technology allows businesses to create personalized marketing messages, podcasts, and educational content without requiring professional voice talent.

- Automated narration: Marketing teams can generate voice-overs for videos, advertisements, and presentations using AI voice synthesis.

- Personalized audio messages: Businesses can create customized audio content for different customer segments, enhancing engagement and conversion rates.

- Podcast production: Voice AI streamlines podcast creation by generating consistent, professional-quality narration and enabling easy editing and customization.

How Voice AI Improves UX

Several companies have pioneered voice AI integration with remarkable success. Netflix uses voice AI to power its recommendation engine through smart TV interfaces, allowing users to search for content using natural language commands. This implementation has increased user engagement by 40% and reduced content discovery time.

Starbucks deployed voice AI through its mobile app, enabling customers to place orders through voice commands. The system processes over 2 million voice orders monthly, reducing wait times and improving order accuracy. Customer satisfaction scores increased by 15% following implementation.

American Express integrated voice AI into its customer service operations, handling routine inquiries and transaction disputes. The system resolves 60% of customer calls without human intervention, reducing average call resolution time from 8 minutes to 3 minutes.

Why User Experience Matters More Than Ever

User experience has become the primary differentiator in competitive markets. Companies that prioritize UI/UX design see measurable benefits: increased customer satisfaction, higher retention rates, and improved business outcomes.

Voice AI chatbots for business applications demonstrate this principle clearly. When customers can resolve issues through natural conversation rather than navigating complex phone trees or chat interfaces, satisfaction scores increase significantly. The technology removes friction from common interactions, allowing users to accomplish tasks more intuitively.

AI in customer experience management extends beyond simple automation. Modern voice systems analyze conversation patterns, detect emotional cues, and adapt responses accordingly. This creates personalized experiences that feel more human and responsive to individual needs.

The business case for superior UX grows stronger as customer acquisition costs rise. Retaining existing customers through better experiences costs significantly less than acquiring new ones. Voice AI provides a pathway to achieve this by making interactions more convenient and satisfying.

The Growing Importance of Sound Design in UX

Sound design has evolved from a nice-to-have feature to an essential component of user experience. As voice interfaces become more prevalent, the quality and thoughtfulness of audio elements directly impact user perception and engagement.

UI sound design focuses on specific interface interactions, such as the click of a button, the notification chime, or the confirmation beep. These sounds provide immediate feedback about user actions and system status.

UX sound design takes a broader approach, considering how audio elements contribute to the overall user journey. This includes ambient soundscapes, brand audio signatures, and the tonal qualities of voice AI text-to-speech systems.

The distinction matters because each serves a different purpose in the user experience hierarchy. UI sound design addresses functional needs, while UX sound design builds emotional connections and reinforces brand identity.

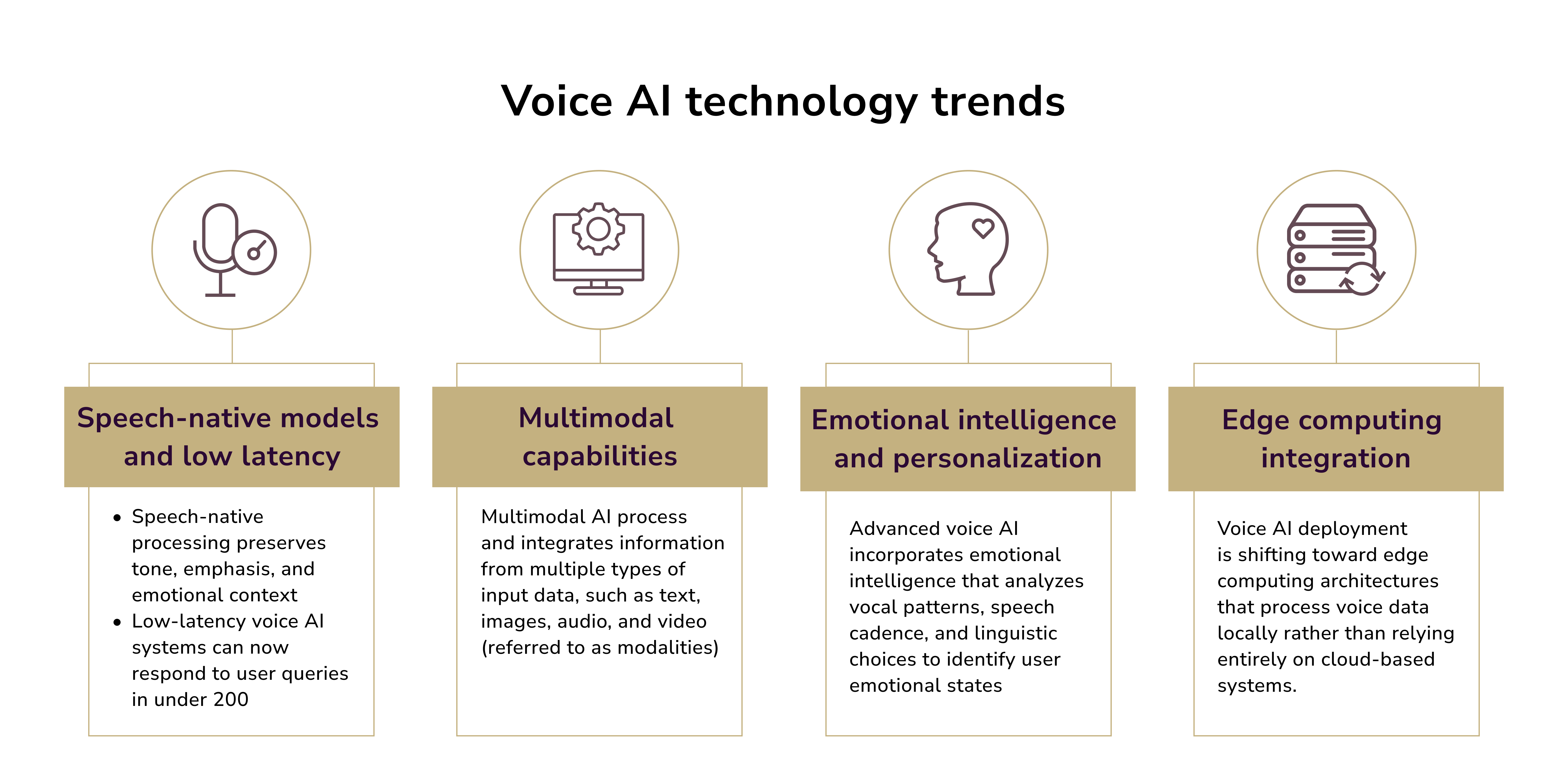

The latest technologies used in voice AI solutions promise a bright future for sound design and UX.

How Industries are Using Voice AI to Improve UX

Rather than relying solely on traditional interfaces, companies now use conversational AI to provide immediate, personalized responses that feel natural and human-like.

Voice AI in Banking

Banks and investment firms deploy voice AI systems to handle routine transactions, account inquiries, and payment processing. These systems use advanced natural language processing to understand complex financial terminology and provide accurate responses about account balances, transaction histories, and loan applications.

Investment firms utilize voice AI to deliver real-time market updates and portfolio summaries. Clients can request stock prices, market analysis, and investment recommendations through natural conversation, eliminating the need to navigate complex mobile applications or websites.

Note: Security remains paramount in financial voice AI applications. Voice biometrics, used as one factor in multi-factor authentication, help ensure that only authorized users access sensitive financial information. This technology analyzes vocal patterns, speech rhythm, and other unique characteristics to verify user identity.

Voice AI Example in Banking

JPMorgan Chase uses voice biometrics for customer authentication, reducing fraud attempts while improving login success rates.

Wells Fargo integrated voice AI into its mobile banking app, enabling customers to transfer funds and pay bills using voice commands. The feature increased mobile app usage and improved customer satisfaction scores across all age demographics.

Voice AI in Healthcare

Hospital systems use conversational interfaces to guide patients through pre-appointment preparations, collect medical histories, and provide post-treatment instructions. This approach reduces wait times and ensures consistent information delivery.

Telehealth platforms integrate voice AI generators to create more engaging virtual consultations. The application of AI in telemedicine helps translate medical terminology into patient-friendly language. This ensures clear communication between healthcare providers and patients, regardless of medical literacy levels.

Note: Voice AI speech-to-text technology proves particularly valuable in healthcare settings. Medical professionals can dictate patient notes, treatment plans, and prescription details while maintaining focus on patient care. The technology converts spoken words into accurate medical documentation, reducing transcription errors and saving valuable time.

Voice AI Example in Healthcare

Mayo Clinic uses voice AI to transcribe physician notes during patient consultations. This is a good example of how AI reduces administrative burden and lets doctors focus on patient interaction.

Epic Systems integrated voice AI into its electronic health record platform, enabling hands-free data entry and retrieval. Physicians can dictate patient information while maintaining sterile conditions during procedures. Epic's platform now serves 42% of the hospital market, which gives its voice features a wide reach across healthcare.

Nuance Communications' Dragon Medical platform processes over 300 million voice interactions annually across healthcare facilities. The platform helps physicians create clinical documentation up to 45% faster and collect up to 20% more relevant data.

Voice AI in eCommerce

AI in customer experience management becomes evident through voice-enabled shopping assistants. These systems learn from previous purchases, browsing history, and stated preferences to suggest relevant products. The technology understands context, allowing customers to refine searches through follow-up questions and clarifications.

Order tracking and customer support benefit from voice AI integration. Customers can check delivery status, modify orders, and resolve common issues without navigating complex phone menus or waiting for human representatives. The system provides immediate responses to routine inquiries while escalating complex issues to human agents when necessary.

Voice commerce continues expanding as smart speakers and mobile devices become more sophisticated. Retailers optimize their voice AI systems to handle complex product catalogs, process payments securely, and provide detailed product information through conversational interfaces.

Voice AI Example in eCommerce

Walmart integrated voice AI into its grocery pickup service, allowing customers to add items to their cart using voice commands. The feature increased average order value and reduced cart abandonment rates.

Shopify's voice AI integration enables merchants to manage inventory and process orders through voice commands. Store owners can check product availability, update pricing, and generate sales reports hands-free. This use of voice AI has improved merchant productivity.

Target's voice AI system provides product recommendations based on customer preferences and purchase history. The technology analyzes voice patterns to determine customer sentiment, enabling personalized marketing messages that increase conversion rates.

Implementing Voice AI: Technical Considerations

Now that we've laid out the basics of voice AI applications, we can move on to their technical implementation aspects. The steps described below will be useful to keep in mind if you're creating AI chatbots or sophisticated voice AI generators.

Selecting Your AI Platform Foundation

The platform you choose forms the backbone of your voice AI implementation. Modern voice AI platforms offer varying levels of customization, scalability, and integration capabilities that directly impact your system's performance.

- Cloud-based solutions provide immediate scalability and reduced infrastructure management. Amazon Alexa for Business, Google Cloud Speech-to-Text, and Microsoft Azure Cognitive Services offer enterprise-grade reliability with built-in natural language processing capabilities. These platforms excel in handling high-volume requests and provide robust APIs for integration.

- On-premises solutions offer greater control over data privacy and customization. Open-source speech recognition frameworks like Mozilla DeepSpeech and Kaldi provide complete control over your speech-to-text implementation, but require significant technical expertise and infrastructure investment.

- Hybrid approaches combine cloud processing power with on-premises control. This architecture allows sensitive data processing locally while leveraging cloud capabilities for resource-intensive tasks like model training and updates.

Note: Platform selection should align with your specific requirements for data security, processing volume, customization needs, and integration complexity. Consider factors like API rate limits, pricing models, and long-term vendor support when making this critical decision.

Architecting Effective Conversation Flow

Conversation flow design determines how naturally your voice AI interacts with users. Effective flow architecture balances user expectations with system capabilities while maintaining contextual awareness throughout interactions.

- Dialog management systems handle the conversation state and determine appropriate responses based on user input and conversation history. These systems must track multiple conversation threads, manage context switches, and handle interruptions gracefully.

- Intent mapping translates user utterances into actionable system commands. Well-designed intent recognition systems account for variations in phrasing, regional dialects, and conversational nuances. Create comprehensive intent libraries that cover expected user goals while building fallback mechanisms for unrecognized inputs.

- Response generation strategies range from template-based responses to dynamic content creation. Template approaches provide consistency and control but may feel rigid. Meanwhile, dynamic generation offers more natural interactions but requires sophisticated language models and careful quality control.

Note: Consider implementing multi-turn conversations that maintain context across extended interactions. This approach enables more sophisticated AI in customer experience management by allowing users to build complex requests through natural dialogue.
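The pieces described above (intent mapping, a fallback for unrecognized input, template responses, and multi-turn context) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production dialog manager: the intent names, trigger phrases, and response templates are hypothetical, and real systems would use a trained classifier rather than keyword matching.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical intent library: intent name -> trigger phrases.
INTENT_PHRASES = {
    "check_balance": ["balance", "how much money"],
    "transfer_funds": ["transfer", "send money"],
}

# Template-based responses keep wording consistent and easy to review.
RESPONSES = {
    "check_balance": "Here is your balance.",
    "transfer_funds": "How much would you like to transfer?",
}
FALLBACK = "Sorry, I didn't catch that. Could you rephrase?"


@dataclass
class Conversation:
    """Tracks state across turns so follow-ups can reuse earlier context."""
    last_intent: Optional[str] = None
    slots: dict = field(default_factory=dict)


def match_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose trigger phrase appears in the utterance."""
    text = utterance.lower()
    for intent, phrases in INTENT_PHRASES.items():
        if any(phrase in text for phrase in phrases):
            return intent
    return None


def handle_turn(conv: Conversation, utterance: str) -> str:
    intent = match_intent(utterance)
    if intent is not None:
        conv.last_intent = intent
        return RESPONSES[intent]
    # No new intent: if the previous turn asked for an amount,
    # treat this utterance as the answer (multi-turn context).
    if conv.last_intent == "transfer_funds" and "amount" not in conv.slots:
        conv.slots["amount"] = utterance.strip()
        return f"Transferring {conv.slots['amount']}."
    # Fallback for anything the intent library does not cover.
    return FALLBACK
```

Calling `handle_turn` with "I want to send money" and then "fifty dollars" on the same `Conversation` shows the second utterance being interpreted through the context of the first.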

Mastering Language Training and Intent Recognition

Accurate intent recognition forms the core of effective voice AI systems. Your training approach will directly impact system accuracy, user satisfaction, and operational efficiency.

Consider the following steps for intent recognition training:

- Curate diverse, representative samples that reflect your actual user base.

- Include variations in pronunciation, accent, background noise, and speaking styles to improve real-world performance.

- Implement feedback loops that capture user corrections, successful interactions, and failure cases.

- Plan for language-specific training datasets and culturally appropriate response patterns.

Keep in mind that multi-language support extends beyond simple translation. Different languages have unique grammatical structures, cultural contexts, and interaction patterns that affect intent recognition accuracy.
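One way to act on the feedback-loop step is to measure intent recognition accuracy separately for each language or accent group, so that underperforming groups can be prioritized when collecting new training samples. The sketch below assumes logged interactions are available as `(group, predicted_intent, correct_intent)` tuples; that record shape, the group labels, and the 0.9 threshold are illustrative assumptions.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute intent accuracy per group.

    records: iterable of (group, predicted_intent, correct_intent) tuples.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted == actual:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def groups_needing_data(records, threshold=0.9):
    """List groups whose accuracy falls below the (assumed) threshold."""
    scores = accuracy_by_group(records)
    return sorted(group for group, acc in scores.items() if acc < threshold)
```

Running this over a week of corrected interactions gives a simple, repeatable signal for where the training set is least representative.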

Integrating Voice AI into Existing Systems

Modern voice AI solutions must seamlessly connect with existing business systems, databases, and workflows.

- API architecture should follow RESTful principles with clear endpoint definitions and comprehensive error handling. Design APIs that support both synchronous and asynchronous operations to accommodate different integration patterns and performance requirements.

- Data flow management ensures information moves efficiently between voice AI components and existing systems. Implement proper data validation, transformation, and routing mechanisms. Consider data privacy requirements and compliance standards when designing integration pipelines.

- Authentication and security protocols protect both user data and system integrity. Implement multi-factor authentication, encrypted communications, and access controls that align with your organization's security policies. AI security risks require careful data handling, which we will discuss below.

- Legacy system compatibility challenges require creative solutions. Many organizations need to integrate voice AI with older systems that lack modern API capabilities. Consider implementing middleware layers or data synchronization tools to bridge these gaps effectively.

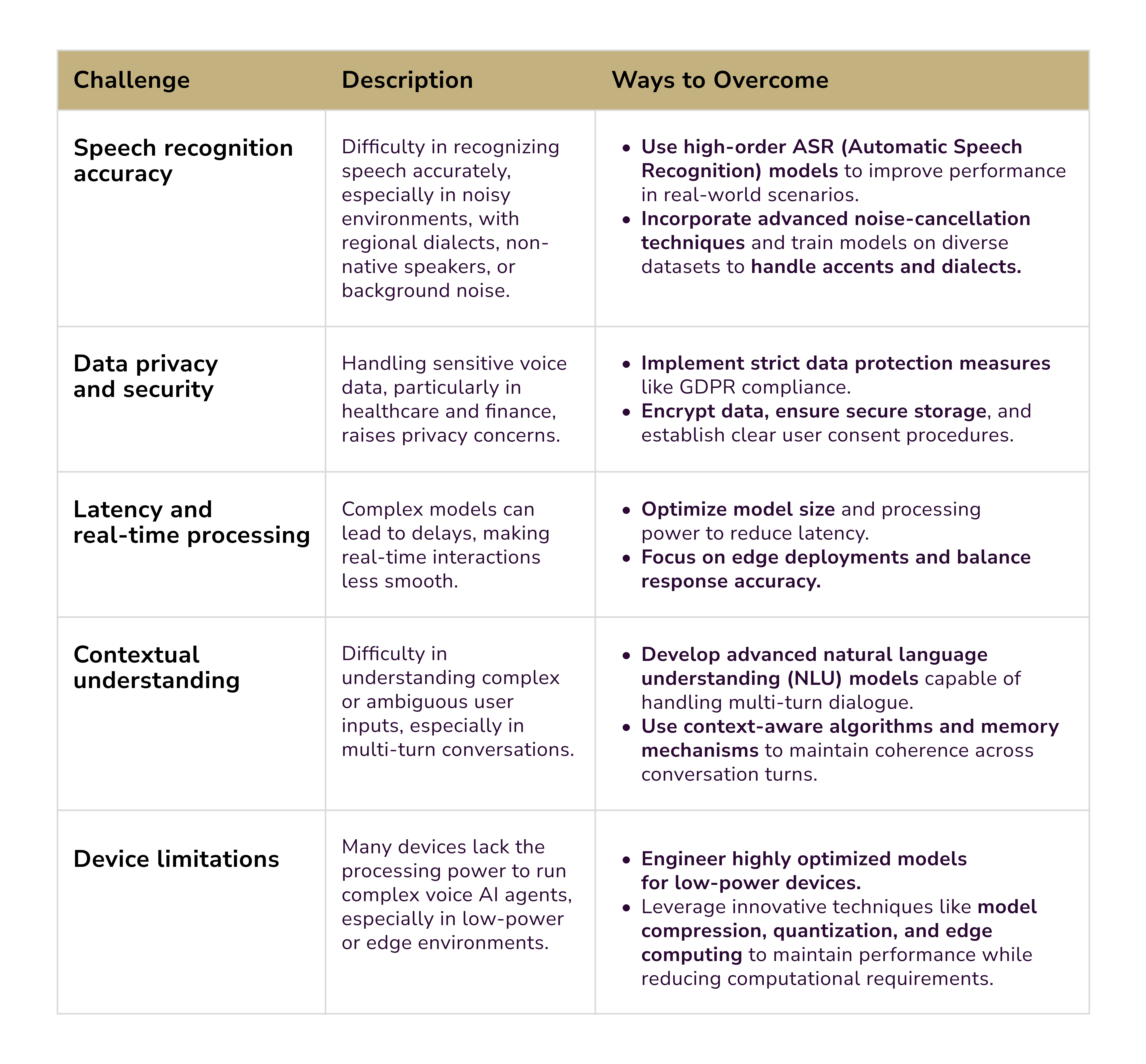

Keep in mind that voice AI comes with its challenges, which you should address before implementation.

Essential Technical Components

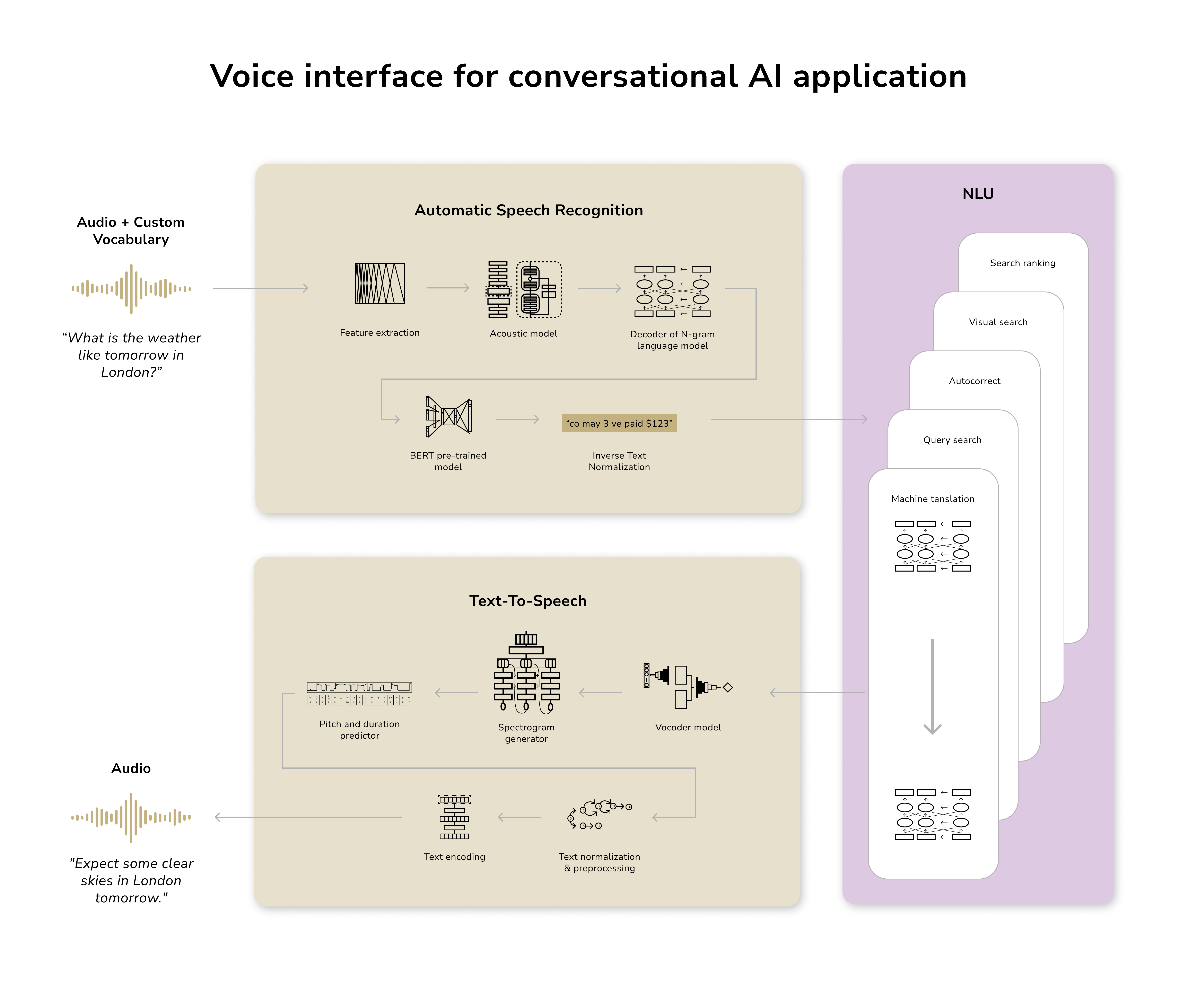

Voice AI systems require carefully orchestrated technical components working in harmony to deliver seamless user experiences. The necessary frontend components include voice capture systems that must handle varying audio quality conditions. Consider implementing noise reduction algorithms, automatic gain control, and echo cancellation to ensure consistent input quality.
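As an illustration of automatic gain control, the sketch below normalizes one frame of audio toward a target loudness. It is a deliberately simplified, frame-level version: samples are assumed to be floats in the range -1.0 to 1.0, the target level, gain cap, and noise floor are arbitrary example values, and real implementations typically smooth the gain over time rather than computing it per frame.

```python
import math


def rms(samples):
    """Root-mean-square level of a frame of samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def apply_agc(samples, target_rms=0.1, max_gain=10.0, noise_floor=0.001):
    """Scale a frame toward target_rms, with a capped gain.

    Frames below noise_floor are returned unchanged so that
    background noise is not amplified during silence.
    """
    level = rms(samples)
    if level < noise_floor:
        return list(samples)
    gain = min(target_rms / level, max_gain)
    # Clip to the valid sample range after applying the gain.
    return [max(-1.0, min(1.0, s * gain)) for s in samples]
```

The gain cap and noise floor are the two guards that matter most in practice: without them, a quiet room gets boosted into audible hiss.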

You will also need real-time feedback to keep users engaged during processing delays. This can include visual indicators, audio confirmations, and progress updates. These cues help keep users from abandoning interactions during natural processing pauses.

For the backend, you will need to take care of speech-to-text processing. This requires a robust infrastructure capable of handling concurrent requests with minimal latency. Employ proper queuing mechanisms and load balancing to maintain performance during peak usage periods.

Next, implement context management systems that track conversation state across multiple interactions. Design efficient data structures that maintain relevant context while preventing memory bloat in long-running conversations. Finally, implement caching mechanisms for common responses while maintaining the flexibility to generate dynamic responses when required.
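A bounded context window and a response cache can both be sketched with standard library tools. The example below is an assumption-laden illustration: the `ConversationContext` class, the ten-turn limit, and the `cached_response` function are hypothetical names chosen for this sketch, and the cached function stands in for a more expensive lookup or generation step.

```python
from collections import deque
from functools import lru_cache


class ConversationContext:
    """Keeps only the most recent turns to avoid unbounded memory growth."""

    def __init__(self, max_turns=10):
        # A deque with maxlen drops the oldest turn automatically.
        self._turns = deque(maxlen=max_turns)

    def add_turn(self, speaker, text):
        self._turns.append((speaker, text))

    def recent(self):
        return list(self._turns)


@lru_cache(maxsize=256)
def cached_response(intent: str) -> str:
    """Stand-in for an expensive lookup; repeated intents hit the cache."""
    return f"Standard answer for {intent}"
```

Dynamic responses simply bypass `cached_response`, so the cache covers the common cases without limiting what the system can say.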

For the integration layer, API endpoints serve as the bridge between your voice AI system and external applications. Design comprehensive APIs that support all necessary operations while maintaining security and performance standards. Real-time communication protocols like WebSockets provide the immediate responsiveness that users expect from voice interactions. Implement proper connection management and failover mechanisms to maintain reliability.

Quality Assurance and Testing Strategies

Testing strategies should cover technical functionality, user experience, and system performance under various load conditions. Consider three essential steps in this process:

- Implement unit tests for individual components, integration tests for system interactions, and end-to-end tests for complete user workflows.

- Conduct testing sessions with representative user groups to identify usability issues and performance gaps that automated testing might miss.

- Establish baseline measurements and alerting thresholds to maintain consistent service quality.
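For the baseline and alerting step, one commonly used speech recognition metric is word error rate (WER): the word-level edit distance between a reference transcript and the system's output, divided by the number of reference words. The article does not prescribe a metric, so treat WER, the function names, and the tolerance value below as one possible choice rather than the required one.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # Levenshtein distance over words, keeping one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def breaches_threshold(current_wer: float, baseline_wer: float,
                       tolerance: float = 0.02) -> bool:
    """Alert when WER drifts above the baseline by more than the tolerance."""
    return current_wer > baseline_wer + tolerance
```

Computing this on a fixed set of recorded test utterances after every release gives a baseline that regressions can be measured against.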

Voice Data Security and Compliance

Voice data presents unique security challenges that traditional text-based systems don't face. Voice recordings contain biometric identifiers that can permanently identify individuals, making them particularly sensitive from a privacy perspective.

- Encrypt all voice data in transit and at rest. Voice AI systems should use AES-256 encryption at a minimum, with separate encryption keys for different data types. This applies whether your system processes real-time conversations or stores recorded interactions for analysis.

- Collect only the voice data necessary for your specific business functions. Many voice AI implementations can operate effectively using transcribed text rather than storing actual audio files. This approach, called data minimization, significantly reduces security risks while maintaining functionality.

- Design your storage systems with voice data sensitivity in mind. Implement air-gapped backups, regular security audits, and automated deletion schedules for voice recordings that are no longer needed for business operations.
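Data minimization and automated deletion schedules can be expressed as two small functions. The sketch below is illustrative only: the `VoiceRecord` fields and the 30-day retention period are assumptions, and it operates on in-memory records, so deleting the underlying audio files and backups would still need to be handled by your storage layer.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION = timedelta(days=30)  # assumed policy, adjust to your requirements


@dataclass
class VoiceRecord:
    record_id: str
    created_at: datetime
    transcript: str
    audio_path: Optional[str]  # None when only the transcript is kept


def minimize(record: VoiceRecord) -> VoiceRecord:
    """Data minimization: keep the transcript, drop the audio reference."""
    return VoiceRecord(record.record_id, record.created_at,
                       record.transcript, None)


def purge_expired(records, now=None):
    """Return only the records still inside the retention window."""
    now = now or datetime.now(timezone.utc)
    return [r for r in records if now - r.created_at <= RETENTION]
```

Running `purge_expired` on a schedule, and `minimize` as soon as transcription succeeds, keeps the amount of stored biometric audio as small as the business case allows.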

Also, proper access control prevents unauthorized individuals from accessing sensitive voice AI systems and the data they process. This becomes particularly critical when AI chatbots for business handle customer service interactions or financial transactions.

- Establish role-based access controls and permission levels based on job functions. Customer service representatives might need access to current conversation transcripts, while system administrators require broader technical access. Voice AI generators used for content creation should have different permission structures than customer-facing systems.

- Require multiple authentication factors for anyone accessing voice AI systems. This includes not just human users but also API connections between different systems in your technology stack.

- Conduct quarterly reviews of who has access to what voice AI capabilities. Remove permissions for employees who have changed roles or left the organization, and verify that current access levels match actual job requirements.
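Permission levels tied to job functions, plus a periodic access review, can be sketched as a lookup table and a comparison. The role names, permission strings, and the idea of comparing grants against a current roster are hypothetical choices for this illustration, not a reference to any specific access management product.

```python
# Hypothetical mapping of roles to permissions.
ROLE_PERMISSIONS = {
    "support_agent": {"read_transcript"},
    "admin": {"read_transcript", "read_audio", "manage_models"},
    "content_creator": {"generate_voice"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Unknown roles get no permissions (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def stale_grants(grants: dict, current_roles: dict) -> list:
    """Find users whose granted role no longer matches their current role.

    grants: user -> role recorded in the voice AI system.
    current_roles: user -> role in the current roster; users who left
    the organization are simply absent.
    """
    return sorted(user for user, role in grants.items()
                  if current_roles.get(user) != role)
```

The output of `stale_grants` is the worklist for a quarterly review: each listed user has either changed roles or left.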

Finally, voice AI implementations must comply with various regulations depending on your industry and geographic location. These requirements often overlap and can create complex compliance obligations.

The GDPR gives individuals specific rights over their voice data, including the right to know what data you collect and how you use it, and the right to request its deletion. Your voice AI systems must be able to identify and remove specific individuals' data upon request.
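Being able to identify and remove one person's data is much easier if records are indexed by user from the start. The sketch below shows that idea with an in-memory store. The `VoiceDataStore` class and its method names are hypothetical, and a real deployment would also need to cover backups, logs, and any downstream systems that received copies.

```python
from collections import defaultdict


class VoiceDataStore:
    """In-memory stand-in for a store of voice records keyed by record ID."""

    def __init__(self):
        self._records = {}                # record_id -> payload
        self._by_user = defaultdict(set)  # user_id -> set of record IDs

    def add(self, record_id, user_id, payload):
        self._records[record_id] = payload
        self._by_user[user_id].add(record_id)

    def records_for(self, user_id):
        """Answer an access request: which records belong to this user?"""
        return sorted(self._by_user.get(user_id, set()))

    def erase_user(self, user_id):
        """Answer a deletion request; returns how many records were removed."""
        record_ids = self._by_user.pop(user_id, set())
        for record_id in record_ids:
            self._records.pop(record_id, None)
        return len(record_ids)
```

Keeping the per-user index alongside the data means both access and deletion requests are a lookup rather than a full scan.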

Healthcare organizations implementing voice AI must consider HIPAA requirements, while financial services companies need to address PCI DSS standards. Each industry brings specific obligations that affect how you can collect, process, and store voice information.

Andriy Lekh
