AI Tech Trends Across Industries

Artificial intelligence has transcended its experimental phase to become the backbone of digital transformation. Large language models are becoming more efficient and specialized. Entirely new paradigms, such as neurosymbolic AI, promise to address the fundamental limitations that have plagued current systems. Meanwhile, the integration of AI into critical sectors is creating unprecedented opportunities for innovation and growth.

Understanding these technological shifts is crucial for those who wish to tap into AI software development and implementation. The trends shaping 2025 reveal fundamental changes in how AI systems operate, learn, and integrate into our daily operations.

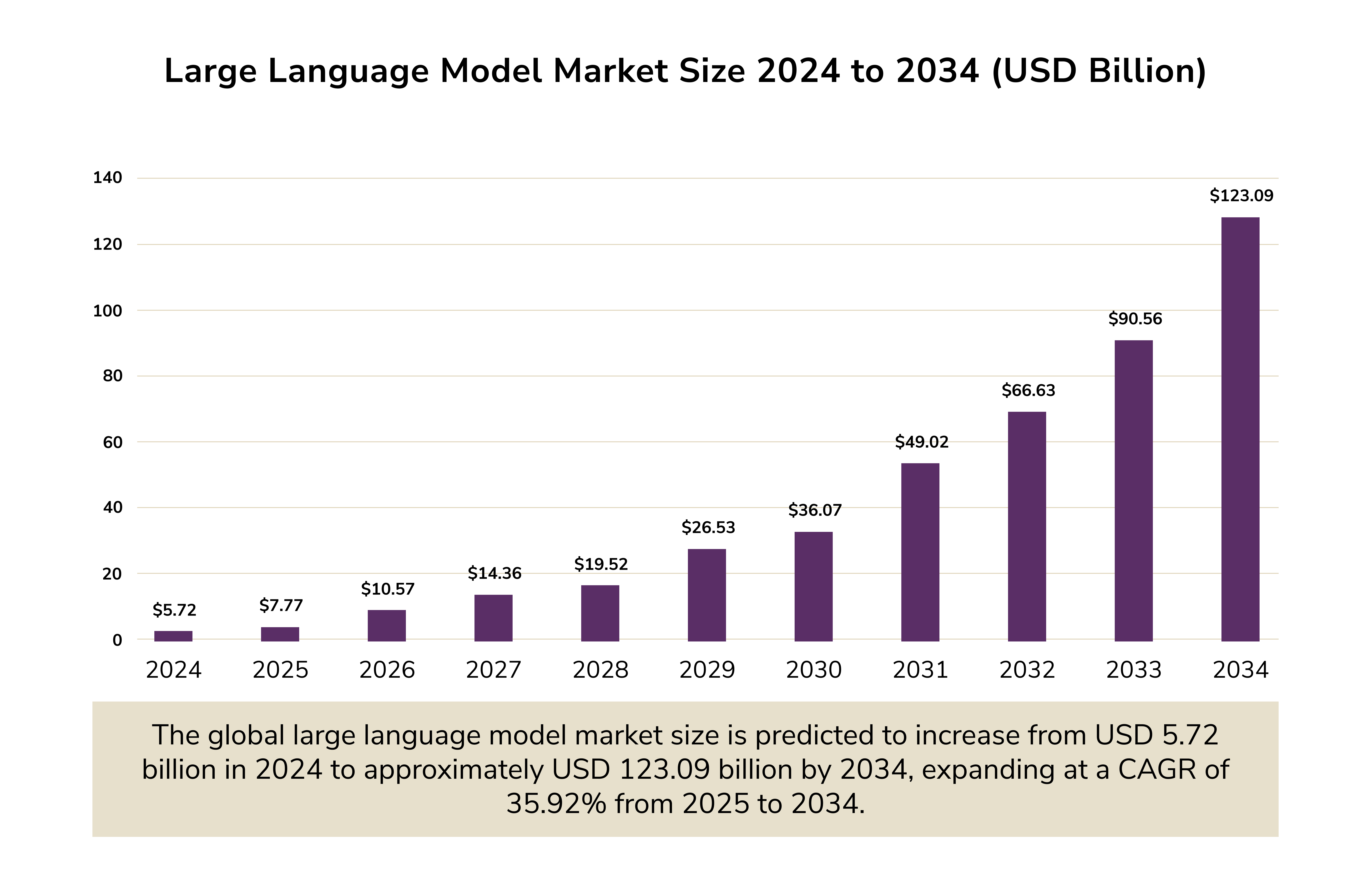

LLMs Evolution

The trajectory of LLM development has reached a critical inflection point. After years of adhering to the "bigger is better" philosophy, the industry is shifting toward specialization. This transformation stems from both practical limitations and economic realities.

The numbers tell a compelling story about this evolution. OpenAI's GPT-4 required approximately $78 million in computational resources to train. Google's Gemini Ultra consumed around $191 million. These astronomical costs represent a dramatic escalation from earlier models. GPT-4 demanded roughly 10,000 times more computational resources than GPT-2, despite being released just four years later.

However, the problem isn't just the rising costs. Many tasks show minimal performance improvements once models exceed certain parameter thresholds. This creates a performance-to-cost imbalance, one of the challenges in generative AI that undermines the "bigger is better" premise of LLM development itself. But there is a solution.

Model Fine-Tuning and Why It Matters

Fine-tuning refines a pre-trained AI model on a smaller, relevant dataset drawn from one specific niche. This process optimizes the model for task-specific performance. For instance, the insurance industry operates with highly specialized terminology, numerous acronyms, and complex regulatory language. General-purpose LLMs lack this specific contextual knowledge. Hence, they may misinterpret or mishandle commonly used terms such as COI (Certificate of Insurance) or admitted/non-admitted policies.

Fine-tuning enables models to learn industry-specific language, allowing for accurate processing and reducing errors. This way, it addresses several critical limitations of general-purpose models.

- Knowledge cutoff: Pre-trained models cannot access new information that arises after their training date. Fine-tuning on more recent, domain-specific data helps narrow that gap.

- Hallucinations: At times, AI models generate incorrect or nonsensical outputs for complex queries. Fine-tuning bridges these knowledge gaps, boosting reliability.

- Bias: Bias in the training data of pre-trained models can lead to skewed results. Fine-tuning with balanced, domain-specific datasets helps address these imbalances.
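
To make this concrete, here is a minimal sketch of how a small fine-tuning dataset for the insurance example might be prepared. It assumes a chat-style JSONL layout (one JSON object per line with a `messages` list), which several fine-tuning services accept; the exact schema depends on the provider. The example pairs, the system prompt, and the `to_jsonl` helper are all hypothetical.

```python
import json

# Hypothetical domain examples: each pair teaches the model one insurance term.
EXAMPLES = [
    ("What does COI stand for?",
     "In insurance, COI stands for Certificate of Insurance."),
    ("What is a non-admitted policy?",
     "A policy written by an insurer that is not licensed by the state's "
     "insurance department."),
]


def to_jsonl(pairs, system_prompt="You are an insurance assistant."):
    """Serialize (question, answer) pairs as one JSON object per line."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_jsonl(EXAMPLES))
```

In practice, such a file would contain hundreds or thousands of reviewed examples rather than two, and its quality matters more than its size.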

The Expansion of Multimodal AI

Multimodal AI represents a fundamental shift in how AI systems process and understand information. Companies integrate text, images, audio, and other data types into unified models. This way, AI systems are developing more human-like comprehension capabilities. The aim is to mirror human natural learning processes.

OpenAI's GPT-4o exemplifies this evolution. The model combines text, vision, and voice processing within a single framework. This makes AI's real-time conversational abilities, such as conversational banking, feel remarkably natural. Users can interact with systems through multiple channels simultaneously.

Anthropic's Claude 3 has pushed multimodal capabilities into enterprise applications. In healthcare settings, the solution can analyze medical imagery alongside patient records. This combination of visual and textual analysis assists in diagnostic processes. This is where the benefits of big data and AI in healthcare show: combined, the two data types offer more than either could independently.

Meanwhile, MIT and Microsoft's Large Language Model for Mixed Reality (LLMR) is making strides in virtual environment creation. Users can describe desired changes in natural language and watch as the system implements them in real-time, mixed-reality environments. This capability bridges the gap between human imagination and digital creation in unprecedented ways.

Explainable AI (XAI) in Ethics and Society

Organizations continue to grapple with the opacity of black-box models. The issue is especially acute when AI is used in decision-making. Black-box models excel at generating accurate predictions but fail to provide interpretable explanations for their outputs. This limitation becomes particularly problematic in the healthcare sector, where AI systems may recommend diagnoses or treatments. Without clear insight into how those recommendations were reached, professionals struggle to validate, trust, and justify them.

Note: the growing use of AI in mental health by users across the world has raised concerns regarding its potentially harmful effects. Low-cost AI therapy chatbots have been promoted as a potential solution to growing mental health needs. However, recent research from Stanford University highlights potential biases and operational shortcomings in these tools, which could pose significant risks.

That is why the focus on Explainable AI (XAI) has intensified. The need for transparency stems from both ethical obligations and practical necessities. In high-stakes applications, AI-driven decisions carry significant consequences, and models trained on biased datasets can perpetuate or amplify existing inequities. Such bias can be corrected, but a lack of transparency makes it difficult to trace its roots.

XAI focuses on creating AI models that offer clear and interpretable explanations for their decision-making processes. These explanations help understand the reasoning behind AI decisions. By doing so, XAI fosters trust, ensures ethical accountability, and supports compliance with legal and regulatory requirements.
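
One simple form of interpretable explanation is a per-feature breakdown of a model's score. The sketch below uses a linear scoring model, where each feature's contribution is just its weight times its value. The weights, feature names, and the `explain` helper are hypothetical and purely illustrative; they are not clinical values, and real XAI tooling for complex models is considerably more involved.

```python
# Hypothetical weights of a linear risk-scoring model (illustrative values only).
WEIGHTS = {"age": 0.03, "blood_pressure": 0.02, "smoker": 1.2}
BIAS = -4.0


def explain(features):
    """Return the score and each feature's contribution (weight * value)."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    # Sort so the most influential features come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    score, ranked = explain({"age": 50, "blood_pressure": 140, "smoker": 1})
    print(f"score = {score:.2f}")
    for name, contribution in ranked:
        print(f"{name}: {contribution:+.2f}")
```

A reviewer can see which inputs pushed the score up or down, which is the kind of traceability a black-box prediction does not offer on its own.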

Foundation Model Transparency Index

The Foundation Model Transparency Index (FMTI) was developed by researchers from Stanford, MIT, and Princeton. It represents a significant advancement in measuring and promoting transparency in AI.

The FMTI evaluates AI models across multiple dimensions, including development processes, functionality, and applications. Recent assessments indicate progress, with the average score rising to 58 out of 100 and the highest-scoring model achieving 85 points. All evaluated companies revealed new information, averaging 16.6 disclosed indicators that were previously not public.

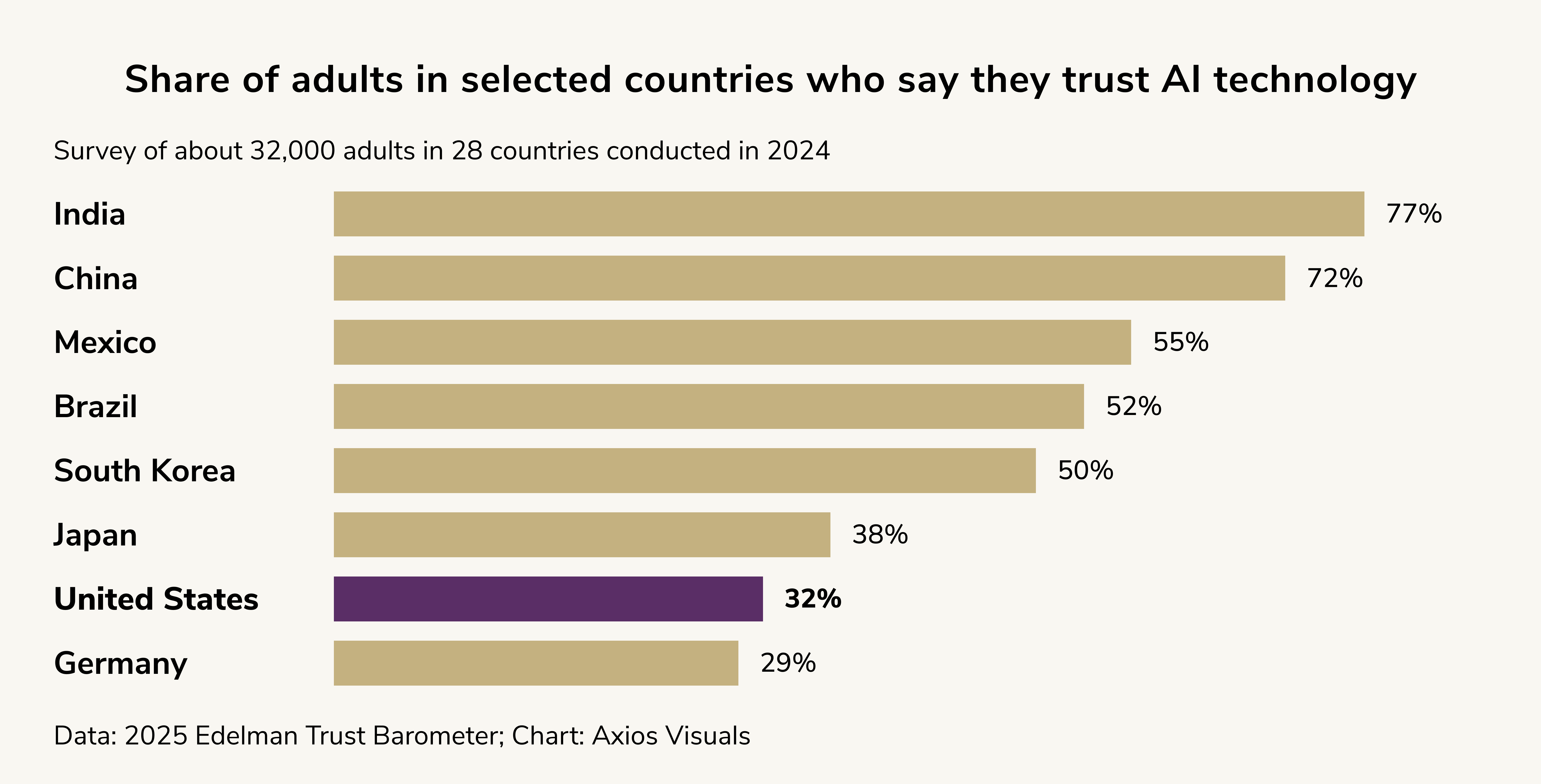

So far, however, trust in AI still has room to grow.

Governance frameworks also support transparency requirements. The National Institute of Standards and Technology (NIST) has introduced an AI Risk Management Framework, while the University of California, Berkeley, offers a Taxonomy of Trustworthiness for AI. These frameworks provide benchmarks for researchers and highlight areas where regulatory intervention may be necessary.

Neurosymbolic AI

Neurosymbolic AI is promising for addressing many issues with LLMs. What problems exactly are we talking about?

- The major challenge with today's LLMs is their persistent struggle with hallucinations. This is a fundamental issue that is unlikely to be resolved through current approaches. The "human-in-the-loop" method (where human intervention helps evaluate and correct AI outputs) has been pushed as a solution. However, this method leaves users vulnerable to misinformation and does little to solve the AI's inherent limitations.

- LLMs' predictive approach is another significant flaw. Their logic stems from probabilities rather than genuine understanding. So errors and inaccuracies are inevitable.

- Another concern is the escalating demand for training data. It has pushed AI developers to rely on synthetic data generated by the LLMs themselves. This in turn amplifies existing biases and errors. Flaws in the original training data shape newer iterations of LLMs.

- Finally, fine-tuning these models after training (post-hoc alignment) is expensive and difficult. The complexity of neural networks makes errors harder to identify and correct.

How Neurosymbolic AI Can Help

Neurosymbolic AI tackles these issues by incorporating formal logic, mathematical principles, and predefined meanings of words and symbols into AI systems. It has the potential to deliver greater transparency in decision-making processes.
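
A common way to picture this combination is "propose and verify": a learned component suggests candidate answers, and a rule-based component accepts only those that pass a formal check. The toy sketch below illustrates the idea with arithmetic. The `neural_propose` function is a hard-coded stand-in for a neural network, and all names are hypothetical; it is not how any particular production system works.

```python
def neural_propose(question):
    """Stand-in for a learned model: returns (answer, confidence) guesses.

    The guesses are hard-coded here; a real system would call a neural network.
    """
    guesses = {
        "17 + 26": [(33, 0.55), (43, 0.40), (42, 0.05)],
        "9 * 8": [(72, 0.90), (81, 0.10)],
    }
    return guesses.get(question, [])


def symbolic_verify(question, answer):
    """Check a candidate against exact arithmetic rules (only + and * here)."""
    left, op, right = question.split()
    a, b = int(left), int(right)
    expected = a + b if op == "+" else a * b
    return answer == expected


def answer(question):
    """Return the most confident candidate that passes verification, else None."""
    candidates = sorted(neural_propose(question), key=lambda g: -g[1])
    for candidate, _confidence in candidates:
        if symbolic_verify(question, candidate):
            return candidate
    return None  # abstain rather than return an unverified guess


if __name__ == "__main__":
    print(answer("17 + 26"))  # the top guess (33) is rejected by the verifier
```

Note that the most confident guess for "17 + 26" is wrong; the symbolic check is what prevents it from being returned, and the system abstains when no candidate can be verified.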

We're already seeing early use cases of neurosymbolic principles in niche areas where rules are well-defined. For instance:

- Google's AlphaFold utilizes neurosymbolic techniques to predict protein structures, thereby accelerating the process of drug discovery.

- AlphaGeometry solves complex geometry problems using similar methods.

- China's DeepSeek takes another step forward by employing "distillation," a method in which a smaller model is trained to reproduce the outputs of a larger one, retaining much of its capability at lower cost.

For AI to advance meaningfully, it must overcome the limitations of deep learning. Systems need to adapt, apply knowledge across tasks, verify their understanding, and reason with precision. Neurosymbolic AI has the potential to satisfy all these requirements. It can also reduce data dependency and amplify transparency.

AI in Cybersecurity

The cybersecurity domain is an ideal example of AI's dual nature. In this field, AI is both a powerful defensive tool and an enabler of sophisticated attacks. AI-powered security solutions are becoming essential for protecting digital assets against complex threats. Meanwhile, cybercriminals leverage AI to enhance their attack capabilities.

By leveraging ML algorithms and NLP, attackers have developed sophisticated malicious strategies, including:

- Hyper-personalized phishing emails. Hackers use AI technology to analyze datasets, such as public profiles. Then, they craft phishing emails tailored specifically to recipients. This level of personalization makes the emails appear more legitimate and increases the likelihood of successful deception.

- Creation of deepfake media. Using advanced AI models, cybercriminals can now generate highly realistic deepfake audio and video content. They can convincingly imitate trusted executives or colleagues, making fraudulent requests more believable and more challenging to identify as scams.

- Automated phishing campaigns. AI can automate phishing campaigns end to end, boosting their efficiency. Attackers can deploy thousands of highly targeted messages within minutes, dramatically increasing the scale and impact of their malicious efforts.

Malware and Phishing Detection

On the other hand, AI-powered cybersecurity systems have proven effective in detecting malware and phishing attempts. AI models achieve detection rates between 80% and 92%, a sizeable leap from the 30% to 60% effectiveness of legacy signature-based systems.

By analyzing email content, context, and patterns, AI can accurately distinguish spam and phishing emails from legitimate communications. Advanced ML algorithms enable systems to adapt and stay ahead of emerging threats, such as sophisticated spear phishing campaigns. Intercepting malicious activity proactively keeps it from compromising corporate networks. This makes AI an invaluable tool for modern cybersecurity.

Note: Researchers at the University of North Dakota developed an ML-based phishing detection technique. It boasts an impressive 94% detection accuracy. This is one of the most inspiring examples of how AI refines threat detection to safeguard sensitive information.

AI-powered email security solutions have transformed phishing detection through several advanced capabilities:

- Utilizing ML algorithms to detect unusual email patterns and behaviors.

- Refining these algorithms to improve their ability to identify phishing attempts as new threats develop.

- Examining email content, sender details, and contextual clues to assess potential risks.

- Accurately predicting phishing attempts by analyzing diverse data points.
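
As a rough illustration of the first and third capabilities, the sketch below trains a tiny multinomial naive Bayes classifier on email text. Naive Bayes is a classic baseline chosen here for brevity; the article does not state which algorithms commercial products use. The training messages and function names are hypothetical, and a real system would learn from a large labeled corpus and many more signals (sender details, links, headers).

```python
import math
from collections import Counter

# Tiny hypothetical training set; real systems learn from many labeled emails.
TRAINING = [
    ("verify your account now urgent password reset", "phishing"),
    ("urgent action required confirm your bank password", "phishing"),
    ("click here to claim your prize now", "phishing"),
    ("meeting agenda for monday attached", "legitimate"),
    ("please review the quarterly report draft", "legitimate"),
    ("lunch on friday with the project team", "legitimate"),
]


def train(samples):
    """Count words per class for a multinomial naive Bayes model."""
    word_counts = {"phishing": Counter(), "legitimate": Counter()}
    class_counts = Counter()
    for text, label in samples:
        class_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = set(word_counts["phishing"]) | set(word_counts["legitimate"])
    return word_counts, class_counts, vocab


def classify(text, model):
    """Return the class with the highest log-probability (Laplace smoothing)."""
    word_counts, class_counts, vocab = model
    total = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        n_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total)
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the probability.
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


if __name__ == "__main__":
    model = train(TRAINING)
    print(classify("urgent confirm your password now", model))
```

Retraining the model as new labeled examples arrive corresponds to the second capability in the list: the classifier adapts as threats change.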

AI-Powered Encryption

AI-powered encryption employs ML algorithms to monitor and adapt to new cyber threats. This adaptability helps keep data secure even as cyber threats evolve in complexity. AI integration also boosts the encryption system's ability to detect anomalies and suspicious activities within a network. This adds another layer of protection against unauthorized access or data breaches.

Ultimately, AI-powered encryption elevates data security measures, making them more agile.

Note: We've noticed an active online debate regarding AI and its potential to break encryption. While AI excels in many areas, breaking encryption remains a formidable challenge. Cryptographic algorithms, such as the AES (Advanced Encryption Standard) cipher and the SHA (Secure Hash Algorithm) family of hash functions, are based on complex mathematical foundations. They are resilient against brute-force attacks and predictive methodologies, including those performed by AI. Encrypted data can be compared to an intricate puzzle: an attacker, AI or otherwise, would need enormous computational power and time to test which candidate solutions are valid. What's more, security algorithms embed unpredictability. This makes it exceptionally difficult for attackers, including AI systems, to decipher them.
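
A back-of-the-envelope calculation shows why brute force is impractical. The sketch below estimates how long an exhaustive search of an AES key space would take on average. The guessing rate of one trillion keys per second is a hypothetical assumption chosen for illustration, not a measured figure.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def brute_force_years(key_bits, guesses_per_second):
    """Expected years to find a key by exhaustive search.

    On average an attacker must try half of the 2**key_bits possible keys.
    """
    keys_to_try = 2 ** key_bits // 2
    return keys_to_try / (guesses_per_second * SECONDS_PER_YEAR)


if __name__ == "__main__":
    rate = 10 ** 12  # hypothetical attacker: one trillion guesses per second
    for bits in (128, 256):
        print(f"AES-{bits}: about {brute_force_years(bits, rate):.1e} years")
```

Even at that rate, the estimate for a 128-bit key runs to billions of billions of years, which is why practical attacks target implementation flaws, weak passwords, or key management rather than the cipher itself.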

Quantum-Resistant Encryption

One of the most pressing concerns for cybersecurity lies in the intersection of AI and quantum computing. When fully developed, quantum computers will have the power to undermine many current encryption methods. They could reduce the time required to break even the most advanced cryptographic defenses. So, the combination of quantum advancements and AI’s capabilities could potentially bypass encryption mechanisms deemed secure today.

To stay ahead of these challenges, researchers are focusing on developing post-quantum encryption techniques. These might help resist attacks from quantum-powered systems. Here, AI also plays a critical role. It helps create the next-generation encryption protocols that are robust enough to withstand future technological advancements.

AI in Energy

The energy industry has a paradoxical relationship with AI. AI systems consume unprecedented amounts of energy and water. At the same time, they offer ways to optimize energy efficiency and reduce environmental impact. This duality requires careful balance: as more and more organizations pursue AI-driven innovations, managing sustainability concerns will become essential.

The resource intensity of AI systems becomes apparent as deployment scales. A single ChatGPT query consumes approximately 2.9 watt-hours of electricity—nearly ten times more than a typical Google search. Microsoft's 2024 sustainability report revealed a 29% increase in emissions and a 23% rise in water consumption, primarily attributed to the deployment of generative AI.

Projections indicate that growth in AI-related power demand could equal 60% to 330% of the growth in US power generation capacity by 2030. AI data centers alone could require an additional 130 GW of capacity, while US power generation is expected to expand by just 30 GW during the same period. This imbalance presents significant challenges for scaling sustainable AI infrastructure.
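
The figures above can be turned into a quick calculation. The sketch below converts the quoted per-query energy into a daily total and computes the gap between the quoted demand and supply growth. The daily query volume is a hypothetical assumption, not a reported number.

```python
WH_PER_CHATGPT_QUERY = 2.9  # watt-hours per query, as quoted above
WH_PER_SEARCH = WH_PER_CHATGPT_QUERY / 10  # "nearly ten times" less, roughly 0.3 Wh


def daily_energy_mwh(queries_per_day, wh_per_query):
    """Convert a daily query volume into megawatt-hours per day."""
    return queries_per_day * wh_per_query / 1_000_000


def capacity_gap_gw(demand_growth_gw, supply_growth_gw):
    """Gigawatts of new demand not covered by new generation capacity."""
    return demand_growth_gw - supply_growth_gw


if __name__ == "__main__":
    queries = 100_000_000  # hypothetical daily volume, not a reported figure
    print(daily_energy_mwh(queries, WH_PER_CHATGPT_QUERY), "MWh per day for AI queries")
    print(daily_energy_mwh(queries, WH_PER_SEARCH), "MWh per day for the same number of searches")
    print(capacity_gap_gw(130, 30), "GW of demand growth beyond planned supply growth")
```

Under these assumptions, the same number of requests costs roughly ten times more energy when served by a generative model, and the projected demand from AI data centers exceeds planned capacity growth by 100 GW.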

Efficient AI Architectures

The growing demand for AI systems is not just about increasing energy availability but also about enhancing the efficiency of computing architectures. For decades, traditional computing has relied on the von Neumann architecture, in which memory and processing units are separate. This results in constant data transfer between the two components, creating what's known as the "von Neumann bottleneck." This inefficiency consumes a significant amount of time and energy, and it limits how far systems can scale to meet the increasing demands of AI.

Rethinking how system components interact, through "simultaneous and heterogeneous multithreading" (SHMT), might be precisely what the industry needs. Traditional architectures often struggle with bottlenecks caused by data transfers between processing units, such as GPUs and AI accelerators. SHMT addresses this problem by enabling parallel processing across different hardware platforms. For instance, a system integrating ARM processors, Nvidia GPUs, and Tensor Processing Units demonstrated nearly double the performance while cutting energy use in half.

Beyond SHMT, neuromorphic computing offers a groundbreaking solution inspired by the human brain. Neuromorphic chips, such as Intel's Loihi, mimic the brain's structure and excel at parallel processing. These chips enable simultaneous task execution and can achieve energy efficiencies up to 1,000 times greater than conventional processors for specific tasks.

Another exciting direction is optical neural networks (ONNs), which use light instead of electrons for computations. This allows them to drastically reduce energy consumption while boosting performance. MIT's HITOP optical technology, for example, can process ML models 25,000 times larger than previous networks, while using 1,000 times less energy.

Together, these advancements represent a shift toward efficient computing architectures that can scale in response to the immense demands of AI.

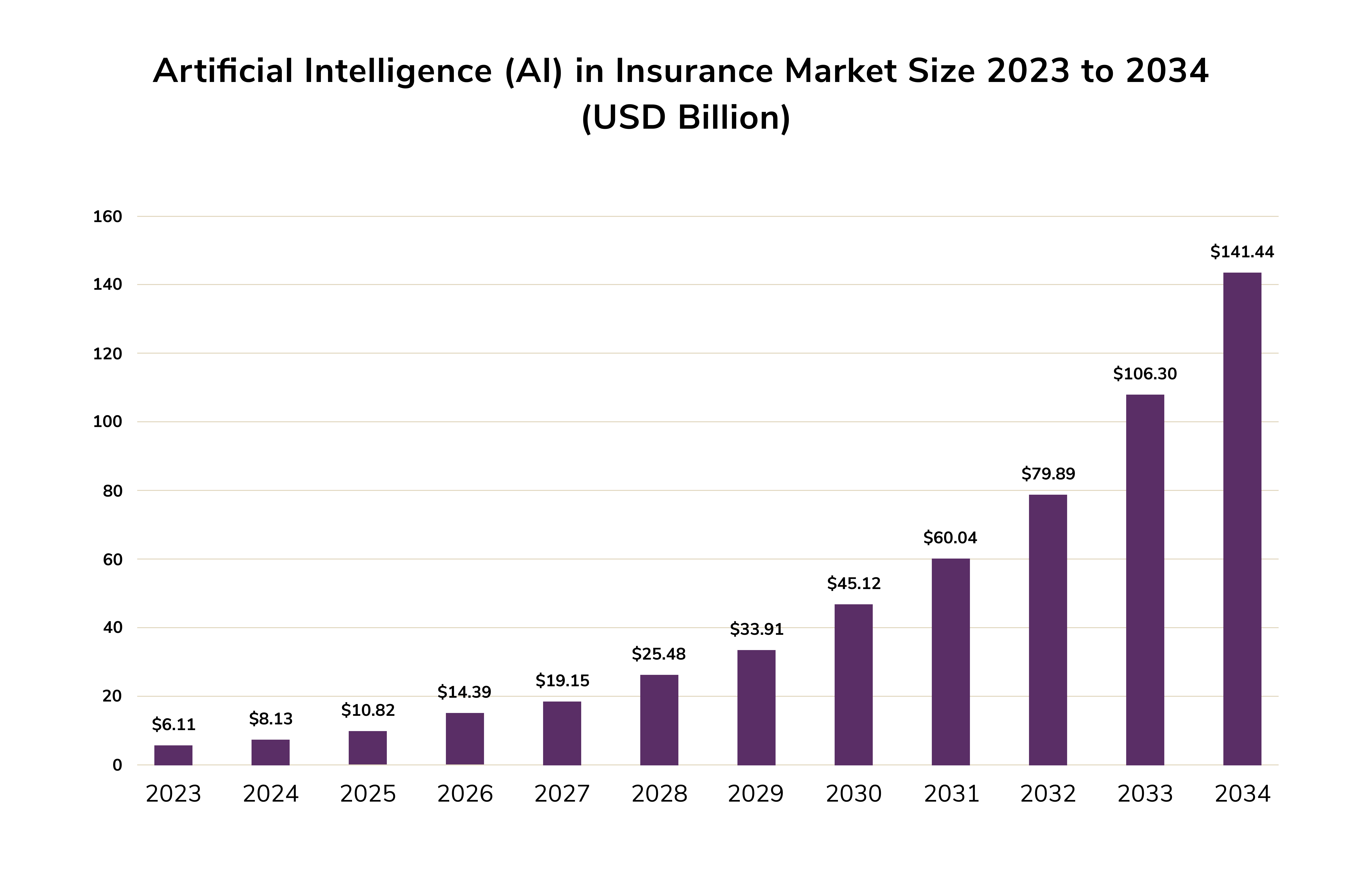

AI in Insurance

Like other areas of financial services, the insurance industry relies heavily on data. Historically, the domain has utilized algorithms extensively for underwriting processes. However, AI takes these capabilities to a new level, enabling faster and more scalable analysis.

Predicting Workplace Injuries

Workplace safety represents a significant area of application for AI in insurance. JE Dunn has partnered with AI-powered platform Newmetrix to deploy proactive safety systems. This helped them prevent incidents among their 3,500 employees. Amazon is implementing ML and computer vision technologies in warehouses to issue real-time safety alerts. This measure resulted in fewer injuries and contributed to a 12% growth in net sales. Siemens has integrated AI-led safety protocols. The company achieved a 30% decline in workplace injuries over two years.

AI-Powered Fire Prevention

AI-powered fire detection systems excel in speed, accuracy, and scalability compared to traditional methods. For example, convolutional neural networks (CNNs) achieved an impressive 95% accuracy rate in detecting fire from visual data. Also, smart building solutions utilize AI algorithms to detect anomalies signaling potential fire risks. This helps reduce response times to fire hazards by up to 40% through efficient analysis of environmental data.
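
The anomaly-detection idea can be sketched with a simple statistical baseline: flag any sensor reading that rises far above the recent average. This rolling z-score check is a much simpler stand-in for the learned models described above, and the window size, thresholds, and sample temperatures are hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0, min_sigma=0.5):
    """Flag readings that rise sharply above the preceding window.

    min_sigma keeps a very flat window from turning sensor noise into alerts.
    """
    anomalies = []
    for i in range(window, len(readings)):
        recent = readings[i - window:i]
        mu = mean(recent)
        sigma = max(stdev(recent), min_sigma)
        z = (readings[i] - mu) / sigma
        if z > threshold:
            anomalies.append((i, readings[i]))
    return anomalies


if __name__ == "__main__":
    # Hypothetical room temperatures in degrees Celsius with one sudden spike.
    temperatures = [21.0, 21.2, 20.9, 21.1, 21.0, 21.1, 35.4, 21.0]
    print(find_anomalies(temperatures))
```

Only upward deviations are flagged here, since a sudden temperature rise is the signal of interest; production systems typically combine several sensor types and learned models rather than a single threshold.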

The integration of thermal imaging with AI algorithms further enhances early detection, even in limited visibility environments. Predictive models for wildfire management have also been instrumental. Machine learning algorithms that incorporate spatial and temporal factors allow authorities to predict fire spread with high precision.

Following the January 2025 wildfires in Los Angeles, the collaboration between fire departments and researchers has accelerated to enhance AI-driven wildfire detection systems. These cutting-edge systems integrate satellite imagery, drones, and smart sensors to deliver early warnings and real-time data. One notable initiative is ALERTCalifornia, spearheaded by UC San Diego. It operates over 1,100 cameras and sensors to provide 24/7 monitoring of high-risk fire zones. In December 2024, it promptly identified a fire in Black Star Canyon at 2 a.m., enabling firefighters to limit its spread to less than a quarter of an acre.

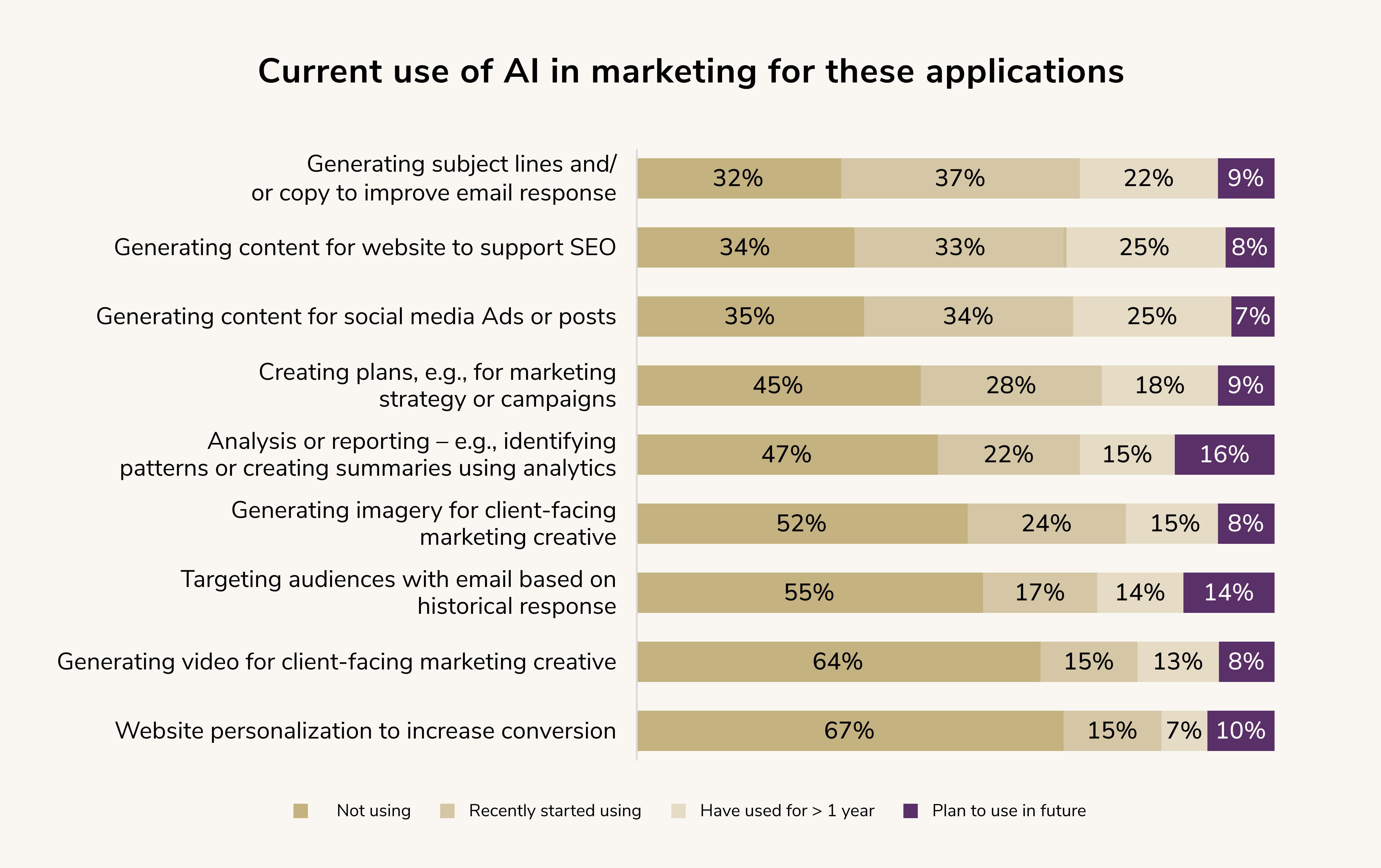

AI in Marketing

Modern marketing faces an unprecedented challenge: creating authentic connections with diverse consumer bases spanning multiple languages, cultures, and socioeconomic backgrounds. But crafting authentic communication at scale for such a broad audience is no small task.

For years, many businesses have turned to personalization as a solution. And it's what consumers increasingly demand. Research from McKinsey highlights that 71% of customers expect personalized interactions, and 76% become frustrated when those expectations aren't met. When executed well, personalization can generate incredible value.

Generative AI Enables Scalable Content Creation

However, many companies rely on fragmented and standalone tactics to engage their customers. To truly meet consumers where and how they want to be reached, marketers can adopt two key innovations:

- AI-driven targeted promotions.

- GenAI to create and scale highly relevant, personalized content at unprecedented speed and volume.

These innovative approaches pave the way for growth. Advanced analytics models allow brands and retailers to deliver valuable, targeted offers to micro-communities, wherever they choose to engage. At the same time, genAI empowers marketers to craft bespoke content that resonates deeply with these audiences.

To unlock the full potential of AI tools, companies should prioritize enhancing their marketing technology infrastructure. A robust system built on strong data, decision-making processes, design, distribution, and performance measurement is critical.

Deep Personalization

Historically, tailoring creative content to smaller subsets of consumers was too resource-intensive to be practical. Generative AI changes that by providing scalable, cost-efficient content creation for niche audiences. Seamlessly integrating solutions that align people, processes, and platforms can help marketers create rigorous, high-performing content.

Although today's content creation process involves heavy manual effort, genAI can streamline and magnify productivity for everyone involved. The more material marketers feed into a comprehensive content data model, the better AI can refine and generate tailored content. However, it's crucial to establish robust governance frameworks. These should ensure content adheres to enterprise-wide standards and safeguards against bias or harmful outputs.

AI-Powered Decision-Making Platforms

Organizations can choose between developing internal AI agents using tools like Botpress, Microsoft's AutoGen, or LangChain, or implementing pre-built platforms designed for specific marketing outcomes. Three notable platforms demonstrate different approaches to AI-driven marketing automation:

- Hightouch AI Decisioning focuses specifically on marketing outcomes. It helps drive engagement, revenue growth, and customer lifetime value. The platform employs ML algorithms to recommend data-driven decisions that optimize marketing performance across campaigns.

- UnifyGTM specializes in sales outreach automation. It handles repetitive tasks such as prospecting, lead identification, workflow management, and personalized message creation. This approach allows sales teams to focus on relationship building while AI manages routine communications.

- Cognigy enhances customer service through automated interactions across chat and voice channels. By integrating generative AI, the platform enables empathetic conversations. It produces hyper-realistic voice responses that improve customer satisfaction.

Sephora exemplifies successful AI implementation in retail marketing. Their Virtual Artist app utilizes augmented reality and AI to enable customers to virtually try thousands of makeup products. Meanwhile, their AI-driven recommendation engine analyzes customer preferences, purchase history, and search behaviors. This comprehensive approach helps suggest products that match individual beauty needs.
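
To show the general idea behind such a recommendation engine, here is a minimal content-based sketch that ranks products by how well their tags overlap with a customer's preferences. It is not Sephora's method; the catalog, tags, and function names are hypothetical, and production systems typically combine many more signals.

```python
# Hypothetical catalog: product name -> descriptive tags.
CATALOG = {
    "matte lipstick": {"lips", "matte", "long-wear"},
    "hydrating foundation": {"face", "hydrating", "dry-skin"},
    "tinted lip balm": {"lips", "hydrating", "sheer"},
}


def jaccard(a, b):
    """Overlap between two tag sets, from 0 (disjoint) to 1 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(profile_tags, catalog, top_n=1):
    """Rank products by tag overlap with the customer's profile."""
    ranked = sorted(
        catalog,
        key=lambda name: jaccard(profile_tags, catalog[name]),
        reverse=True,
    )
    return ranked[:top_n]


if __name__ == "__main__":
    # Tags a customer's purchase history and searches might suggest.
    print(recommend({"lips", "hydrating"}, CATALOG))
```

The profile tags stand in for what an engine would infer from preferences, purchase history, and search behavior.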

AI in Healthcare

The introduction of ChatGPT highlighted both AI's potential and limitations in healthcare applications. General-purpose AI models treat all information sources equally, whether from social media posts or peer-reviewed medical journals (see AI in EHRs). This fundamental flaw drove the development of healthcare-specific language models trained exclusively on medical data.

Medical-Specific AI Systems

Google's Med-PaLM 2, introduced in March 2023, represents a significant advancement in medical AI. It leverages carefully curated medical datasets and specializes in clinical decision-making, diagnosis support, and medical question answering. This targeted training helps ensure responses derive from reliable medical knowledge rather than general internet content.

Google has also developed HeAR, an AI model focused on analyzing medical sounds. Built using 300 million audio samples, including 100 million recorded coughs, HeAR identifies conditions like tuberculosis through audio diagnostics. This capability extends AI's diagnostic potential beyond traditional visual and text-based analysis.

In radiology, Radiology-Llama2 leverages Meta's open-source framework while specializing in medical imaging interpretation. This model offers AI-powered diagnostic assistance based on radiological images, potentially improving accuracy and reducing interpretation time.

Innovative AI Architectures

Hippocratic AI's Polaris system demonstrates how innovative architectures can enhance patient communication. It uses a "constellation" approach in which multiple AI models, totaling roughly a trillion parameters, work together. Polaris learns from high-quality medical literature and simulated practitioner-patient dialogues. This design enables accurate and empathetic patient communication that maintains clinical precision while improving bedside manner.

PicnicHealth's LLMD model takes a different approach by training on millions of real-world patient records across various healthcare locations. Unlike traditional medical models focused on terminology or theoretical knowledge, LLMD identifies complex patterns in actual patient care. The system understands how medications are prescribed over time and how treatments interconnect across multiple hospitals and years.

Despite using only 8 billion parameters—relatively small compared to other AI models—LLMD outperforms larger systems by excelling in real-world care dynamics. This success demonstrates that practical healthcare AI effectiveness depends more on understanding care patterns than memorizing medical textbooks.

Wearable AI

One of the most promising areas for wearable AI is healthcare. Devices like the Apple Watch Series 8 utilize AI to monitor irregular heart rhythms and assess sleep patterns. Fitbit's Sense smartwatch tracks health metrics and delivers tailored wellness insights.

The most widely used AI wearables in healthcare are listed in the image below.

Wearable AI technology is advancing quickly, but not without challenges. For instance, Humane introduced the AI Pin in April 2024, a screen-free device designed to clip onto clothing, project displays onto the user's hand, and respond to voice commands. Despite innovative features like object recognition and cellular connectivity, the device was criticized for lacking practical usability. This product mirrors earlier attempts to revolutionize human-computer interaction in wearable form.

One such attempt was Microsoft's Skinput, introduced back in 2010. It projected interfaces onto the skin and used vibrations to detect touch input. While groundbreaking from a technical perspective, it ultimately failed to gain widespread traction. Today, companies are primarily finding success by integrating AI technologies into familiar devices, rather than inventing entirely new ones. A notable example is Iyo, which has created AI-driven earbuds combining the functionality of Bluetooth headphones with advanced features like real-time translation and personalized workout coaching.

Note: While interest in AI-powered wearables is evident, no product has yet fully replaced the smartphone. Instead, the most successful wearable devices are those that enhance existing technologies rather than attempting to replace them outright. The future of wearable AI likely lies in complementing and augmenting current devices, offering added functionality without entirely disrupting established systems.

We live in a truly remarkable time, where the number of successful AI use cases across various industries continues to grow daily. Impressit works hard to keep you informed. Check out our blog for more valuable insights.

Roman Zomko
