How AI for Mental Health Works

Would you entrust AI with your life problems? Would you follow its advice on addressing them? We ask because AI therapists have become remarkably popular, which is hardly surprising given what an hour-long session with a qualified therapist costs today.

Over the last few years, the pandemic has transformed telemedicine. Digital therapy, once a niche option, became a mainstream solution practically overnight, and video therapy sessions became a crucial support system for millions struggling with anxiety, isolation, and grief. In the United States, for example, virtual mental health services skyrocketed from less than 1% of outpatient visits before the pandemic to over 40% at their peak. Even now, tele-mental health accounts for approximately 36% of outpatient mental health care in the U.S.

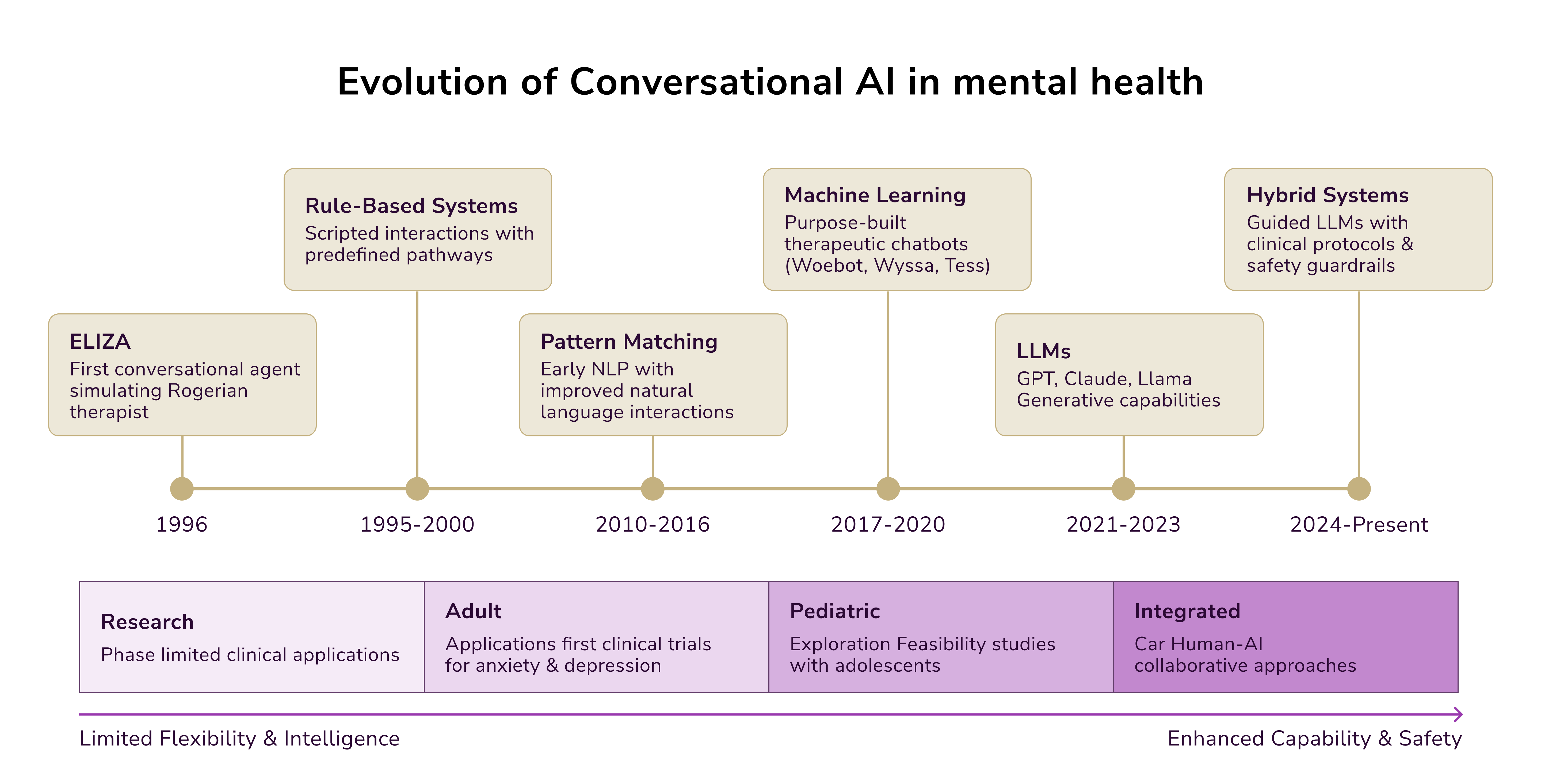

Artificial intelligence and machine learning are pushing these changes further. But does it work, and where should we draw the line between therapy led by humans and therapy supported by machines?

What Drives the Need for AI in Mental Health

Mental health issues are a leading concern worldwide, impacting individuals and communities alike. Before the COVID-19 pandemic, an estimated one billion people suffered from mental health or substance abuse disorders globally. The pandemic exacerbated this crisis, with rates of anxiety and depression increasing by 25%-27%.

A significant driver for the adoption of AI in mental health is the global shortage of qualified mental health professionals. According to WHO's Mental Health Atlas, there are only 13 mental health workers per 100,000 people globally. This issue is even more pronounced in low-income countries, where resources are scarce compared to high-income regions. For example, the availability of mental health workers in high-income countries is up to 40 times higher than in low-income nations.

Consequently, approximately 85% of individuals with mental illnesses, particularly in low- and middle-income countries, do not receive treatment. These stark disparities highlight the urgent need for scalable, technology-based solutions to make care accessible to everyone, regardless of location or income level.

How AI for Mental Health Works

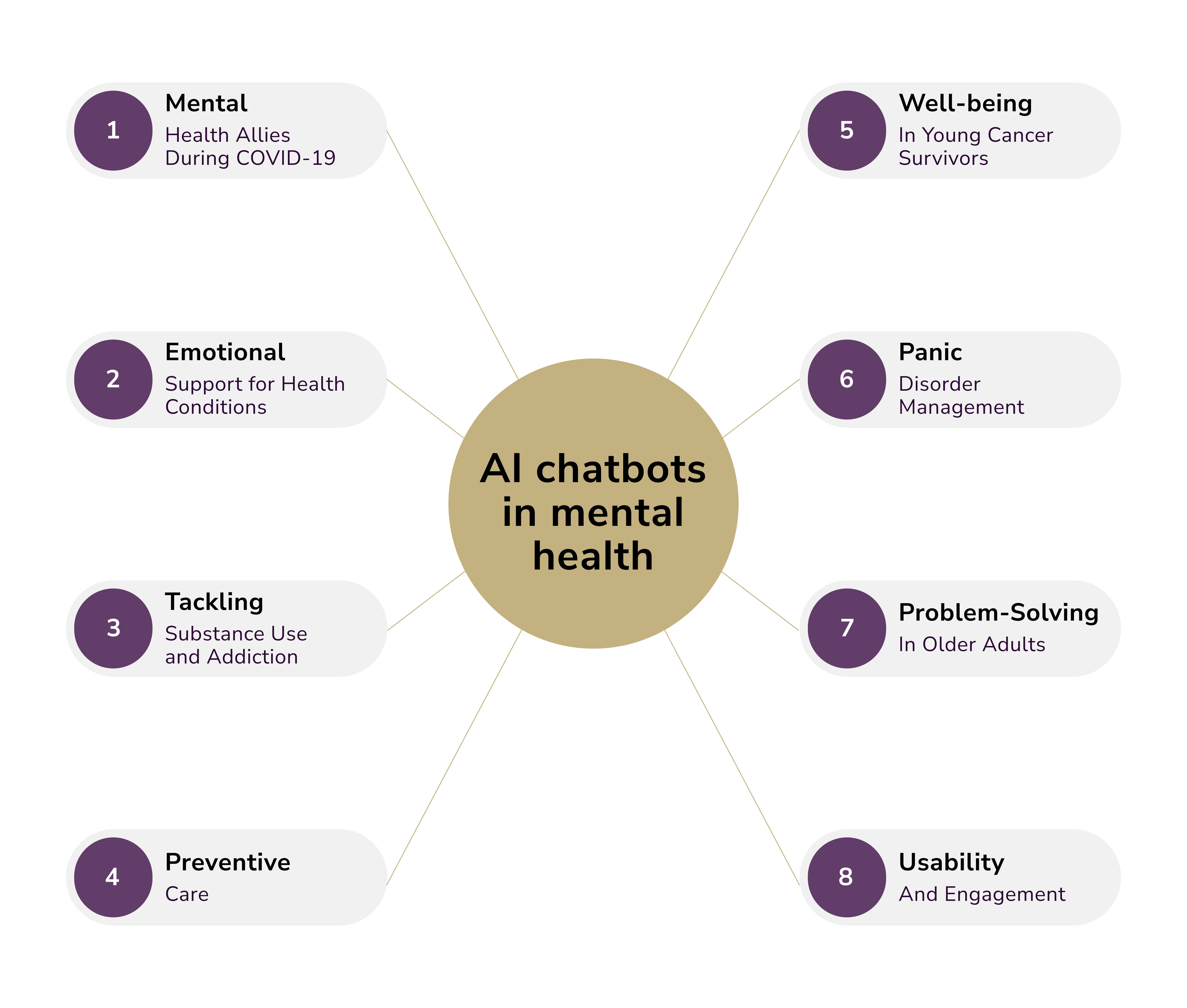

Early disease detection and diagnostics have become one of the major AI tech trends in 2025. The role of AI in promoting positive mental health is diverse and expanding, mostly in the following forms:

- Healthcare software and platforms built around chatbots and virtual assistants. These provide immediate support during challenging times, offer conversational companionship, and can even flag potential mental health concerns.

- Machine learning algorithms that enable personalized therapeutic interventions tailored to individual needs, using real-time data collected through wearable devices to support more informed clinical decision-making.

- AI-driven tools for clinical practice and wellness applications that deliver mindfulness exercises and tailored recommendations for overall mental well-being.

Below, we explain which AI technologies have the potential to support mental well-being both within clinical settings and beyond.

Machine Learning

One significant use of Machine Learning (ML) in mental health is analyzing patient data, such as electronic health records and behavioral patterns, to aid in diagnosing conditions like depression, anxiety, or schizophrenia. ML algorithms also predict the risk of developing mental health disorders based on historical data and help design tailored treatment programs, recommending specific therapies or medications based on individual patient data and response patterns. More broadly, because AI reasoning aims to simulate human-like decision-making, it can aid in the early detection of mental health issues, personalize therapy plans, and provide real-time support through chatbots and virtual therapists.

Supervised vs. Unsupervised ML

Supervised Machine Learning (SML) relies on pre-labeled data: the system learns to map input features to the correct label, such as distinguishing between major depressive disorder (MDD) and its absence. The algorithm uses extensive labeled training data to identify patterns and connections between various sociodemographic, biological, and clinical measures. Once trained, it is evaluated on held-out test data it has not seen before, and its predictions are compared against the true labels to verify that it classifies accurately based on the learned patterns. Labels can be either categorical (e.g., MDD vs. no MDD) or continuous (e.g., varying severity of depression).
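
To make the train-then-test workflow concrete, here is a minimal sketch in Python. It uses a nearest-centroid classifier, one of the simplest supervised methods, chosen purely for illustration. The two features (a questionnaire score and average nightly sleep hours) and every data point are made up, and nothing here reflects a validated clinical model.

```python
"""Toy supervised classifier: nearest centroid on labeled examples.

All features, values, and labels are synthetic and hypothetical; this shows
the train/test workflow only, not a clinical model.
"""
from math import dist

# Each example: ((questionnaire_score, avg_sleep_hours), label); 1 = MDD, 0 = no MDD
train = [
    ((18.0, 5.0), 1), ((21.0, 4.5), 1), ((16.0, 5.5), 1),
    ((4.0, 7.5), 0), ((6.0, 8.0), 0), ((3.0, 7.0), 0),
]
# Held-out test set: labels are never used for fitting, only to score predictions
test = [((19.0, 5.2), 1), ((5.0, 7.8), 0), ((15.0, 6.0), 1), ((7.0, 7.2), 0)]


def fit_centroids(examples):
    """Learn one mean feature vector (centroid) per label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))


centroids = fit_centroids(train)
predictions = [predict(centroids, x) for x, _ in test]
accuracy = sum(p == y for p, (_, y) in zip(predictions, test)) / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

Because the two features sit on different scales, a real pipeline would normally standardize them first; the toy groups here are separated widely enough that it does not change the result.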

Conversely, Unsupervised Machine Learning (UML) does not use predefined labels. Instead, it identifies similarities between input features and detects inherent patterns within datasets. UML is especially useful for exploring complex datasets without the bias of pre-existing categories. For instance, applying UML to neuroimaging datasets could uncover previously unknown subtypes of conditions like schizophrenia, aiding in diagnosis and treatment planning. By clustering biomarkers, UML can also support prognosis and help refine therapeutic interventions.
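
A minimal k-means sketch illustrates the unsupervised idea: no labels are supplied, and the algorithm groups records purely by similarity. k-means is just one common clustering technique, picked here for brevity. The two "biomarker" features and their values are hypothetical, and the farthest-first seeding is a simplification chosen to keep the example deterministic.

```python
from math import dist

# Hypothetical, unlabeled records: (biomarker_1, biomarker_2) per patient
records = [
    (1.0, 1.2), (0.8, 0.9), (1.1, 1.0),
    (5.0, 5.1), (5.2, 4.8), (4.9, 5.0),
]


def init_centroids(points, k):
    """Farthest-first seeding: start from the first point, then repeatedly
    add the point farthest from all seeds chosen so far (deterministic)."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist(p, c) for c in centroids)))
    return centroids


def nearest(point, centroids):
    """Index of the closest centroid."""
    return min(range(len(centroids)), key=lambda i: dist(point, centroids[i]))


def kmeans(points, k, iterations=20):
    centroids = init_centroids(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its assigned points (keep it if empty)
        updated = [
            tuple(sum(axis) / len(c) for axis in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if updated == centroids:
            break  # assignments have stabilized
        centroids = updated
    return centroids, [nearest(p, centroids) for p in points]


centroids, labels = kmeans(records, k=2)
print(labels)
```

The resulting cluster indices carry no clinical meaning by themselves; interpreting a cluster as a possible subtype would require expert review and external validation.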

Deep Learning and Artificial Neural Networks

Deep Learning (DL) employs Artificial Neural Networks (ANNs), whose design is loosely inspired by the structure and function of the human brain. DL processes complex, raw data through multiple layers of interconnected nodes to model sophisticated patterns. This capability is especially valuable in mental health applications such as analyzing brain imaging (e.g., MRI and CT scans) to detect structural abnormalities associated with mental disorders.
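
The "layers of interconnected nodes" idea can be sketched as a forward pass through a tiny fully connected network. This is an assumption-heavy illustration: the three input features and all weights are invented and untrained, whereas real imaging models typically use convolutional layers with far more parameters learned from data.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every output node sees every input node."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, untrained weights: 3 inputs -> 2 hidden nodes -> 1 output node
HIDDEN_W = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.2, -0.7]]
OUTPUT_B = [0.05]


def forward(features):
    """Pass three (hypothetical) image-derived features through both layers."""
    hidden = dense(features, HIDDEN_W, HIDDEN_B, relu)
    (output,) = dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)
    return output  # a score between 0 and 1


print(forward([1.0, 0.5, 0.2]))
```

Training would adjust the weights from labeled examples (typically by backpropagation); the sketch shows only how data flows through the layers.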

Natural Language Processing (NLP)

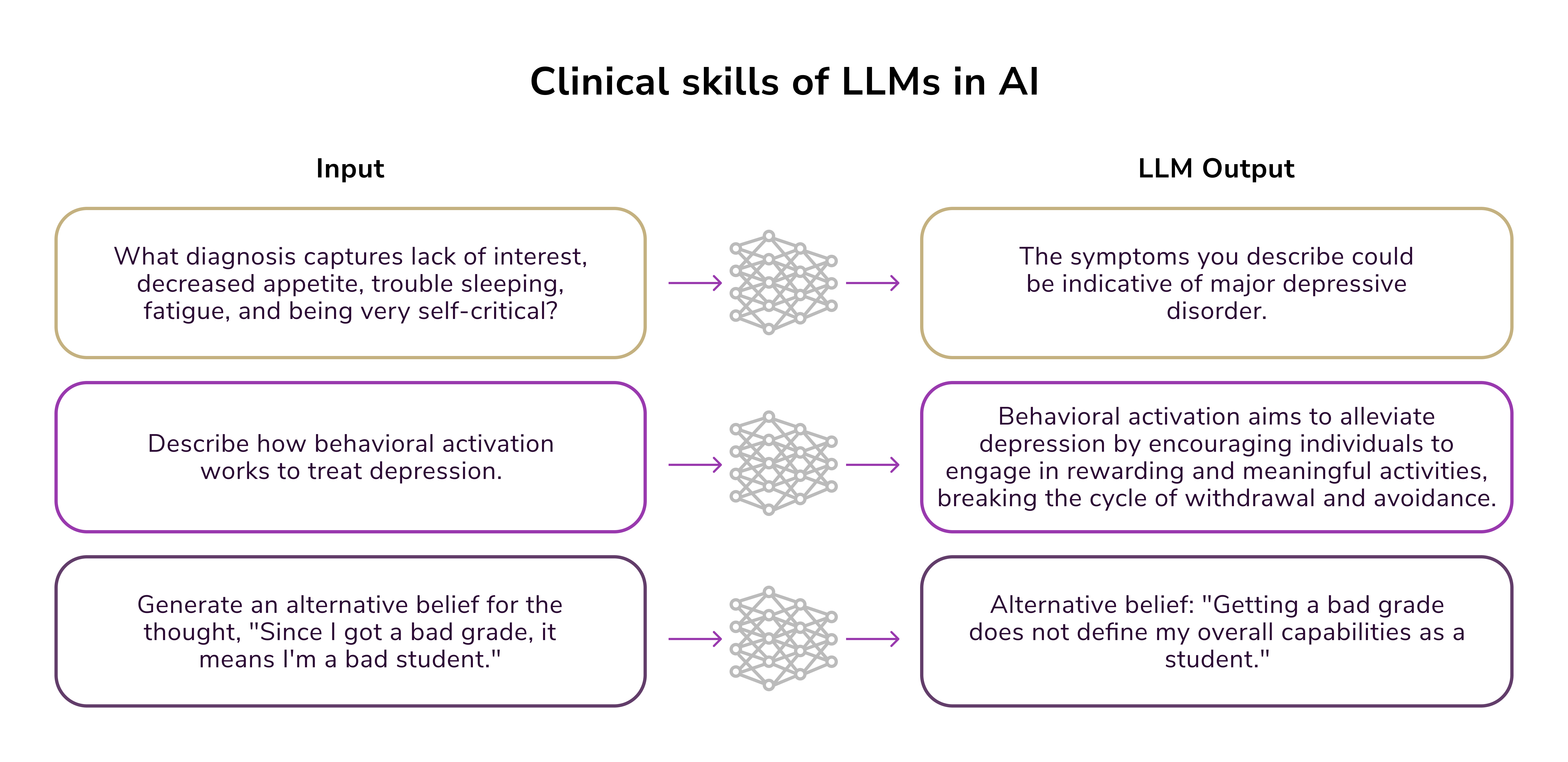

Natural Language Processing (NLP) allows machines to interpret, analyze, and generate human language. Within mental health care, NLP tools can identify emotional states by analyzing patient language through chat, text, or speech. Clinicians can use these insights to monitor mental well-being over time. Additionally, AI chatbots leverage NLP to engage users in real-time conversations, offering coping strategies and directing them to professional help when necessary.
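
A deliberately simple, lexicon-based sketch of this idea follows: each message gets a mood score, scores are tracked across check-ins, and certain phrases trigger a hand-off to a human professional. The word lists and escalation phrases are hypothetical placeholders; production NLP tools typically rely on trained language models rather than fixed keyword lists.

```python
import re

# Hypothetical mini-lexicons (illustration only)
NEGATIVE = {"hopeless", "anxious", "tired", "alone", "worthless", "sad"}
POSITIVE = {"calm", "better", "hopeful", "rested", "happy", "grateful"}
# Hypothetical phrases that should always route the user to a human professional
ESCALATE = ("hurt myself", "end it all", "no reason to live")


def score_message(text):
    """Return (mood_score, needs_escalation) for one message.

    mood_score = (#positive words - #negative words) / #words, in [-1, 1].
    """
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)
    if not words:
        return 0.0, False
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    escalate = any(phrase in lowered for phrase in ESCALATE)
    return (pos - neg) / len(words), escalate


def mood_trend(messages):
    """Mood scores for a sequence of messages, e.g. daily check-ins."""
    return [score_message(m)[0] for m in messages]


checkins = [
    "I feel anxious and alone today",
    "A bit tired but more hopeful",
    "Calm and rested, feeling better",
]
print(mood_trend(checkins))
print(score_message("Some days I think there is no reason to live"))
```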

Reinforcement Learning and Adaptive Interventions

Reinforcement Learning (RL) trains an agent to make sequential decisions through trial and error. RL frameworks can build dynamic adjustments into therapeutic tools, such as virtual reality-based exposure therapy, tailoring treatment intensity in real time based on patient responses. Because the algorithm continuously refines its strategy based on feedback, treatments can stay both effective and patient-centered.
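
Full RL handles long sequences of states and actions, but its trial-and-error core can be sketched with a multi-armed bandit, the simplest RL setting. In this sketch an epsilon-greedy agent picks one of three hypothetical exposure-intensity levels per session and learns from simulated feedback. The patient-response model (moderate intensity helping most) is entirely invented for illustration.

```python
import random

LEVELS = ["low", "moderate", "high"]  # hypothetical exposure-intensity settings


def simulated_response(level, rng):
    """Hypothetical patient model: moderate intensity helps most, plus noise."""
    mean = {"low": 0.3, "moderate": 0.7, "high": 0.4}[level]
    return mean + rng.gauss(0.0, 0.1)


def epsilon_greedy(steps=2000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    counts = [0] * len(LEVELS)
    values = [0.0] * len(LEVELS)  # running mean reward per level
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(LEVELS))  # explore a random level
        else:
            arm = max(range(len(LEVELS)), key=lambda i: values[i])  # exploit best guess
        reward = simulated_response(LEVELS[arm], rng)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean update
    return values, counts


values, counts = epsilon_greedy()
best = LEVELS[max(range(len(LEVELS)), key=lambda i: values[i])]
print(best, [round(v, 2) for v in values], counts)
```

The `epsilon` parameter sets how often the agent tries a level other than its current best guess; a clinical system would also need hard limits on which choices the agent is allowed to make.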

Computer Vision

Computer vision focuses on interpreting and processing visual data, which has a range of mental health applications. For example, analyzing facial expressions and gestures using computer vision algorithms can help infer emotional states and assess well-being. It also aids in detecting conditions like autism spectrum disorder (ASD) by examining visual and behavioral patterns to support early diagnosis and intervention.

Practical Application of AI in Mental Health

Now, let's see how AI can help improve mental healthcare services.

Analyzing Patient Data to Identify Mental Health Risks and Disorders

Modern AI systems analyze various types of data, including electronic health records, blood tests, brain scans, questionnaires, voice recordings, behavioral cues, and even insights gathered from social media activity. Data scientists leverage advanced techniques such as supervised machine learning, deep learning, and natural language processing (NLP) to dissect this information. These tools help identify physical and mental states such as pain, stress, boredom, mind-wandering, or suicidal ideation, often linked to specific mental health disorders.

A recent analysis by researchers from IBM and the University of California reviewed 28 studies focused on AI in mental health. Their findings reveal that, depending on the AI model and the quality of its training data, algorithms can detect an array of mental health conditions with an accuracy rate ranging from 63% to 92%.

One notable example is the tool developed under the Detection and Computational Analysis of Psychological Signals (DCAPS) project. This system combines machine learning, computer vision, and NLP to analyze language, physical gestures, and social cues, looking for signs of psychological distress. It has been specifically designed to assess soldiers returning from combat, enabling early identification of those who might need further mental health support.

Utilizing AI for Self-Assessment and Therapy Sessions

This category of AI in mental health is led by chatbots built on NLP and generative AI technologies. These tools provide personalized advice, track user responses, evaluate mental health progression, and help manage symptoms. They can operate autonomously or alongside a certified psychiatrist who is available for live interaction.

Some of the most prominent AI-powered virtual therapists include Woebot, Replika, Wysa, Ellie, Elomia, and Tess.

- Tess uses artificial intelligence to deliver highly tailored therapy based on cognitive behavioral therapy (CBT) principles, psychoeducation, and clinically validated methods. Users interact with Tess through text-based conversations, and it relies on natural language processing to understand their emotions. One study of Tess found significant reductions in mental health symptoms among students who engaged with the chatbot daily over two weeks, compared to those who interacted with it less frequently.

- Ellie is a more advanced AI agent whose capabilities go beyond conversation. Ellie interprets non-verbal cues, such as facial expressions, posture, and gestures, allowing it to assess emotional states and tailor its responses to help alleviate stress and anxiety.

AI isn't limited to conversational therapy; it also plays a critical role in mental health monitoring. Integrating AI with wearable devices enhances the ability to track physical and mental well-being. For instance:

- BioBase is an AI-powered mental health app that processes data from wearables measuring metrics like heart rate, blood pressure, and oxygen levels. Designed to combat workplace burnout, the app reportedly reduces sick days by as much as 31%, providing tangible benefits for businesses and employees alike.

Personalized Treatment Plans Through AI

ML algorithms analyze patient information such as genetic data, biomarkers, medical history, activity levels, lifestyle habits, and previous treatment outcomes. This analysis allows AI to recommend interventions tailored to each individual's mental health needs, with the goal of making care more effective.

Customized Treatments for Children with Schizophrenia

A research team at the University of California demonstrated the potential of AI to personalize treatments for mental health conditions. By using computer vision to analyze brain images, they developed individualized therapy plans for children with schizophrenia. This innovation addresses a critical challenge in mental health care: variation in treatment efficacy due to differences in brain function.

Traditionally, doctors rely on trial and error to determine the best medication for a patient, which can be a lengthy and frustrating process. By contrast, the AI system used in the study was trained with diverse medical brain images and associated patient responses to specific treatments. It was able to detect patterns and recommend effective therapies quickly, reducing the need for extensive trial periods.
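
The study's exact algorithm is not described here, so the sketch below swaps in a plain k-nearest-neighbors approach to show the general idea of learning from past patients' treatment responses: find the most similar previous patients and suggest the treatment that most often worked for them. The two-number "image features", treatment names, and outcomes are all hypothetical.

```python
from collections import Counter
from math import dist

# Hypothetical history: (image-derived feature vector, treatment, responded?)
HISTORY = [
    ((0.2, 0.8), "treatment_A", True),
    ((0.25, 0.75), "treatment_A", True),
    ((0.3, 0.7), "treatment_B", False),
    ((0.8, 0.2), "treatment_B", True),
    ((0.75, 0.3), "treatment_B", True),
    ((0.7, 0.25), "treatment_A", False),
]


def recommend(features, k=3):
    """Suggest the treatment that most often worked for the k most similar past patients."""
    neighbours = sorted(HISTORY, key=lambda rec: dist(features, rec[0]))[:k]
    successes = Counter(treatment for _, treatment, responded in neighbours if responded)
    if not successes:
        return None  # no similar patient responded; leave the decision to the clinician
    return successes.most_common(1)[0][0]


print(recommend((0.22, 0.78)))
print(recommend((0.78, 0.22)))
```

A suggestion like this would only narrow the options a clinician considers; it does not replace clinical judgment about the individual patient.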

AI-Assisted Decision-Making with Network Pattern Recognition

Another promising development comes from Network Pattern Recognition, an AI model designed to analyze responses to patient surveys. By examining patient answers to targeted questions, the AI identifies specific mental health needs and offers data-driven recommendations for care. This system supports mental health practitioners by providing evidence-based insights, enabling more informed decisions on treatment pathways.

Enhancing Mental Health Care with AI-Powered Automation

Mental health professionals often face unique challenges when it comes to managing patient care, in part due to the complex and individualized nature of mental health conditions. Legacy technology tools and traditional advisory systems are often insufficient to meet their specialized needs. However, the integration of AI-driven mental health platforms is showing great promise in reducing administrative burdens and improving care delivery.

One innovative example is OPTT, an AI-powered platform designed to help mental health professionals streamline their workflows. By retrieving data from various IT systems within a healthcare setting, it can generate on-demand reports detailing each patient's progress, current condition, and potential outcomes. Early research suggests that solutions like OPTT could increase access to quality mental health services by as much as 400%, addressing a critical gap in mental health care.

Beyond patient reporting, enterprise-grade AI is proving its value in automating daily administrative tasks, including:

- EHR management: AI speeds up the identification of key clinical information by organizing and sorting medical records efficiently.

- Paperwork assistance: AI tools simplify form filling, allowing mental health professionals to focus more on patient interactions.

- Literature analysis: By processing clinical papers, AI systems help therapists stay current on evidence-based practices without spending hours on research.

Mental Health AI: Real World Examples

AI is already being explored and tested in mental health care, and several examples are worth highlighting.

Predicting Mental Health Risks

Generating accurate predictions is where AI shines. Researchers at Duke Health have created an AI model capable of accurately predicting when adolescents are at high risk of developing severe mental health issues before symptoms escalate. This groundbreaking approach focuses on identifying underlying causes, such as sleep disturbances and family conflicts, rather than relying solely on current symptoms. Doing so enables proactive interventions and expands access to mental health services via primary care providers.

The model leverages data from the ongoing ABCD study, which has assessed the psychosocial and brain development of over 11,000 children across five years. Using a neural network, a type of model loosely inspired by connections in the brain, the researchers analyzed psychosocial and neurobiological factors to predict which children could move from lower to higher psychiatric risk within a year. Patients or their parents complete a questionnaire about current behaviors, feelings, and symptoms, and the AI ranks their responses to estimate the likelihood of mental health deterioration. Impressively, the model achieved an accuracy of 84% in forecasting escalations in mental illness.
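
A rough sketch of how questionnaire answers could be turned into a ranked risk estimate is shown below. It substitutes a simple logistic score for the neural network described above, and the item names, 0 to 3 answer scale, weights, and bias are hypothetical rather than taken from the Duke model. Sleep problems and family conflict appear only because they are among the factors the researchers highlight.

```python
import math

# Hypothetical questionnaire items (answers on a 0-3 scale) and illustrative weights
WEIGHTS = {"sleep_problems": 0.6, "family_conflict": 0.5, "low_mood": 0.4, "worry": 0.3}
BIAS = -3.0


def escalation_risk(answers):
    """Logistic score in (0, 1): a stand-in for 'risk escalates within a year'."""
    z = BIAS + sum(WEIGHTS[item] * answers.get(item, 0) for item in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def rank_by_risk(patients):
    """Sort patient IDs from highest to lowest estimated risk."""
    return sorted(patients, key=lambda pid: escalation_risk(patients[pid]), reverse=True)


patients = {
    "p1": {"sleep_problems": 3, "family_conflict": 2, "low_mood": 1, "worry": 1},
    "p2": {"sleep_problems": 0, "family_conflict": 0, "low_mood": 1, "worry": 0},
    "p3": {"sleep_problems": 1, "family_conflict": 3, "low_mood": 2, "worry": 1},
}
print(rank_by_risk(patients))
```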

Additionally, Duke's researchers developed an alternative AI model that not only predicts worsening mental health but also identifies potential mechanisms driving the deterioration. With a 75% accuracy rate, this new model provides valuable insights into triggers and pathways to mental illness, equipping healthcare providers and families with tools to take preventive action and implement targeted interventions.

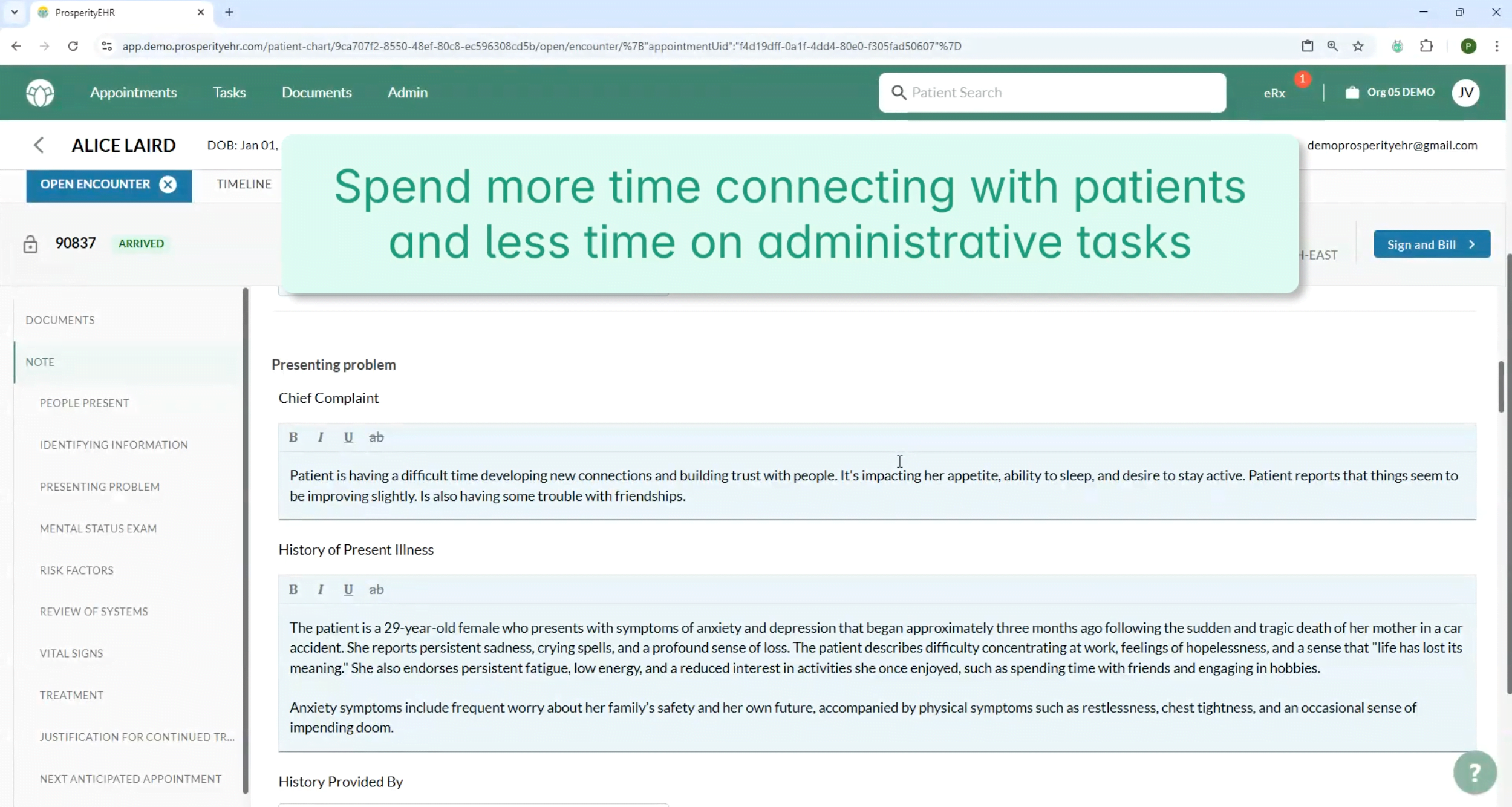

EHR for Behavioral Health Practices

ProsperityEHR has introduced a revolutionary electronic health record (EHR) system tailored for behavioral health organizations, designed to enhance financial stability, streamline operations, and elevate patient care with its AI-ready architecture.

The behavioral health sector faces unique challenges, including a sharp increase in service demand, limited access to federal healthcare incentives, and slower technology adoption rates. ProsperityEHR bridges these gaps by integrating mental health workflows within a single platform. This unified system connects essential tools such as insurance verification, clearinghouses, e-prescribing platforms, and telehealth solutions, addressing the industry's technological lag.

Currently, only 6% of mental health facilities and 29% of substance use treatment centers utilize certified EHRs, compared to an adoption rate exceeding 96% in hospitals. ProsperityEHR highlights additional challenges, including rising claim denial rates and delayed reimbursement timelines, which pressure providers to reevaluate their operational strategies. The new platform emphasizes improving interoperability while empowering behavioral health practices to adapt to industry challenges.

Personalized Therapeutic Sessions

In March 2025, Talkspace introduced Talkcast, a feature that enables mental health professionals to create AI-generated audio experiences tailored to their patients. These personalized podcasts include affirmations, strategies, and guidance designed to reinforce therapeutic progress between sessions.

Therapists can generate and review 3-to-5-minute, HIPAA-compliant podcast episodes before sharing them with consenting clients aged 18 or older. According to Talkspace, incorporating therapeutic exercises into these podcasts can improve patient outcomes. By utilizing this tool, providers extend their support beyond sessions, empowering clients to practice recommended techniques and skills in their own time.

During the pilot rollout, mental health providers on the platform praised Talkcast for referencing specific themes and concepts discussed during therapy sessions. This customization allows therapists to design podcast episodes with AI-generated hosts, offering a unique, mobile-friendly resource for patients who opt in.

Managing Mental Health Conditions

Click Therapeutics is a biotechnology company specializing in AI-powered software designed to provide cutting-edge medical treatments and interventions. This software, accessible through a mobile app, can function independently or alongside pharmacotherapies to manage conditions such as depression, migraines, and obesity.

By leveraging advanced algorithms, the app collects and analyzes patient data, including symptom severity and sleep patterns, to detect trends and deliver personalized treatment strategies. It also draws on digital biomarkers, such as data captured by smartphone sensors, for additional insights. For instance, the app can monitor a patient's heart rate to identify high stress levels and then recommend tailored solutions such as mindfulness exercises, relaxation techniques, or cognitive-behavioral therapy modules.
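
One plausible way to flag stress from heart-rate data is to compare each reading against the user's own recent baseline. The sketch below does this with a rolling z-score; the window size, the threshold, and the suggested exercise are hypothetical choices, not Click Therapeutics' actual method.

```python
from statistics import mean, stdev


def stress_flags(heart_rates, window=5, z_threshold=3.0):
    """Flag readings far above the user's own rolling baseline.

    Each reading is compared with the mean and sample standard deviation of
    the previous `window` readings; earlier readings are never flagged.
    """
    flags = []
    for i, bpm in enumerate(heart_rates):
        if i < window:
            flags.append(False)  # not enough history for a baseline yet
            continue
        baseline = heart_rates[i - window:i]
        spread = stdev(baseline) or 1.0  # avoid dividing by zero on a flat baseline
        flags.append((bpm - mean(baseline)) / spread > z_threshold)
    return flags


# Hypothetical heart-rate readings (beats per minute) with one spike
readings = [68, 70, 69, 71, 70, 72, 95, 74, 71]
for i, flagged in enumerate(stress_flags(readings)):
    if flagged:
        print(f"reading {i} ({readings[i]} bpm): suggest a short mindfulness exercise")
```

Using a personal baseline rather than a fixed bpm cutoff accounts for the fact that resting heart rates differ between people, although physical activity can raise heart rate just as stress does.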

Patients can share these app-generated insights with their healthcare providers, offering a holistic view of their conditions and behaviors for more informed treatment decisions. This collaboration between patient and provider enhances care delivery, optimizes outcomes, and supports better management of chronic conditions.

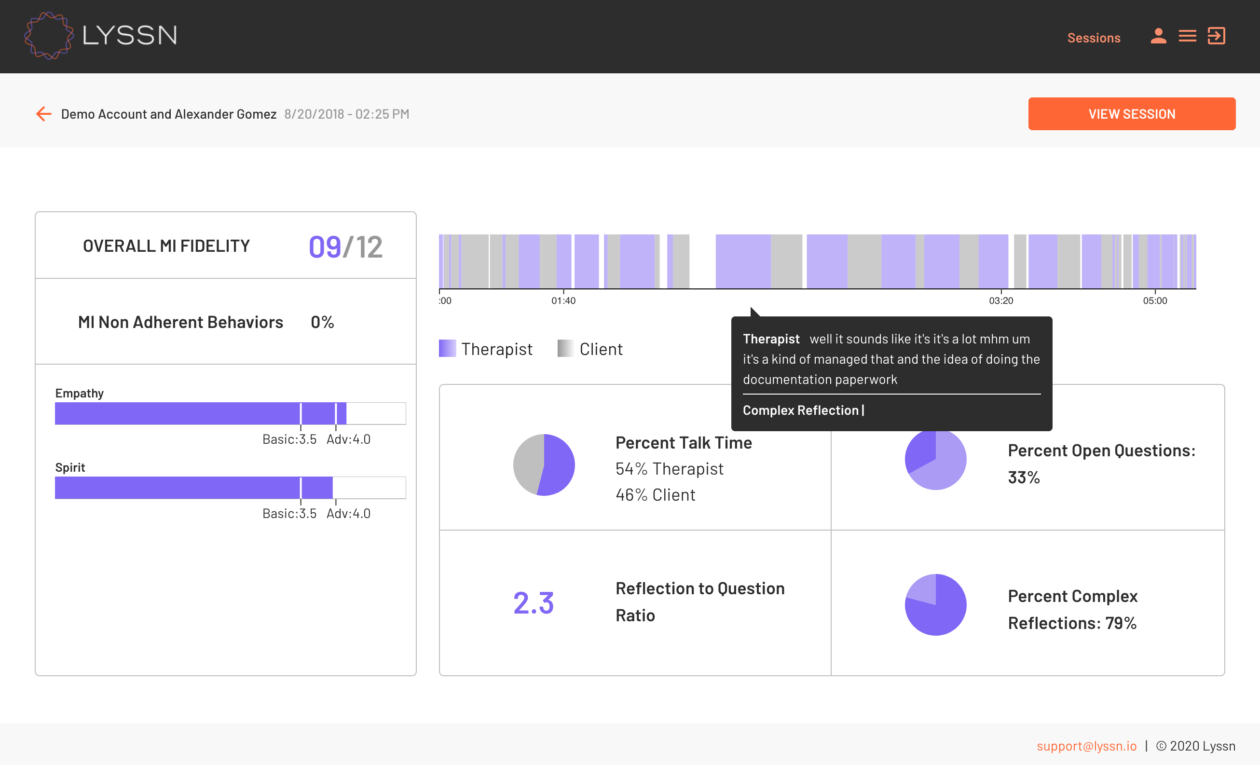

Improving Mental Health Services

Lyssn is another groundbreaking AI-powered platform designed to enhance the quality of mental health services. Its on-demand training modules help behavioral health providers refine their engagement strategies and improve interactions with patients.

With patient consent, providers can record therapy sessions, enabling Lyssn's advanced AI to analyze elements like speech patterns and tone from both parties. This technology provides actionable insights, helping clinicians hone their conversational approaches for better patient outcomes.

For instance, the platform can evaluate how clinicians respond during sessions that require cultural sensitivity, assessing their curiosity about the patient's experiences and their ability to discuss sensitive topics without apprehension. Based on these detailed assessments, Lyssn offers immediate feedback, along with personalized training recommendations to improve providers' skills.

Conversational AI in Mental Health

Therapeutic chatbots leverage NLP and machine learning to simulate conversations and offer emotional guidance. By addressing cognitive and emotional factors, they provide immediate, accessible, and anonymous support. These features make them particularly useful for individuals in underserved or remote areas where access to licensed therapists may be limited. Chatbots are also valuable for users who fear stigma or feel uncomfortable seeking help, such as individuals with autism, because they create a safe space free from judgment.

Key benefits of therapeutic chatbots include:

- Availability: Bots can provide 24/7 support, ensuring individuals have access to assistance at any time.

- Consistency: Unlike humans, who may vary in responses due to mood or fatigue, chatbots maintain uniformity in their interactions.

- Anonymity: Users can express themselves freely without fear of judgment, enhancing openness in seeking support.

Note: Although the potential applications of AI in mental health have been widely discussed, specific frameworks that define how clinicians and AI might collaborate remain underdeveloped. The focus should shift toward creating actionable collaboration models and migration paths to integrate these technologies effectively within existing therapeutic practices. Conversational AI is unlikely to reach the level of sophistication required to replace human therapists in the near future. However, it doesn't need to pass the Turing Test (i.e., demonstrate human-like conversational abilities) to have a meaningful impact on mental health care. Instead, a more immediate challenge lies in designing and executing collaborative tasks that combine relatively straightforward AI systems with human practitioners.

Ethical and Safety Concerns in Generative AI for Mental Health

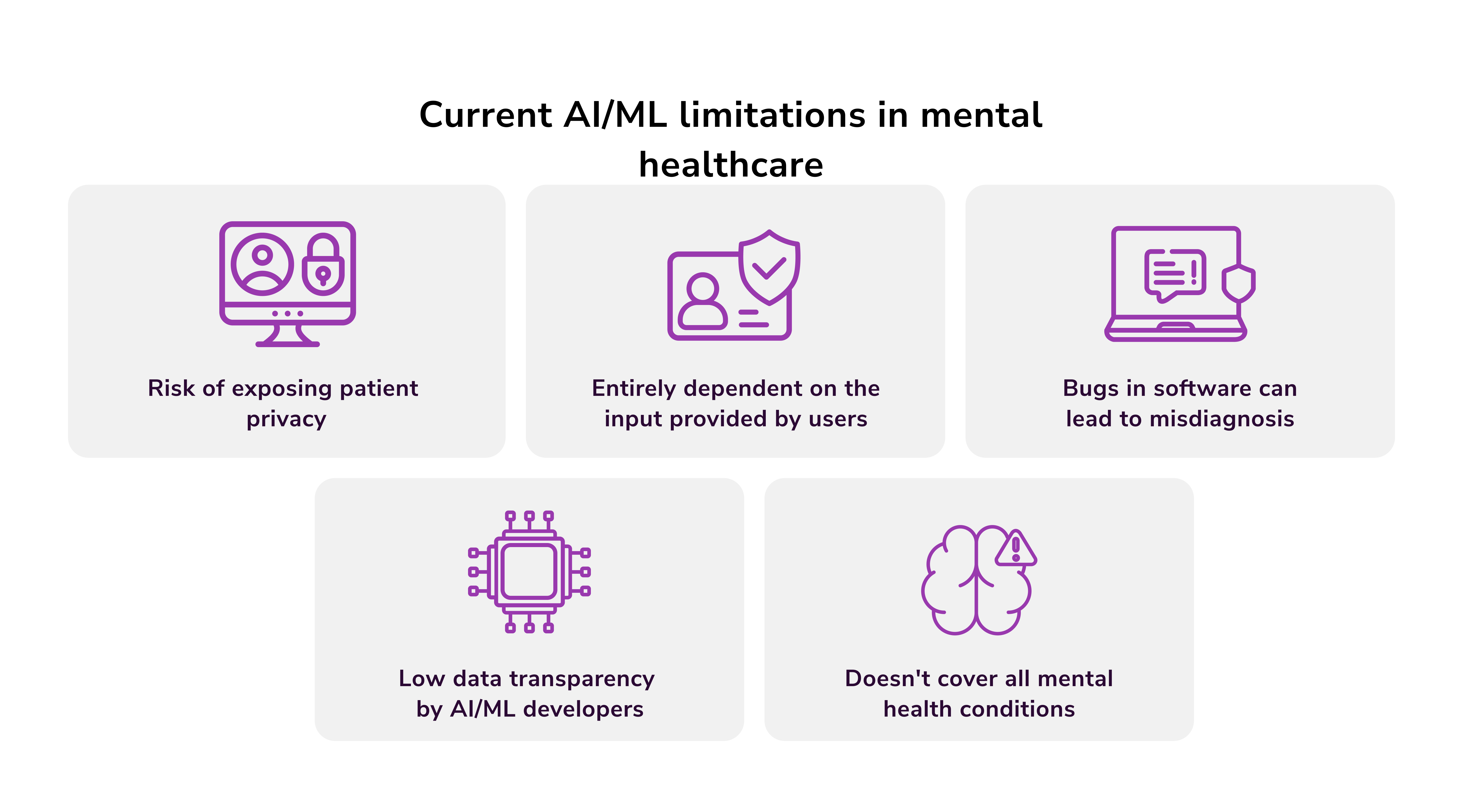

When implementing generative AI in mental health care, certain ethical and safety questions must be addressed. A primary concern is whether these systems could exacerbate symptoms in patients with severe mental illnesses, particularly psychosis.

A 2023 analysis highlighted the risks of generative AI's lifelike responses. Combined with the public's limited understanding of how these systems operate, these interactions could potentially reinforce or align with existing delusional thinking. This concern is perhaps why studies involving Therabot and ChatGPT chose to exclude individuals presenting psychotic symptoms.

However, excluding such individuals raises issues of equity. Those with severe mental illnesses often experience cognitive impairments like disorganized thinking or challenges with maintaining attention. These very hurdles could limit their ability to engage with digital tools, yet these individuals are also among those who could derive the most benefit from accessible mental health innovations. If AI-driven mental health tools are only accessible to individuals with advanced communication skills and high digital literacy, their utility within clinical populations may be restricted.

Another potential limitation of AI systems in mental health is the phenomenon of "AI hallucinations." This occurs when AI systems generate fabricated responses, such as citing nonexistent sources or sharing incorrect explanations. These errors, while inconvenient in other contexts, could pose significant risks in the realm of mental health. For example, an AI tool misinterpreting a prompt might inadvertently validate harmful plans or offer guidance that unintentionally reinforces negative behaviors.

Dangers of LLMs

Is AI breaking barriers of accessibility and offering scalable support to a broader population? Yes. However, it isn't a panacea.

According to a report by the World Health Organization published last year, large language models could exacerbate technology-enabled issues like gender-based violence, including cyberbullying and hate speech. These harms have serious implications for the overall health and well-being of populations, especially adolescent girls and women.

AI tools like chatbots, virtual companions, and other forms of agentic AI may negatively affect user mood, self-esteem, and mental resilience, and these effects can extend beyond individuals to their communities. Adding to the complexity, governments struggle to regulate such technologies effectively. While certain AI tools could be classified as medical devices and thus face stricter oversight, many operate in undefined areas that fall outside existing regulatory frameworks.

At present, there is a significant knowledge gap regarding the scale of generative AI use and its potential harms. This is because critical data, such as the prevalence of use, dominant AI products, the companies manufacturing them, and information about the user base, remain insufficiently documented.

Victoria Melnychuk
