Overcoming Challenges in Generative AI: Scaling, Costs, and Accuracy

Adoption of generative AI (GenAI) is entering a pivotal stage, with 67% of organizations reporting increased investments due to the significant value delivered so far. While expectations for GenAI's transformational potential are high, challenges related to scaling, costs, and accuracy are creating barriers, dampening some enthusiasm among leadership. To secure future investments, it will be essential to demonstrate GenAI's impact to the C-suite. Although 54% of businesses are focused on leveraging GenAI for efficiency and productivity gains, only 38% are actively measuring changes in employee productivity.

With its dynamic capabilities, generative AI development has become a key growth driver for businesses and innovators seeking to push boundaries. However, it comes with a set of limitations and challenges that the tech world has yet to overcome.

Generative AI Scaling Problems

Compared to previous technological shifts like cloud computing or the internet, organizations are significantly less prepared for generative AI. Implementing it requires IT leaders to build a sophisticated, multi-layered technology stack.

Even the simplest generative AI solution involves assembling 20 to 30 different components. These components include a user interface, data enrichment tools, security protocols, access controls, and an API gateway to connect with foundational models. Automating tasks like model testing and validation is also crucial to fully unlock the potential of generative AI.

Another challenge lies in seamlessly integrating GenAI tools with existing enterprise IT infrastructures, particularly legacy systems. The effort is worthwhile because corporate mainframes hold vast amounts of valuable data that can be leveraged to train AI models, which gives them a critical role in driving AI forward.

Scaling from prototype to production

Moving an AI concept from prototype to a fully functional production solution can be challenging. Teams often need to transition quickly from initial testing phases to final implementation, and failing to adhere to best practices can lead to "technical debt." This results in:

- frequent issues where things break or don't work as intended

- difficulties in improving or optimizing the codebase

- higher costs to keep systems operational

- prolonged timelines to complete projects

- limited bandwidth for creating and innovating new features

One way to address this problem is Kedro, an open-source Python framework designed to tackle these challenges. Here's what it brings:

- facilitates reproducible, maintainable, and modular code

- integrates best practices into the development process right from the beginning

- boasts over 17 million downloads and 10,000 stars on GitHub

- trusted by developers from 250+ companies

Gif credit: QuantumBlack, AI by McKinsey.
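To make the idea of modular, reproducible code concrete, here is a minimal plain-Python sketch of a pipeline built from small, named steps. It is not Kedro's API. The `Node` tuple, the `run` helper, and the dataset names (`raw_docs`, `clean_docs`, `token_count`) are hypothetical, chosen only to illustrate the pattern of pure functions wired together by named inputs and outputs.

```python
from typing import Callable, NamedTuple


class Node(NamedTuple):
    """One pipeline step: a pure function plus the names of its inputs and output."""
    func: Callable
    inputs: list
    output: str


def clean(raw):
    """Strip whitespace and drop empty records."""
    return [r.strip() for r in raw if r.strip()]


def count_tokens(cleaned):
    """Count whitespace-separated tokens across all records."""
    return sum(len(r.split()) for r in cleaned)


def run(nodes, catalog):
    """Run nodes in order, reading inputs from and writing outputs to a dict."""
    data = dict(catalog)  # copy so the caller's catalog is not mutated
    for n in nodes:
        data[n.output] = n.func(*(data[name] for name in n.inputs))
    return data


# Hypothetical two-step pipeline: clean the documents, then count tokens.
PIPELINE = [
    Node(clean, ["raw_docs"], "clean_docs"),
    Node(count_tokens, ["clean_docs"], "token_count"),
]

result = run(PIPELINE, {"raw_docs": ["  hello world ", "", "generative AI at scale"]})
print(result["token_count"])
```

Because each step is a plain function with explicit inputs and outputs, it can be tested in isolation and reused in another pipeline, which is the property that keeps prototype code from turning into technical debt.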

Unlocking Reusability Through Assetization

To scale AI effectively, organizations must prioritize reusing code, insights, and resources wherever possible. Doing so accelerates time-to-value and enhances productivity. However, many companies face significant hurdles when it comes to establishing reusability, including:

- Solutions that are not designed with reuse in mind.

- A lack of centralized repositories for reusable AI assets.

- Hesitation among teams to adopt code they didn't create themselves.

Brix addresses these challenges by providing a platform for assetizing reusable AI use cases. Its core features include:

- Discovery of reusable code to identify and share valuable assets seamlessly.

- Telemetry tools to track and analyze asset performance over time.

- Integration of siloed code, ensuring previously isolated solutions are consolidated.

- Streamlined project integration, making reusable assets easy to implement.

- Robust versioning systems to maintain clarity and track updates.

Gif credit: QuantumBlack, AI by McKinsey.

Repurposing AI Assets for Use Cases

Sharing and adapting models across different business units or customer groups is far from simple. This process demands specialized expertise, clearly defined workflows, and advanced tools. Often, teams face challenges in keeping their code streamlined and properly organized, which can hinder productivity and performance outcomes.

Alloy provides a structured framework designed to simplify the development of reusable software components. This innovative system empowers AI teams to translate their knowledge into actionable code while fostering modularity and collaboration at scale.

Key features of Alloy:

- Acts as a "factory" for generating adaptable AI use cases.

- Facilitates the creation of modular AI components that can be customized as needed.

- Helps package these components into enterprise-ready solutions.

Gif credit: QuantumBlack, AI by McKinsey.

Generative AI Implementation Costs

For business owners, entrepreneurs, data scientists, and developers, understanding the financial considerations behind building generative AI is essential. This includes evaluating its feasibility and identifying the various factors influencing costs. The development process involves multiple phases, from software design and data acquisition to infrastructure and ongoing maintenance. By breaking down these components, one can better comprehend potential hidden expenses and plan for successful implementation.

Building a generative AI application represents a considerable investment. Initial development costs, including research, data processing, and infrastructure, typically range between $600,000 and $1,500,000. Annual operational expenses, covering maintenance, updates, and ongoing requirements, may vary between $350,000 and $820,000.

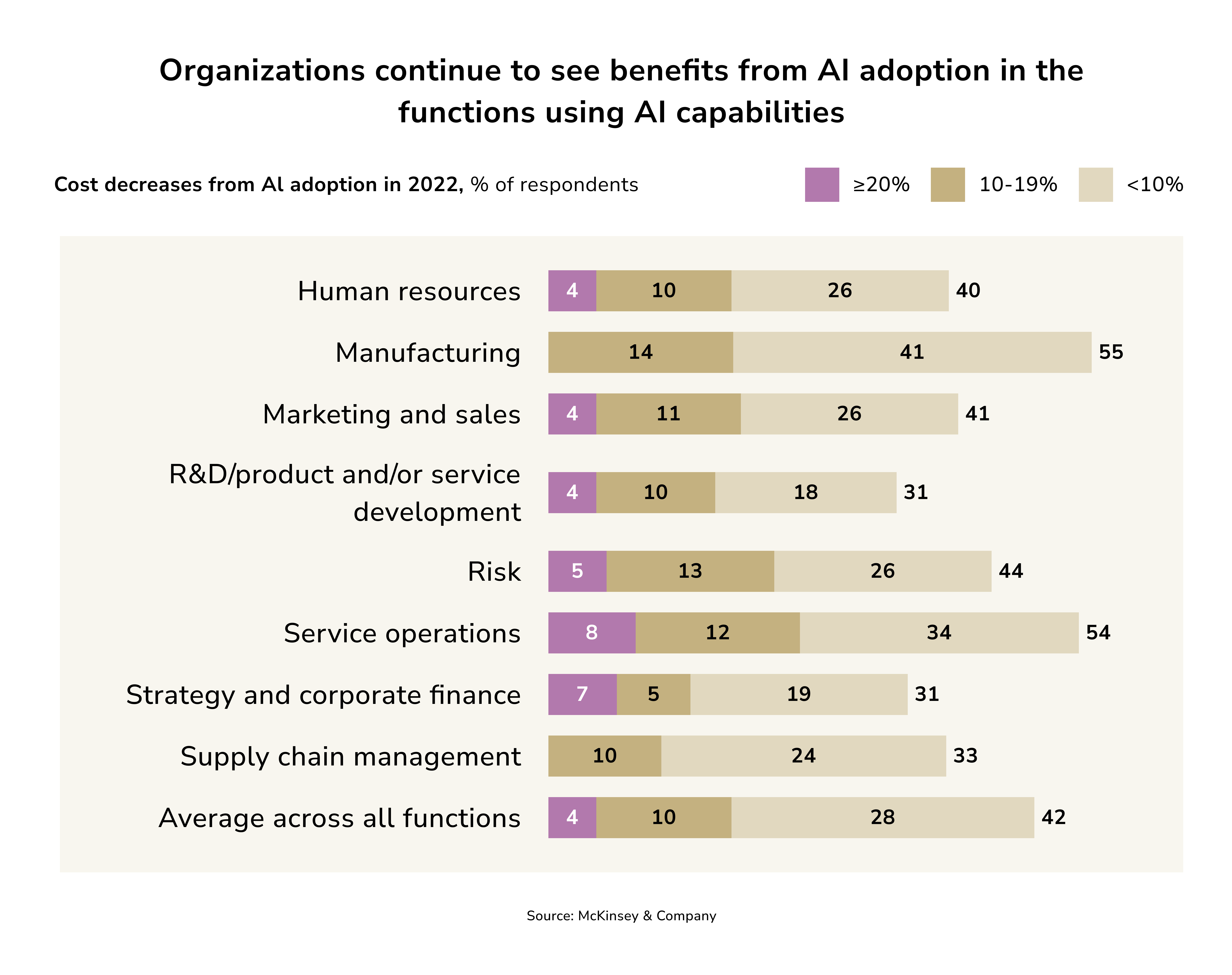

Despite the high deployment costs, organizations continue to reap benefits from AI implementation.

Factors Influencing the Cost of Developing Generative AI

Developing a generative AI app involves various factors that can influence the overall cost. Here's a concise breakdown:

- Scope and complexity. Advanced apps (e.g., image generation, language understanding) require more effort and are costlier than simpler text-based apps.

- Type of generated content. Text-based apps are generally more affordable. Apps generating images, videos, or audio involve advanced algorithms and higher costs.

- R&D. Significant investment in experts (researchers, data scientists) is necessary, costing between $50,000 and $150,000 per specialist.

- Algorithm and model selection. Implementing advanced or custom-trained models can range from $40,000 to $150,000.

- UI/UX. Building an intuitive UI/UX adds value but increases costs.

- Data acquisition and processing. Quality datasets cost $30,000 to $100,000. Cleaning and processing them may add $20,000 to $60,000.

- Integration with external systems. Integrating APIs or databases costs between $30,000 and $100,000, with deployment expenses ranging from $40,000 to $120,000.

- Testing and quality assurance. Rigorous testing and validation cost between $20,000 and $80,000.

- Infrastructure requirements. High-performance GPUs and scalable cloud services can cost anywhere from $30,000 to $120,000.

- Development team. Skilled developers, engineers, and AI specialists demand higher wages, ranging from $80,000 to $150,000.

- Maintenance and updates. Ongoing updates and maintenance add long-term expenses between $40,000 and $150,000.

- Geographic location. Development costs vary significantly by region. Teams in North America and Europe are more expensive than teams in regions like Asia or South America.

- Compliance and ethics. Ensuring regulatory compliance (e.g., GDPR, data security) costs between $30,000 and $100,000.
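The ranges above can be rolled up into a rough budget envelope. The sketch below sums the one-off items from the list. The headcount of two R&D specialists is an assumption made for illustration, and recurring items (developer wages, maintenance) are left out, so the result is not meant to reproduce the overall totals quoted earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    name: str
    low: int           # lower bound in USD
    high: int          # upper bound in USD
    quantity: int = 1  # e.g. headcount for per-specialist items


# One-off items taken from the list above; quantity=2 is an assumed headcount.
ONE_OFF_ITEMS = [
    LineItem("R&D specialist", 50_000, 150_000, quantity=2),
    LineItem("Algorithm and model selection", 40_000, 150_000),
    LineItem("Data acquisition", 30_000, 100_000),
    LineItem("Data cleaning and processing", 20_000, 60_000),
    LineItem("Integration with external systems", 30_000, 100_000),
    LineItem("Deployment", 40_000, 120_000),
    LineItem("Testing and quality assurance", 20_000, 80_000),
    LineItem("Infrastructure", 30_000, 120_000),
    LineItem("Compliance and ethics", 30_000, 100_000),
]


def total_range(items):
    """Return the (low, high) budget envelope for a list of line items."""
    low = sum(item.low * item.quantity for item in items)
    high = sum(item.high * item.quantity for item in items)
    return low, high


low, high = total_range(ONE_OFF_ITEMS)
print(f"One-off estimate: ${low:,} to ${high:,}")
```

Keeping quantity separate from the unit range makes it easy to see how sensitive the envelope is to team size, which is often the largest swing factor.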

Investing in generative AI technologies is undeniably significant, yet the potential returns in efficiency and innovation can be transformational. While the upfront costs may seem high, a well-implemented generative AI application has the capacity to deliver substantial value, making it a strategic choice for organizations aiming to lead in today's AI-driven economy.

GenAI Accuracy and Bias Concerns

Since the introduction of ChatGPT in 2022, interest in generative AI tools and their applications has surged. According to Gartner, by 2025, generative AI will account for 10% of all data produced globally. However, data generated by generative AI tools can also exhibit biases, much like other AI models.

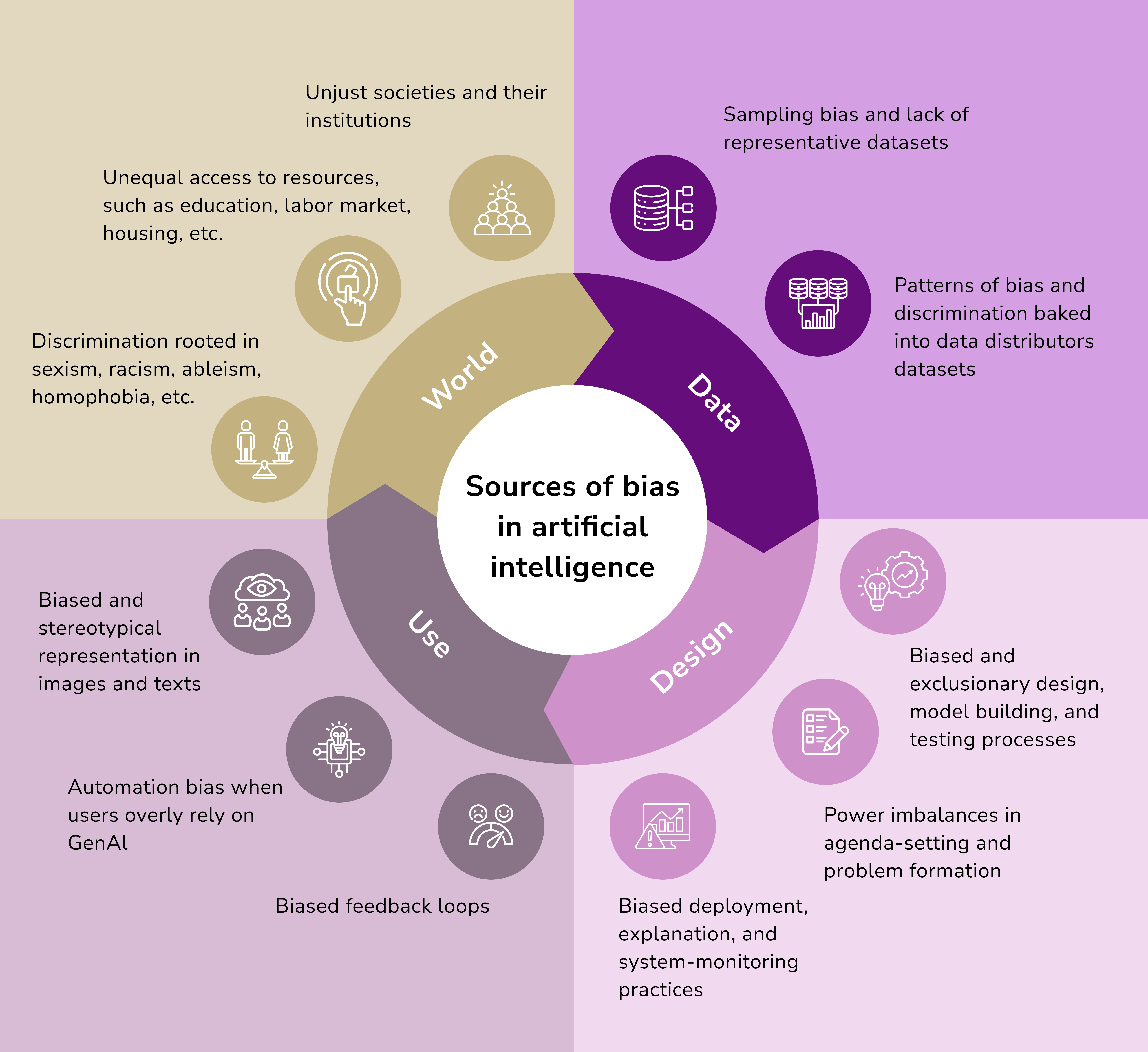

AI bias refers to irregularities in the outcomes of machine learning algorithms caused by biased assumptions during their development or prejudices inherent in the training data. The implications of these biases extend beyond technology—they can lead to real-world consequences, such as increasing risks for specific populations when such systems are incorporated into predictive policing software. This can result in outcomes like physical harm or wrongful imprisonment, underscoring how critical it is to address and mitigate these biases.

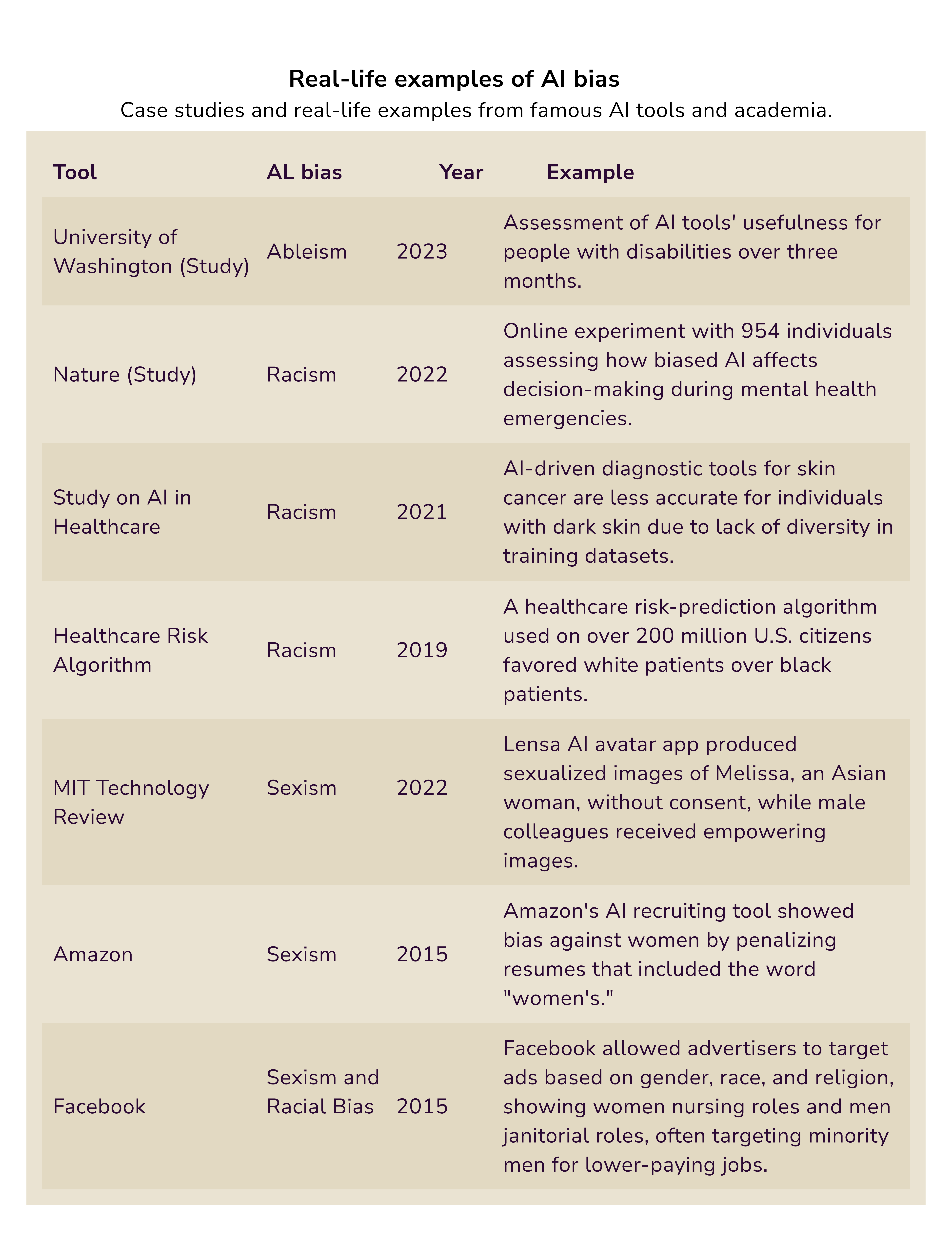

A study by Bloomberg examined the outputs of Stable Diffusion, a generative AI text-to-image model, and revealed some concerning biases. The findings showed that in the "world" created by Stable Diffusion, white male CEOs dominate leadership roles, while women seldom appear as professionals such as doctors, lawyers, or judges. In contrast, men with darker skin tones were frequently depicted as criminals, while women with darker skin were portrayed in lower-paying roles like flipping burgers.

Stable Diffusion creates images based on written prompts using artificial intelligence. While some outputs might seem accurate at first glance, they often reflect significant distortions of reality. An analysis of over 5,000 images generated by Stable Diffusion found that it amplified racial and gender stereotypes to a degree far beyond what is observed in real life.

The analysis revealed a bias in the generated image sets where individuals with lighter skin tones were predominantly depicted for high-paying professions, while those with darker skin tones appeared more often for prompts such as "fast-food worker" or "social worker."

Bloomberg study evaluating outputs based on skin color and job occupation.

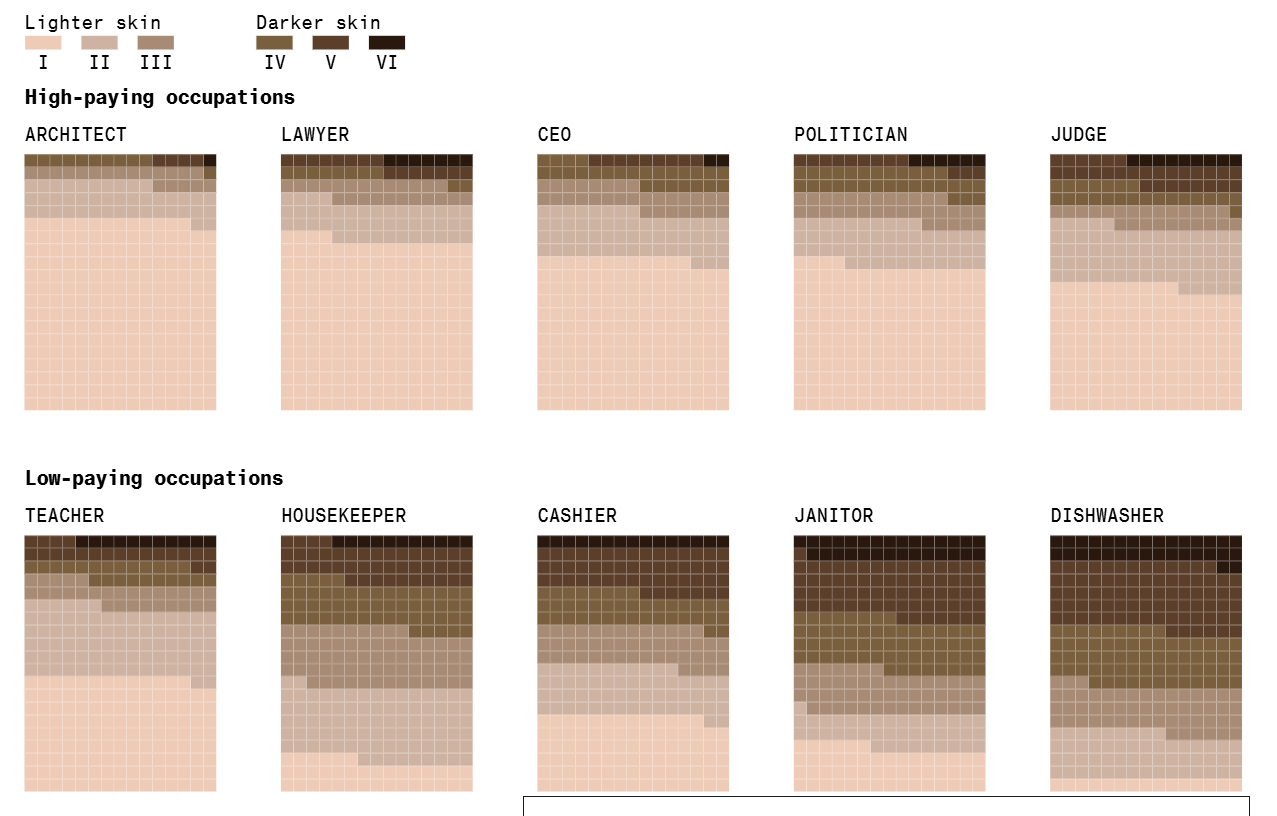

A similar pattern emerged when analyzing images by gender. A team of reporters labeled the perceived gender of each individual depicted in the imagery. Results showed that images of perceived men outnumbered images of perceived women by nearly three to one. Men dominated most professions in the dataset, except for lower-wage occupations like housekeepers and cashiers.

Bloomberg study evaluating outputs based on gender and job occupation.

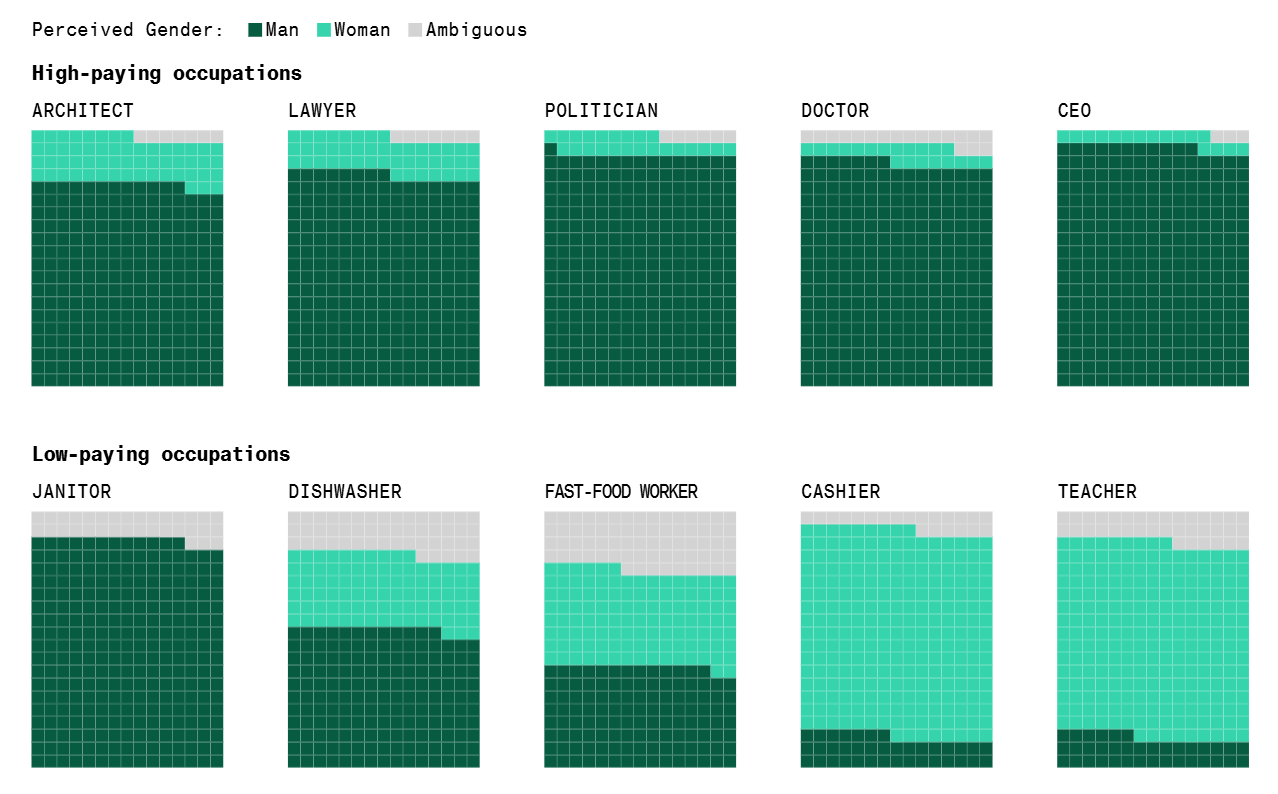

Sources of AI Bias

Bias can infiltrate GenAI primarily through real-world training data that reflects existing societal inequalities and discrimination, as well as unbalanced datasets that favor more privileged groups. If left unchecked, such bias can become embedded in development processes, often dominated by a single demographic—predominantly white men. A lack of diversity during the design, development, and testing stages can make it harder to identify and address these biases.

When biased outputs are deployed in real-world applications, they can perpetuate stereotypes, further widening disparities in fairness, representation, and access to opportunities. Over time, as GenAI evolves and its integration deepens, these outputs may re-enter training datasets, creating a self-reinforcing loop of bias that amplifies discrimination, exclusion, and inequity.

UNESCO research from 2024 highlights a troubling trend in how GenAI systems associate genders with certain stereotypes. For example, women are linked to terms like "home," "family," and "children" four times as often as men, while male-associated names are tied to terms such as "career" and "executive." EqualVoice used GenAI image generators to analyze bias and discovered that the prompt "CEO giving a speech" yielded male images 100% of the time, with 90% being white men. On the other hand, the prompt "businesswoman" generated images of women who were uniformly young, conventionally attractive, and predominantly white (90%).

Beyond societal implications, this kind of bias poses significant risks at an organizational level. Businesses relying on GenAI for tasks like market segmentation, product design, or customer engagement may face challenges unless safeguards are put in place. Ineffective checks and balances can result in flawed decision-making, stagnated innovation, and missed opportunities due to a failure to address diverse user needs and perspectives. Additionally, ethical lapses caused by bias can harm brand reputation and customer loyalty.

Looking ahead, as GenAI's integration across industries accelerates, regulations surrounding its ethical use are only expected to become stricter. Organizations that fail to address these challenges risk serious financial and operational consequences as compliance expectations grow.

Factors Contributing to AI Bias

There are three main factors contributing to AI bias.

Cognitive Bias

Cognitive biases are unconscious errors in thinking that influence judgment and decision-making. They arise from the brain's tendency to simplify the vast amount of information it processes daily. Over 180 cognitive biases have been identified by psychologists, highlighting their scope and complexity. Such biases can find their way into AI algorithms through:

- Designers inadvertently embedding them in the model's design.

- Training datasets containing those biases.

These biases can subtly shape AI behavior, leading to flawed decision-making when the model processes data.

Algorithmic Bias

Algorithmic bias occurs when existing prejudices in training data or the algorithm's design amplify inequalities. It can result either from explicit programming choices or from assumptions held by developers. For example, an algorithm prioritizing factors such as income or education may perpetuate stereotypes and contribute to discrimination against marginalized communities.

Incomplete Data

Another common source of bias is incomplete or unrepresentative data. If a dataset lacks diversity, the AI trained on it will reflect those limitations. For instance, much of psychological research relies heavily on data derived from undergraduate students—a narrow demographic that does not accurately represent the general population.
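One simple way to spot an unrepresentative dataset is to compare each group's share in the data with its share in a reference population. The sketch below does exactly that. The age-group labels, the sample, and the reference shares are all hypothetical values chosen to mirror the undergraduate example.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return (dataset share - reference share) for every reference group."""
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical dataset: 10 records, heavily skewed toward the youngest group.
samples = ["18-24"] * 7 + ["25-44"] * 2 + ["45+"] * 1
# Hypothetical reference population shares.
reference = {"18-24": 0.2, "25-44": 0.4, "45+": 0.4}

gaps = representation_gaps(samples, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.0%}")
```

A large positive gap marks an over-represented group and a large negative gap an under-represented one. What counts as "large" is a judgment call that depends on the use case.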

Will AI Ever Be Completely Unbiased?

Technically, it is possible for an AI system to be free of bias, but this depends on the quality of its input data. By ensuring training datasets are free of conscious or unconscious biases—whether related to race, gender, or ideological concepts—it's feasible to create an AI capable of making fair, data-driven decisions.

Yet, in practice, achieving a completely unbiased AI remains challenging because AI is only as good as the data it is trained on, and humans are inherently responsible for creating that data. Human biases, many of which are only now being identified, inevitably make their way into datasets and models. Furthermore, humans and human-created algorithms are responsible for identifying and addressing these biases, which compounds the challenge.

While fully unbiased AI may not be immediately attainable, we can focus on minimizing bias. By rigorously testing datasets and algorithms and adhering to principles of responsible AI, we can work toward reducing the influence of these biases.

How Can Biases in AI and Machine Learning be Addressed?

The first step is acknowledging that AI biases stem from human prejudices embedded within the data. Efforts should focus on identifying and eliminating these prejudices, though this task is far from straightforward.

A simplistic approach might involve removing protected categories such as gender or race from datasets or eliminating labels that might introduce bias. However, this strategy can backfire, as it may impact the AI model's understanding and reduce its accuracy.

There is no single, quick solution for eradicating all biases, but organizations like McKinsey have outlined best practices for minimizing AI bias. These recommendations emphasize the importance of designing AI systems thoughtfully while implementing robust testing methodologies to ensure fairness and accuracy.


Challenges in Ensuring Fairness in Generative AI

Addressing bias in artificial intelligence necessitates a multi-faceted approach. Key strategies include:

- Enhancing training data: Carefully selecting and diversifying data, while addressing existing imbalances, can significantly reduce ingrained biases within the system.

- Utilizing fairness metrics: Defining, measuring, and optimizing fairness criteria provides a framework for identifying and minimizing algorithmic biases.

- Adopting techniques like adversarial debiasing: This approach explicitly optimizes AI models to diminish learned biases through targeted training.

- Inclusive design from the outset: Incorporating diverse use cases during the design phase ensures broader applicability and minimizes exclusion. Tools and frameworks such as STEEPV (Social, Technological, Economic, Environmental, Political, and Values) analysis help assess potential risks to fairness and discrimination in practical scenarios.

- Testing models in varied environments: Reviewing models under different conditions highlights contextual biases that might otherwise go unnoticed.

- Promoting diversity: Increasing the diversity of data sources and the teams developing AI systems helps offset potential biases. Many leading organizations have dedicated responsible AI teams or AI ethics offices, which oversee the process to ensure alignment with ethical guidelines.
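As an illustration of the fairness-metrics strategy above, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of positive outcomes across groups. This is only one of several common fairness criteria, and the article does not prescribe a specific one. The outcomes and group labels are hypothetical.

```python
def selection_rates(outcomes, groups):
    """Return the share of positive outcomes (1) for each group."""
    totals, positives = {}, {}
    for outcome, group in zip(outcomes, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if outcome == 1 else 0)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(outcomes, groups):
    """Gap between the highest and lowest group selection rate (0 means parity)."""
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions (1 = favorable) for two groups of four people.
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(outcomes, groups))
print(demographic_parity_difference(outcomes, groups))
```

Tracking a number like this across model versions turns "fairness" from an aspiration into something that can be measured, reviewed, and optimized.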

Although no single solution can fully eliminate biases, integrating these methods into AI development has begun to establish effective best practices for mitigation.

Limitations of Generative AI

Generative AI holds immense promise, offering creative and innovative solutions in fields like marketing, design, and entertainment. However, it’s far from perfect and comes with specific challenges and limitations. Here's an overview of its main constraints:

1. Dependency on Data

The quality of generative AI's outputs heavily relies on its training data. If the data used during training is incomplete, biased, or flawed, the AI's outcomes will mirror these issues. Such dependency becomes particularly problematic in areas requiring unbiased, comprehensive, and accurate information, leading to potentially skewed or unreliable outputs.

2. Lack of Transparency (Black Box Problem)

Generative AI models often operate as "black boxes," meaning it's unclear how they arrive at their decisions or outputs. This lack of transparency can be troubling, especially in high-stakes fields like healthcare or finance, where understanding the reasoning process is essential to making critical decisions.

3. Susceptibility to Manipulation

Despite their sophistication, generative AI systems can be easily misled or manipulated. Small adjustments to the input data—undetectable to humans—can drastically alter the AI's output. This vulnerability can be exploited in harmful ways, such as providing misleading data to skew results or conducting adversarial attacks to produce incorrect or false outputs.
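The effect of a small, targeted input change can be shown on a toy model. The sketch below uses a hypothetical three-feature linear classifier rather than a generative model, and shifts each feature by a small amount in the direction that hurts the current prediction. The weights, input, and step size are made-up values for illustration.

```python
def score(weights, bias, x):
    """Linear score: weighted sum of the features plus a bias."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    """Return class 1 if the score is positive, otherwise class 0."""
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def adversarial_shift(weights, x, eps, label):
    """Move each feature by eps in the direction that lowers confidence in label."""
    direction = -1 if label == 1 else 1
    return [xi + direction * eps * sign(w) for w, xi in zip(weights, x)]


# Hypothetical classifier and input.
WEIGHTS, BIAS = [2.0, -3.0, 1.0], 0.0
x = [0.5, 0.3, 0.1]

original = predict(WEIGHTS, BIAS, x)
x_adv = adversarial_shift(WEIGHTS, x, eps=0.05, label=original)
print(original, predict(WEIGHTS, BIAS, x_adv))
```

No feature moves by more than 0.05, yet the predicted class flips. Attacks on real models follow the same principle, using the model's gradients to find the most damaging direction.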

4. Limited Creativity and Contextual Awareness

While generative AI systems mimic creative processes, they lack true originality, as they rely on rearranging and reusing existing patterns from their training data. They also struggle with context, especially in complex or nuanced situations, making it difficult for them to grasp cultural subtleties, emotional cues, or ethical considerations.

5. Poor Generalization to Unfamiliar Tasks

Generative AI systems excel at tasks similar to those included in their training but often fail when faced with entirely new or unfamiliar challenges. This limitation requires frequent retraining on updated data to ensure the models remain relevant and effective.

6. High Resource Requirements

The development and operation of generative AI models demand significant computational resources. Training large-scale models is not only expensive but also energy-intensive, making it challenging for smaller organizations to adopt such technologies. Additionally, the environmental impact of running energy-hungry data centers introduces sustainability concerns.

Generative AI is advancing rapidly, with recent model updates and releases continuously improving its capabilities. Yet, it's crucial to contextualize these limitations against the backdrop of its evolution. Understanding these challenges allows us to better harness the potential of generative AI while working to overcome its shortcomings.

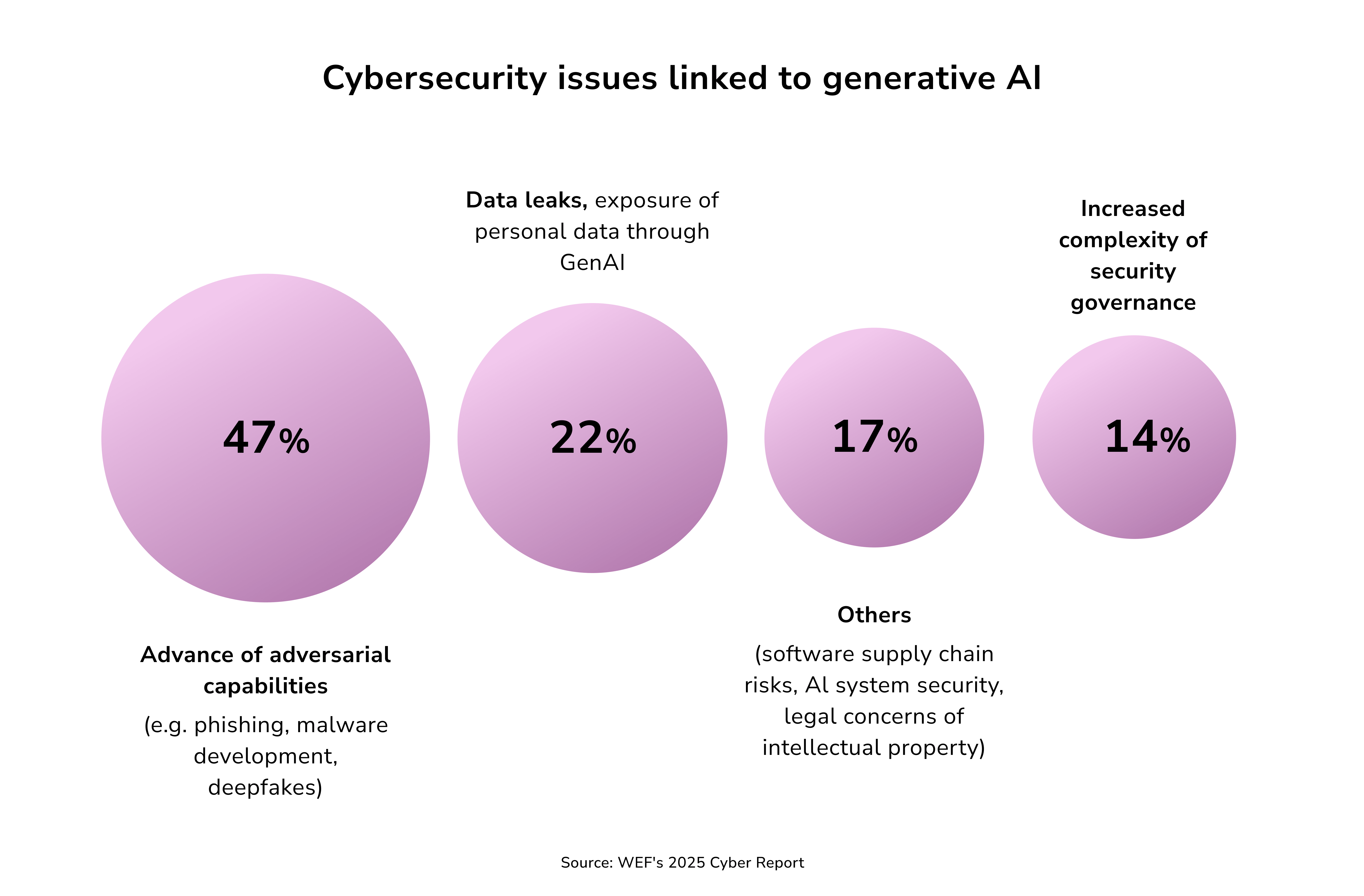

Generative AI Security Risks

The rise of generative AI brings with it a unique set of security challenges, largely due to its ability to produce highly convincing yet deceptive content. From deepfakes to synthetic identities and forged documents, these tools can facilitate fraud, spread misinformation, and enable other harmful activities. Such advancements pose serious risks to personal, corporate, and even national security, highlighting the urgent need to address the misuse of generative AI.

Additionally, as generative AI becomes increasingly embedded in business and governmental operations, the risk of system manipulation grows. Malicious actors could exploit vulnerabilities in AI to influence decision-making, generate biased outcomes, or disrupt critical services. Securing generative AI is not only about protecting the infrastructure itself but also safeguarding the integrity of its outputs, which are now shaping real-world perceptions and influencing crucial decisions.

Let us briefly list the most widely discussed GenAI risks and their possible solutions:

- Misinformation & deepfakes. Risk: Hyper-realistic fake content spreads false narratives, impacting politics, society, and individuals. Solution: Use public awareness campaigns, media literacy, digital watermarking, and advanced AI detection tools to spot and combat deepfakes.

- Training data leakage. Risk: AI systems may memorize and reveal sensitive personal or intellectual property information. Solution: Techniques like differential privacy obscure specifics in datasets, securing data confidentiality during training.

- User data privacy. Risk: Sensitive user data shared with generative AI tools can be misused or exploited. Solution: Implement encryption, restrict user data inclusion in training, and use privacy-enhancing technologies (PETs).

- AI model poisoning. Risk: Malicious actors corrupt AI training datasets, disrupting performance in high-stakes areas like self-driving cars or finance systems. Solution: Enforce rigorous model validation and regularly evaluate datasets for inconsistencies.

- Bias in AI models. Risk: AI systems unintentionally amplify societal biases, producing discriminatory outputs. Solution: Identify and rectify biased datasets before training, adopt ethical AI practices, and monitor AI outputs rigorously.

- AI-driven phishing attacks. Risk: Generative AI automates realistic phishing scams that are hard to distinguish from legitimate communications. Solution: Strengthen defenses with AI-powered detection systems and educate users about evolving phishing techniques.

- AI-generated malware. Risk: AI creates advanced malware that evades traditional detection by continuously altering its characteristics. Solution: Rely on behavior-based detection and dynamic analysis tools to counter polymorphic threats effectively.
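To give a feel for how differential privacy, mentioned under training data leakage, obscures specifics, here is a minimal sketch of the Laplace mechanism applied to a counting query. It illustrates the core idea only: training-time approaches add noise to gradients rather than to query answers. The records, the `epsilon` value, and the function names are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of matching records.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the example repeatable
ages = [23, 35, 41, 29, 52, 38, 61, 27]  # hypothetical records

noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller `epsilon` means more noise and stronger privacy, at the price of less accurate answers, so choosing it is a trade-off between confidentiality and utility.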

Leveraging GenAI has become a core driver of business innovation and growth. Organizations have shifted their focus from simply exploring the potential of these technologies to fully harnessing them on a large scale to capture market share.

At Impressit, we understand that implementing and scaling AI/ML systems is a complex undertaking that should not be underestimated. Our mission is to empower businesses to achieve accelerated, sustainable, and inclusive growth through the intelligent use of AI.

Andriy Lekh
