Computer Vision in Logistics: Technical Applications in Key Areas

Advanced computer vision algorithms (in most cases based on deep learning and convolutional neural networks) enable machines to interpret visual data captured by cameras and sensors. Computer vision seeks to mimic the way humans perceive and interpret what they see, helping companies make smarter decisions through pattern recognition, insight extraction, and in-depth data analysis. Today, CV technologies and custom AI solution development are essential in logistics for process automation, higher data quality, and real-time visibility in warehouse and supply chain operations.

We decided to take an in-depth look at how this technology works, the major CV algorithms, and their application areas in logistics.

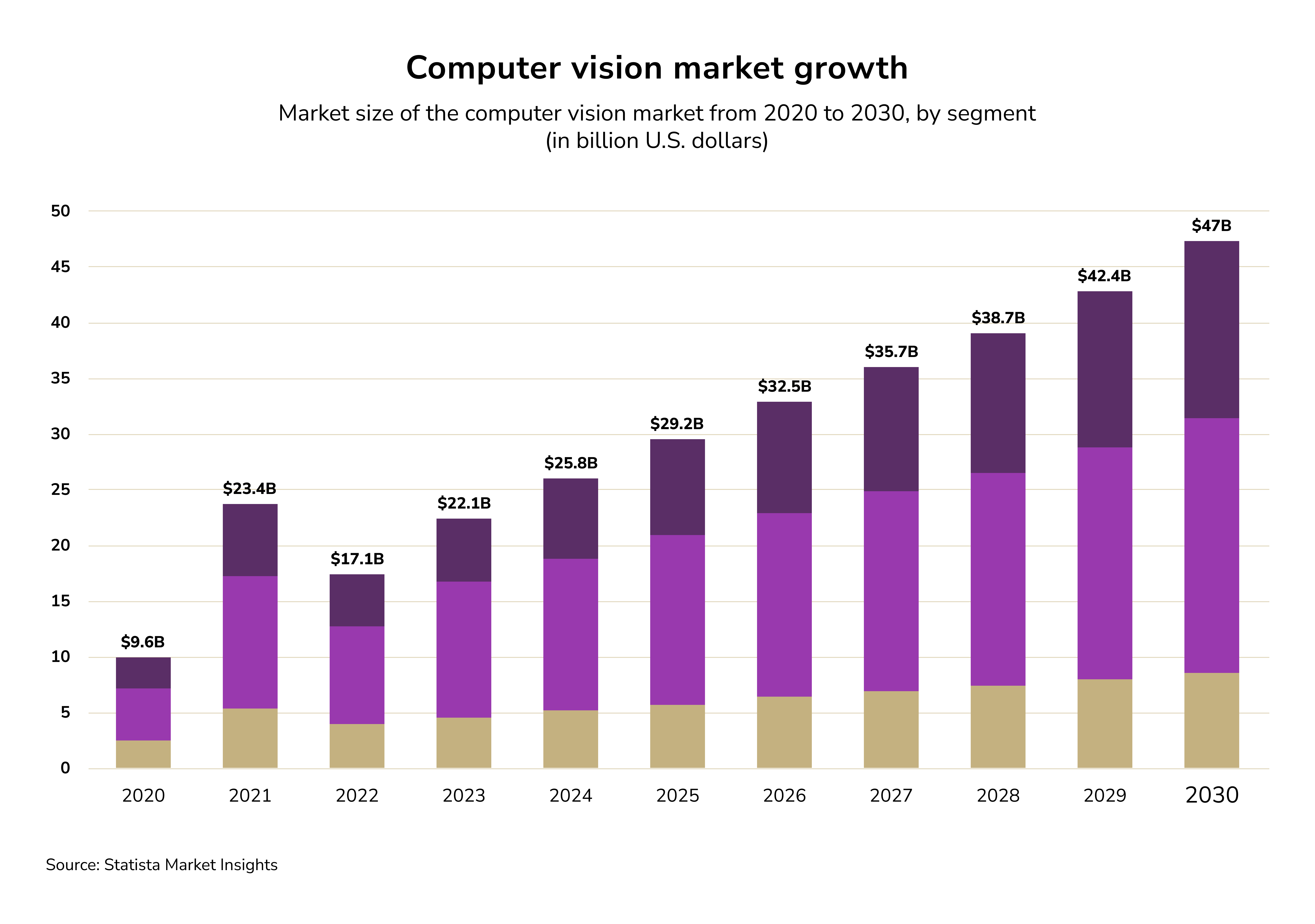

Computer Vision Market

The computer vision (CV) technology market is relatively young and is projected to expand rapidly compared to other market segments. By the early 2030s, the market value is expected to grow from the current $25 billion to about $50 billion. The rapid pace of technological advancements, the growing number of applications across other industries, and rising market demand are the main drivers of growth in the computer vision market. The use of CV technology enables companies to identify key areas for business process automation, which has been the goal of AI use from the outset.

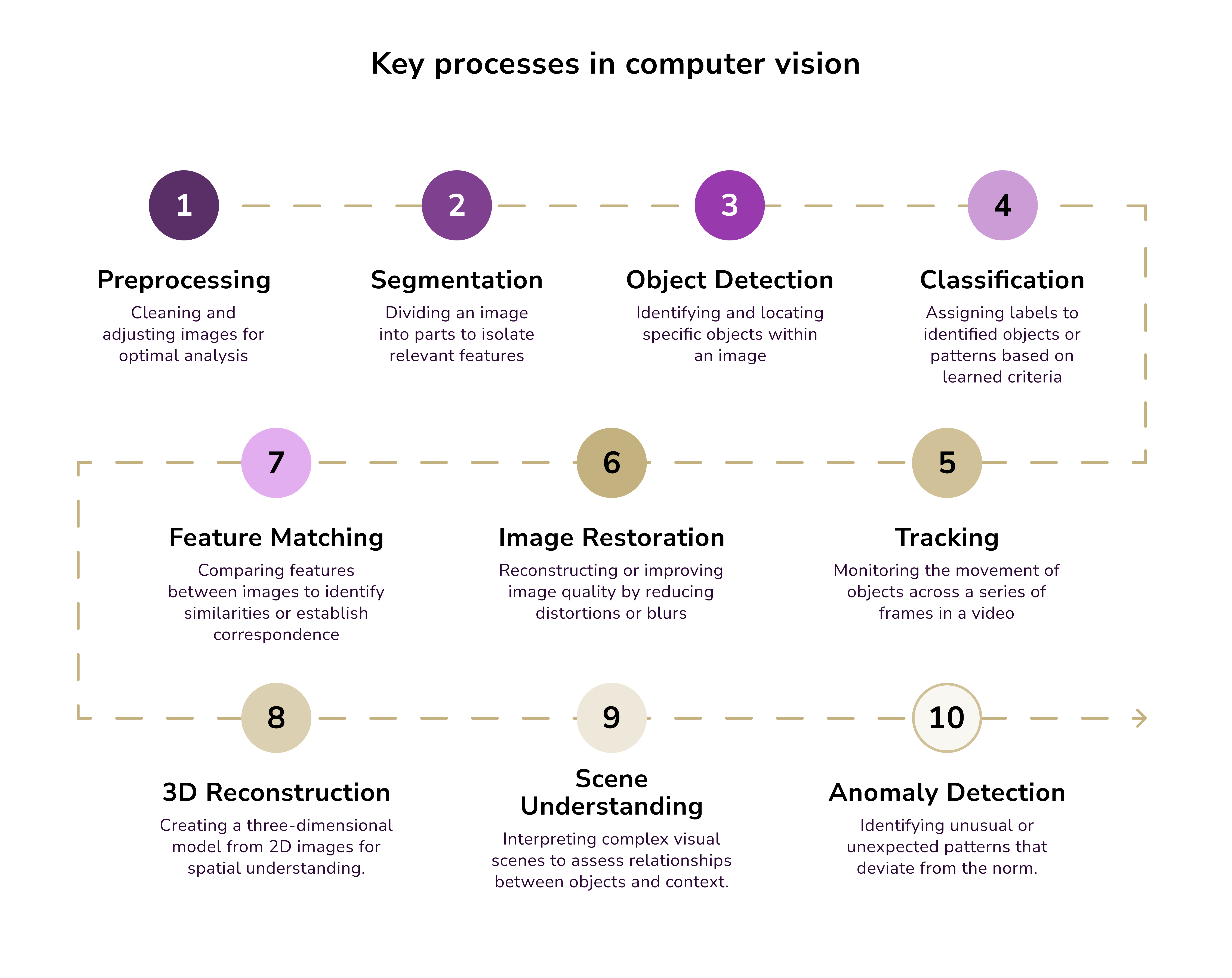

Key Processes in Computer Vision

Computer vision systems typically perform a sequence of image processing and analysis steps to convert raw visual inputs into useful information. A typical CV pipeline includes the following key processes:

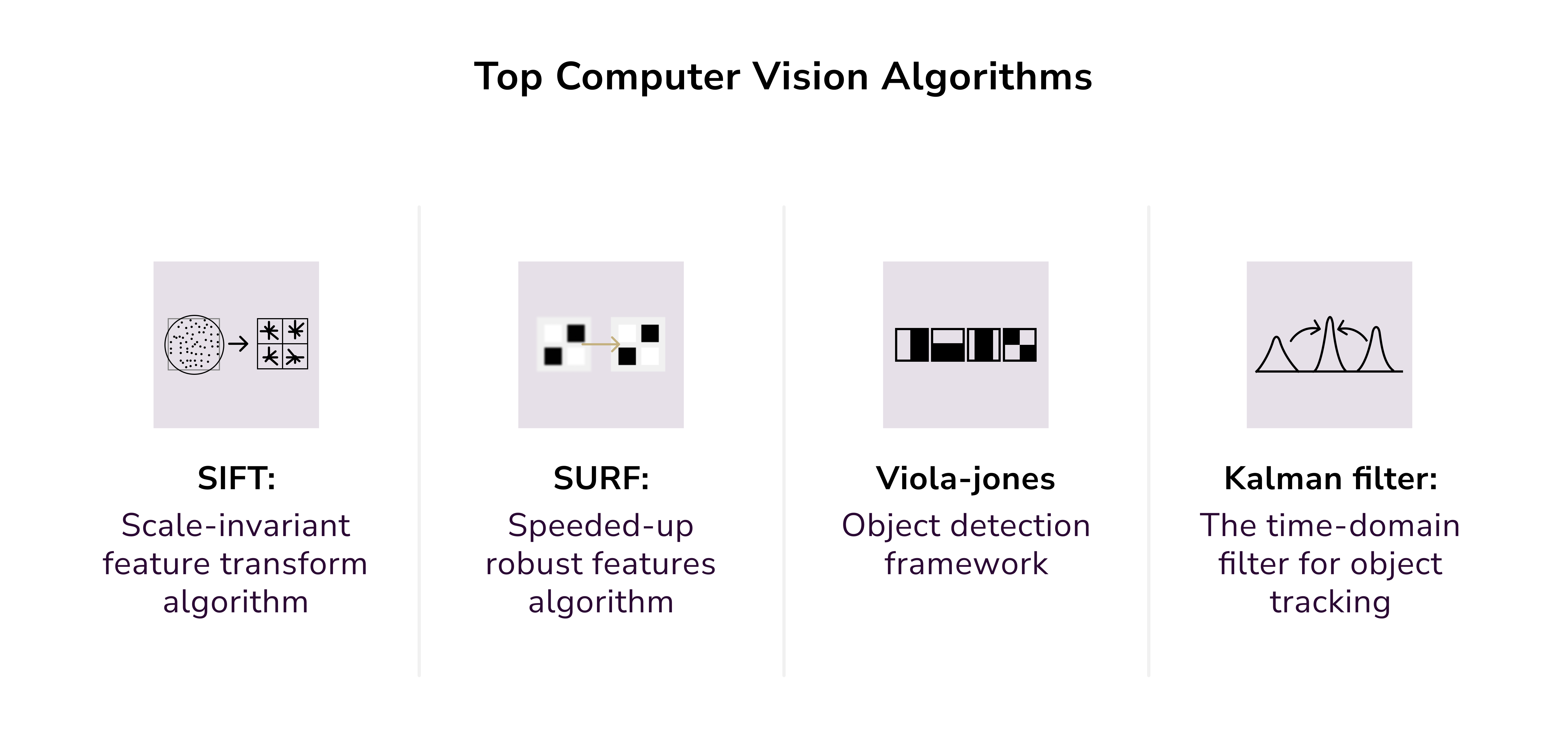

Computer Vision Algorithms

A wide range of algorithms supports logistics tasks, from classical image analysis to the latest deep learning techniques. Let's summarize the most relevant algorithm types in computer vision.

Image Processing and Low-Level Vision Algorithms

These classical techniques operate directly on an image's raw pixel data to extract low-level visual primitives. Typical examples include edge-detection filters such as the Canny operator and the Sobel kernel, which respond to sharp intensity changes and highlight object edges. Thresholding, on the other hand, converts a grayscale image into a binary image by assigning pixels above a chosen intensity threshold to the foreground and the rest to the background; Otsu's method selects that threshold automatically from the image histogram.

This approach is useful for separating simple foreground and background regions. Other low-level algorithms include morphological operations, such as dilation and erosion, which clean up noisy binary images, and noise-reduction filters that restore degraded images. Although simple, these methods are often the first step in CV systems: they provide the preprocessing that more complex algorithms depend on, or quickly identify and isolate regions of interest in an image.
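To make the two operations above concrete, here is a minimal pure-Python sketch of Sobel edge strength and Otsu thresholding. It assumes an 8-bit grayscale image stored as nested lists for readability; production systems would normally call an optimized library such as OpenCV instead.

```python
def sobel_magnitude(img):
    """Sobel gradient magnitude for interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    gx_k = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to horizontal intensity changes
    gy_k = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to vertical intensity changes
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += gx_k[dy][dx] * p
                    gy += gy_k[dy][dx] * p
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def otsu_threshold(img, levels=256):
    """Return the threshold t that maximizes (a scaled form of) between-class variance."""
    hist = [0] * levels
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_bg = sum_bg = 0
    for t in range(levels):
        w_bg += hist[t]              # pixels with value <= t (background)
        if w_bg == 0:
            continue
        w_fg = total - w_bg          # pixels with value > t (foreground)
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def binarize(img, t):
    """Map pixels above the threshold to 1 (foreground) and the rest to 0."""
    return [[1 if p > t else 0 for p in row] for row in img]
```

On a dark 8x8 image containing a bright 2x2 "package", `otsu_threshold` picks a value between the two intensity levels, `binarize` keeps exactly the four bright pixels, and `sobel_magnitude` is zero in flat regions and positive around the package border.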

Feature Detection and Description Algorithms

Before the widespread use of deep learning, classical CV relied heavily on handcrafted feature detectors. SIFT is one of the most widely used algorithms for detecting keypoints that are invariant to image scale and rotation. SURF and ORB are similar but faster alternatives, and the Harris corner detector is one of the oldest methods of this kind. Once keypoints have been detected, the system computes a descriptor for each one (a compact numeric summary of the surrounding image patch) and uses these descriptors, together with other classical algorithms, for image matching, object recognition, and classification.

Classifiers such as k-Nearest Neighbors and Support Vector Machines (SVMs) were commonly paired with these descriptors. A logo recognition system, for instance, could combine SIFT feature extraction with nearest-neighbor matching to detect a logo in an image. These handcrafted features and detectors laid the groundwork for numerous CV applications, and they remain relevant today, particularly where deep learning is not feasible due to limited data or computing resources.
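Nearest-neighbor descriptor matching, as in the logo example, can be sketched with Lowe's ratio test, which keeps a match only when the best candidate is clearly closer than the second best. The toy 3-D vectors stand in for real 128-dimensional SIFT descriptors, 0.75 is an assumed ratio (values around 0.7 to 0.8 are common), and the `min_matches` rule in `logo_present` is hypothetical.

```python
import math


def l2(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_descriptors(query, reference, ratio=0.75):
    """Nearest-neighbor matching with Lowe's ratio test.

    Returns (query_index, reference_index) pairs whose best match is clearly
    closer than the second-best match; ambiguous matches are discarded.
    """
    matches = []
    for qi, q in enumerate(query):
        dists = sorted((l2(q, r), ri) for ri, r in enumerate(reference))
        if len(dists) < 2:
            continue
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


def logo_present(query, reference, min_matches=3, ratio=0.75):
    """Hypothetical decision rule: the logo is present if enough descriptors match."""
    return len(match_descriptors(query, reference, ratio)) >= min_matches
```

A query descriptor that is equally close to several reference descriptors (for example, one sitting at the center of all of them) is rejected by the ratio test, which is exactly what keeps repetitive textures from producing false matches.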

Machine Learning and Object Recognition Algorithms

As datasets grew, integrating ML techniques into CV became commonplace. An early milestone was the Viola-Jones cascade classifier, which, once trained, could detect faces and other objects in real time. A similar approach combined Histogram of Oriented Gradients (HOG) features with an SVM classifier for object detection and became widely known for pedestrian detection. Learning from data was an improvement over the manual rules of the previous generation, and pairing handcrafted features with learned classifiers remained the state of the art in computer vision until the early 2010s, when the field shifted to deep learning.
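The core of HOG is a per-cell histogram of gradient orientations weighted by gradient magnitude. The sketch below computes one such histogram with 9 unsigned orientation bins (the configuration popularized for pedestrian detection) plus a simple L2 normalization. Full HOG normalizes over overlapping blocks of cells, and the concatenated descriptor would then be scored by a linear SVM; both steps are omitted here.

```python
import math


def hog_cell_histogram(cell, n_bins=9):
    """Orientation histogram for one HOG cell (unsigned gradients, 0-180 degrees).

    Gradients use central differences; each pixel's magnitude is split linearly
    between the two nearest bin centers. Border pixels of the cell are skipped.
    """
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    h, w = len(cell), len(cell[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            mag = math.hypot(gx, gy)
            if mag == 0:
                continue
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned orientation
            pos = angle / bin_width - 0.5    # bin i is centered at (i + 0.5) * bin_width
            lo = math.floor(pos)
            frac = pos - lo
            hist[lo % n_bins] += mag * (1 - frac)        # wraps around at 0/180 degrees
            hist[(lo + 1) % n_bins] += mag * frac
    return hist


def l2_normalize(v, eps=1e-6):
    """Scale a histogram to unit length to reduce sensitivity to lighting."""
    norm = math.sqrt(sum(x * x for x in v)) + eps
    return [x / norm for x in v]
```

A purely vertical edge produces horizontal gradients (0 degrees), so its energy is split between the first and last bins; a horizontal edge lands in the 90-degree bin.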

Deep Learning Algorithms (Neural Networks and Beyond)

CNNs (Convolutional Neural Networks) are the most important and influential deep learning architectures in computer vision. They rely on hierarchical feature learning: layers near the input learn simple features, such as edges and textures, while deeper layers learn increasingly complex shapes and object parts. CNNs are the foundation of modern architectures for image classification and object detection. ResNet, VGG, Inception, and, more recently, EfficientNet achieve high accuracy on large-scale classification benchmarks such as ImageNet, which spans 1,000 categories.

Faster R-CNN, YOLO, and SSD are widely used object detectors that use CNNs as feature extractors to locate and classify multiple objects in a single image. For image segmentation, deep models such as U-Net and Mask R-CNN classify the target image pixel by pixel.
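Detectors like these typically output many overlapping candidate boxes, which are commonly pruned with non-maximum suppression (NMS) based on intersection-over-union (IoU). The greedy version below is a generic sketch; the 0.5 IoU threshold is an assumed, commonly used default, and some newer detectors are designed to avoid NMS entirely.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of the boxes to keep."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)          # highest-scoring remaining box
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep
```

For two heavily overlapping package detections and one separate detection, `nms` keeps the higher-scoring box of the pair plus the separate one.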

Autoencoders and GANs (Generative Adversarial Networks) form another category of deep learning models, used for image enhancement and synthesis and for unsupervised feature learning. Vision Transformers (ViTs) have recently moved away from relying solely on CNNs and show significant promise. A ViT splits an image into fixed-size patches, treats each patch as a token, and applies the self-attention mechanism originally developed for NLP to model long-range relationships between patches. ViTs are effective for image classification, and transformer-based models are increasingly applied to detection and segmentation as well. In summary, deep learning models lead the field in computer vision because they learn rich feature representations directly from data instead of relying on handcrafted features.
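The patch-and-attend idea behind ViTs can be sketched in plain Python. This is a deliberately reduced version: it uses the raw flattened patches directly as queries, keys, and values, whereas a real ViT applies learned linear projections, adds position embeddings, and uses multiple heads. It only illustrates the data flow.

```python
import math


def patchify(img, p):
    """Split an H x W grayscale image into flattened, non-overlapping p x p patches."""
    h, w = len(img), len(img[0])
    assert h % p == 0 and w % p == 0, "image size must be divisible by patch size"
    patches = []
    for y in range(0, h, p):
        for x in range(0, w, p):
            patches.append([img[y + dy][x + dx] for dy in range(p) for dx in range(p)])
    return patches


def softmax(xs):
    m = max(xs)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product attention with identity Q/K/V projections.

    Returns (outputs, weights): each output token is a weighted average of all
    tokens, and weights[i][j] is how much token i attends to token j.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w[j] * tokens[j][i] for j in range(len(tokens)))
                        for i in range(d)])
    return outputs, weights
```

Because every patch attends to every other patch in a single step, distant regions of the image can influence each other directly, which is the "long-range relationship" advantage attributed to ViTs.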

How Computer Vision Works

At a high level, CV systems use AI (mostly machine learning) to learn from images and apply that knowledge to recognize content in new images. When building CV systems, developers train models on large datasets of labeled images so that the models generalize to real-world inputs.

For example, a model might learn from a million labeled images of cars to recognize the visual features that distinguish cars from other objects. Training involves repeatedly adjusting the model's internal parameters to reduce its error on the labeled examples. Accuracy typically improves over many passes through the training data and as more data is provided. By the end of training, the model has learned a mathematical representation of the visual concepts in the training data that it can apply to new images.
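The idea of adjusting internal parameters to reduce error is easiest to see on a tiny model. The sketch below trains a logistic-regression classifier by gradient descent on two hypothetical, pre-extracted image features per example; a CNN follows the same loop (predict, measure the loss, step against the gradient) with millions of parameters and automatic differentiation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(features, labels, lr=0.5, epochs=500):
    """Fit weights w and bias b by full-batch gradient descent on the mean log-loss.

    Returns (w, b, history) where history holds the mean loss of each epoch.
    """
    n_feat = len(features[0])
    w = [0.0] * n_feat
    b = 0.0
    history = []
    for _ in range(epochs):
        grad_w = [0.0] * n_feat
        grad_b = 0.0
        loss = 0.0
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            loss -= y * math.log(p + 1e-12) + (1 - y) * math.log(1 - p + 1e-12)
            err = p - y                   # derivative of the log-loss w.r.t. the logit
            for i in range(n_feat):
                grad_w[i] += err * x[i]
            grad_b += err
        n = len(features)
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]   # step against the gradient
        b -= lr * grad_b / n
        history.append(loss / n)
    return w, b, history


def predict(w, b, x):
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0
```

With a handful of "car" examples (label 1) that score high on both features and "non-car" examples (label 0) that score low, the recorded loss falls over the epochs and the trained model classifies all the training examples correctly.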

After the model is trained and deployed, new images undergo preprocessing (as explained previously) and are then passed to the trained model. If a convolutional neural network is used for image recognition, for example, the image's pixel values are convolved layer by layer. The first layers might respond to simple vertical or horizontal edges and blobs; deeper layers combine these into more complex features such as corners, textures, or shapes. Each layer produces a more abstract description of the image, and the final layers use this encoding to predict the image's content.
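The layer-by-layer processing described above can be mimicked with three building blocks of a CNN layer: convolution, ReLU, and max pooling. Here the kernel is a hand-set Sobel-style vertical-edge filter chosen for illustration; in a trained CNN, kernel values are learned. Deep learning libraries actually compute cross-correlation under the name "convolution", which is what `conv2d` does as well.

```python
def conv2d(img, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image without padding."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * img[y + i][x + j] for i in range(kh) for j in range(kw))
             for x in range(w - kw + 1)]
            for y in range(h - kh + 1)]


def relu(fmap):
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; trailing rows/cols that don't fill a window are dropped."""
    return [[max(fmap[y + i][x + j] for i in range(size) for j in range(size))
             for x in range(0, len(fmap[0]) - size + 1, size)]
            for y in range(0, len(fmap) - size + 1, size)]
```

Applied to a 5x5 image whose left two columns are dark and right three are bright, the edge filter fires only where the window straddles the dark-to-bright boundary, and pooling condenses that response into a smaller feature map for the next layer.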

Computer Vision Package Sorting

Let's examine how a computer vision package sorting system operates in a logistics context. Cameras continuously capture images of packages moving along a conveyor belt. The software first enhances each image (e.g., adjusting contrast to make labels clearer) and then uses a pre-trained model to extract and analyze the attributes that identify each package. It may, for example, create a binary mask for each package and then run OCR to read its label. Based on this information, the system assigns each package to a destination bin.

The model is trained beforehand on labeled examples, and at run time it compares what it observes with the patterns it learned from that training data. For example, it may learn to associate particular label text and colors with a destination and route the package accordingly. To do this, it needs to detect features in the image, locate text and characters, and classify each package by destination into a predetermined set of categories. The result is an instruction such as "send to loading dock 3", which automated systems or people can carry out.
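The final routing step can be sketched as a lookup from OCR'd label text to a destination. This assumes US-style five-digit ZIP codes and a hypothetical `ROUTING_TABLE` keyed by three-digit prefix; a real sorter would usually also rely on the barcode and a routing service, and would send unreadable labels to manual handling, as the fallback does here.

```python
import re

# Hypothetical routing table: ZIP-code prefix -> loading dock.
ROUTING_TABLE = {"100": "dock 3", "112": "dock 3", "606": "dock 5", "941": "dock 7"}
FALLBACK = "manual review"


def route_package(ocr_text, table=ROUTING_TABLE, fallback=FALLBACK):
    """Pick a destination using the first five-digit (optionally ZIP+4) code in the text."""
    match = re.search(r"\b(\d{5})(?:-\d{4})?\b", ocr_text)
    if not match:
        return fallback               # nothing readable: hand off to a person
    prefix = match.group(1)[:3]
    return table.get(prefix, fallback)
```

For instance, a label reading "NY 10001" maps to "dock 3", while illegible text or an unknown prefix falls back to "manual review". Note that this naive rule would be confused by a five-digit house number appearing before the ZIP code.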

Note: The entire process, from image capture to routing decision, typically takes a couple of seconds and is highly accurate, showing how computer vision turns pixels and other relevant data into actionable insight in real time.

Warehouse Management and Safety

One of the great benefits of using AI for warehouse management is enhanced employee safety. Computer vision supports warehouse management by giving robots and management systems "eyes" on the warehouse floor. One major application is mapping warehouse layouts for navigation. How does it work? Rather than following fixed paths (such as painted lines or magnetic strips), autonomous warehouse vehicles use cameras and Simultaneous Localization and Mapping (SLAM) techniques to build and continuously update a map of their environment. By combining camera vision with other sensors, such as LiDAR, robots can perceive the 3D scene (aisles and shelves) and localize themselves within it. This enables free navigation in dynamic layouts, along with obstacle avoidance and path replanning. When the robot's onboard CV system detects a change (e.g., a new pallet placed in an aisle), it updates its internal map on the fly. Such mapping is especially important in large warehouses, where it helps coordinate automated guided vehicles (AGVs) and drones without heavy infrastructure.
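The map-updating step can be illustrated with a log-odds occupancy grid, a standard representation in robot mapping. This covers only the mapping half of SLAM: it assumes the robot's pose is already known and that detections have already been converted to grid cells. The 0.7/0.3 sensor probabilities, the clamp limits, and the 0.65 blocking threshold are hypothetical values.

```python
import math

L_OCC = math.log(0.7 / 0.3)    # evidence added when a cell is seen occupied (assumed sensor model)
L_FREE = math.log(0.3 / 0.7)   # evidence added when a cell is seen free
L_MIN, L_MAX = -5.0, 5.0        # clamping keeps the map able to react to layout changes


def new_grid(rows, cols):
    """All cells start at log-odds 0, i.e. probability 0.5 (unknown)."""
    return [[0.0] * cols for _ in range(rows)]


def update_cell(grid, r, c, occupied):
    """Add one observation's evidence to a cell."""
    delta = L_OCC if occupied else L_FREE
    grid[r][c] = max(L_MIN, min(L_MAX, grid[r][c] + delta))


def probability(grid, r, c):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(grid[r][c]))


def is_blocked(grid, r, c, threshold=0.65):
    return probability(grid, r, c) > threshold
```

When a pallet is repeatedly seen in an aisle cell, the cell becomes blocked and the planner can route around it; once the pallet is removed and the cell is repeatedly observed free, the evidence swings back and the aisle reopens.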

On top of that, monitoring workers helps ensure they follow safety rules. Instead of relying only on human supervisors, cameras mounted around the site or built into machines monitor live footage continuously. This way, they can spot employees moving near hazardous areas, such as operating robots or busy forklift paths. If someone gets too close to a moving machine, the software intervenes, slowing the machine down or shutting it off immediately. These setups also log forklift and pedestrian positions and sound an alarm instantly whenever someone enters a restricted area or a vehicle exceeds the speed limit or travels the wrong way along its route.
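A minimal sketch of the slow-down/stop logic, assuming people and machines have already been detected and their positions projected onto floor coordinates in meters via camera calibration. The 2 m and 5 m radii, the speeds, and the rectangular restricted zone are hypothetical values; real installations follow their own safety standards and risk assessments.

```python
import math

# Hypothetical protective radii (meters) around a moving machine.
STOP_RADIUS = 2.0
SLOW_RADIUS = 5.0


def speed_command(machine_xy, people_xy, max_speed=2.0, slow_speed=0.5):
    """Return the allowed machine speed (m/s) given detected people positions."""
    if not people_xy:
        return max_speed
    nearest = min(math.dist(machine_xy, p) for p in people_xy)
    if nearest < STOP_RADIUS:
        return 0.0            # someone is too close: stop
    if nearest < SLOW_RADIUS:
        return slow_speed     # someone is nearby: slow down
    return max_speed


def in_restricted_zone(xy, zone):
    """True if a floor position lies inside zone = (x_min, y_min, x_max, y_max)."""
    x, y = xy
    return zone[0] <= x <= zone[2] and zone[1] <= y <= zone[3]
```

Each video frame, the detector's person positions would be fed to `speed_command` for every moving machine, and `in_restricted_zone` would drive the no-go-area alarm.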

These camera-driven safety tools usually connect to sensors and internet-connected devices—such as location-tracking tags—to build a stronger layer of protection that reduces accidents.

Robotic Arms and Pick-and-Place Machines

CV is also used to guide robotic systems in logistics, such as robotic arms and pick-and-place machines. In automated order fulfillment, robotic arms rely on CV to perform order picking. Overhead picking stations and robotic arms use high-resolution, custom CV systems that provide visual feedback to the arm, enabling it to see targets on shelves, in bins, or in drop zones. Deep learning-based object detectors select which item to pick according to matching criteria (e.g., shape, label, or barcode), locate the item, and determine its position and orientation.

As the robotic arm moves toward an object, computer vision makes small corrections on the fly, such as adjusting its path using reference markers to keep placement accurate. Once the item is lifted, the camera checks that the correct item was picked and that it is intact.

Example: At Ocado, robots pick groceries from containers using visual guidance: cameras locate each item and guide suction grippers to grasp it gently without damaging it. Amazon's Sparrow robotic arm combines artificial intelligence with imaging technology to identify individual items among a vast range of product types, showing how vision systems help robots handle endless variations in shape and size. In warehouses, reliable vision lets robots understand their surroundings, work safely near people, grasp objects precisely, organize them neatly, and stack boxes tightly.

Inventory Tracking and Identification

Maintaining continuous, accurate stock counts is one of the many challenges in the logistics industry. Computer vision offers automated identification, counting, and localization of stock items, drastically reducing the need for manual barcode scans or cycle counts.

Now, let's look at how it works in more detail. Strategically placed high-resolution cameras continuously capture images of products on shelves and pallet racks. Deep learning detectors such as YOLO and R-CNN variants locate and identify individual products or packages on a shelf. By reading printed labels, barcodes, or 2D codes such as QR codes at a glance, camera-driven tools determine exactly which goods are in stock and where they are located. When the system detects these markers, the information flows directly into tracking software, keeping physical stock and digital records aligned.
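Because misreads are possible, systems that decode barcodes or OCR product numbers typically validate them before updating stock. One simple, standard check is the GS1 check digit used by GTIN-8/12/13/14 (EAN/UPC) codes, sketched below; where such validation sits in any particular product is an assumption.

```python
def gtin_check_digit(payload: str) -> int:
    """Compute the GS1 check digit for a GTIN payload (every digit except the last)."""
    total = 0
    for i, ch in enumerate(reversed(payload)):
        weight = 3 if i % 2 == 0 else 1   # weights alternate 3, 1, 3, ... from the right
        total += int(ch) * weight
    return (10 - total % 10) % 10


def is_valid_gtin(code: str) -> bool:
    """Validate a GTIN-8/12/13/14 string read by a barcode decoder or OCR."""
    if len(code) not in (8, 12, 13, 14) or not all(c in "0123456789" for c in code):
        return False
    return gtin_check_digit(code[:-1]) == int(code[-1])
```

A single misread digit changes the weighted sum and almost always fails the check, so the record can be re-scanned instead of silently corrupting the inventory.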

Example: KoiReader, a vision-based system built on NVIDIA AI technology and deployed at PepsiCo, reads warehouse labels and barcodes at high speed, eliminating the need for manual scanning. It uses AI to read labels in unusual orientations and with partial occlusions. Such systems update digital inventories immediately whenever items are added or removed, and can trigger restocking automatically, without human involvement, when stock falls below set thresholds.

CV-Enabled Shelf Scanning

CV-enabled shelf scanning with fixed or mobile devices is not new. Some warehouses use autonomous robots and camera-mounted forklifts that roam the aisles and scan the shelves periodically. These mobile vision units check whether an item is out of place or a slot is empty and report it for correction. Many modern solutions use edge computing, performing the image processing (the actual detection and counting) inside the camera or at least on local hardware rather than in the cloud. This matters when the system serves high-speed conveyor belts or must update inventory very frequently, because avoiding cloud round trips makes the system far more responsive. Gartner forecasts that by 2027, 50% of companies with warehouse operations will leverage AI-enabled vision systems to replace manual barcode-based cycle counting.
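The shelf-scanning check can be reduced to comparing what the detector saw in each slot with what the warehouse expects there. `EXPECTED` and the slot/SKU identifiers are hypothetical, and the sketch assumes an upstream detector has already assigned each detected item to a slot.

```python
# Hypothetical expected layout: slot ID -> SKU that should be stored there.
EXPECTED = {"A1-01": "SKU-100", "A1-02": "SKU-200", "A1-03": "SKU-300"}


def audit_slots(detections, expected=EXPECTED):
    """Compare per-slot detections with the expected layout.

    detections maps slot ID -> list of SKUs the detector saw in that slot.
    Returns (slot, issue) tuples for slots that need correction.
    """
    issues = []
    for slot, sku in expected.items():
        seen = detections.get(slot, [])
        if not seen:
            issues.append((slot, "empty"))
        elif any(s != sku for s in seen):
            issues.append((slot, "misplaced item"))
    return issues
```

Running this on the edge device after each pass means only the short issue list, rather than raw video, has to be sent to the WMS.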

Inventory Drones and Aerial Vision

To reach hard-to-access areas of stored inventory, more companies are turning to drones and aerial imaging. These flying units move autonomously along warehouse aisles to scan inventory on high racks and in yards. Drones can follow programmed flight paths and use CV to capture and identify pallet labels and to count stock from above. Importantly, drones improve safety and efficiency by reaching the upper rack levels and eliminating manual work on lifts and ladders. From thirty feet up, a drone captures detailed images of the goods, and algorithms then process those images, counting boxes or reading codes, while workers stay on the ground.

Example: Startups such as Gather AI and Corvus Robotics build drones that connect directly to warehouse software. These flying units carry vision systems that can detect barcodes, printed numbers, and expiry labels. They can also estimate how many boxes sit behind the visible front layer by analyzing only that front layer. In this way, wall-mounted cameras, ground robots, and drones take over the job of monitoring stock. Accuracy improves sharply because updates arrive as soon as changes occur, and misplaced goods are found quickly. Ultimately, orders move through the system faster thanks to reliable data at each step.

Supply Chain Monitoring and Optimization

Computer vision data is also extending beyond warehouse walls, increasing visibility and supporting better decisions at every stage of the supply chain. One prominent application is tracking goods as they move through the various operational stages of transport.

Once goods have arrived at a fulfillment center and been assigned to a truck, CV systems at distribution hubs and delivery points can verify and record the shipment's status at multiple transit points. At delivery points, for instance, CV can confirm that the right shipment is being loaded onto or unloaded from the truck by recognizing its label. The technology can also read the trailer ID or license plate and record timestamps to pinpoint delays in the loading process. Operations managers can use this information to improve yard traffic flow and to verify that the full load is on the truck, preventing costly shipping errors.

Example: Zetes has developed a vision system that photographs every loaded pallet, decodes multiple barcodes, and cross-references them with the digital manifest; the system alerts operators to any missing or misloaded boxes in real time.
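The manifest cross-check described in the Zetes example boils down to a multiset comparison between decoded barcodes and the manifest. This is a generic sketch, not Zetes' implementation; it assumes the barcodes have already been decoded into strings.

```python
from collections import Counter


def reconcile(scanned, manifest):
    """Compare barcodes decoded from a pallet image with the shipment manifest.

    Both arguments are lists of barcode strings; duplicates are counted, so two
    identical cartons on the manifest require two matching scans.
    Returns (missing, unexpected) as Counters.
    """
    scanned_c, manifest_c = Counter(scanned), Counter(manifest)
    missing = manifest_c - scanned_c       # on the manifest but not seen on the pallet
    unexpected = scanned_c - manifest_c    # seen on the pallet but not on the manifest
    return missing, unexpected
```

A non-empty `missing` or `unexpected` result is what would trigger the real-time operator alert.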

Deep Learning Anomaly Detection

Another contribution of computer vision is monitoring the condition of goods in transit and identifying problems. Cameras at individual checkpoints or along continuous stretches of the package flow can perform integrity and stability checks. CV models can determine whether a box's orientation has changed or whether a seal has been broken (an indicator of tampering), and report these findings along the delivery route. At vision-enabled terminals, packages pass through systems that detect dents and punctures, alongside X-ray inspection and water-stain detection. When such a system finds a problem with a package, it can divert the package from the flow for inspection so that damaged products are not sent to customers.

What's more, some modern delivery trucks have interior cameras that periodically monitor the cargo. If a CV system detects that a box has shifted, it can alert the driver or the logistics system that the box may have been damaged. This is a good application of deep learning anomaly detection: trained on sets of normal and damaged parcels, such a system can spot subtle damage that a human would likely miss at operational speed.
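One common way to turn a model's per-parcel output into alerts is to calibrate a threshold on known-good parcels. The sketch below swaps in this simple statistical rule: the anomaly score is assumed to come from an upstream model (for example, an autoencoder's reconstruction error), and k = 3 is an illustrative choice, not a recommendation.

```python
import statistics


def fit_threshold(normal_scores, k=3.0):
    """Set the alert threshold at mean + k * standard deviation of known-good scores."""
    mu = statistics.mean(normal_scores)
    sigma = statistics.stdev(normal_scores)
    return mu + k * sigma


def flag_anomalies(scores, threshold):
    """Return the indices of parcels whose anomaly score exceeds the threshold."""
    return [i for i, s in enumerate(scores) if s > threshold]
```

When labeled damaged examples are also available, as the paragraph above describes, the threshold can instead be tuned to trade off missed damage against false alarms on a validation set.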

Example: FedEx employs computer vision technologies in its facilities to assess packages currently in transit for damage. If the technology identifies a problem, the package can be rapidly set aside for repacking or replacing to avoid delays.

Predictive Analytics and Operational Intelligence

The image streams that CV systems capture throughout the supply chain also enable predictive capabilities. Event logs built on computer vision output document every observed detail and can be used to identify recurring damage patterns within the supply chain. For example, a computer vision system might detect an unusually high number of damaged boxes being unloaded from trucks on a certain route. Analytics could then help uncover the cause: a packing defect, inadequate load securing on that route, or gaps in training for the drivers assigned to it.

Predictive models built on computer vision metrics can also help anticipate route disruptions. Metrics such as the frequency of misloaded pallets or the average loading dwell time per truck can be used to predict delays or missed targets. Supply chain control towers combine CV data to provide visibility and predictability, so managers can see the exact status and location of every parcel and anticipate disruptions. The shift from manual observation to computer vision-based data capture is growing rapidly across the industry, with better route predictions and supply chains more closely aligned with demand as additional benefits.

Package Inspection and Damage Detection

Before goods reach customers, logistics companies need to confirm that each package is correctly labeled, intact, and compliant with all requirements. CV-enabled automated parcel inspection detects external damage and other defects much faster and more consistently than manual inspection. Along conveyor belts and sorting lines, high-speed camera arrays capture each parcel from multiple angles. Algorithms then classify each image and detect common damage types such as open flaps, dents, bulges, tears, and water stains, as well as other surface anomalies that may indicate damage.

Example: DHL employs a vision-based inspection system (developed with Cognex) that uses a combination of 2D and 3D cameras to spot issues in real time. As soon as a box with a dent or a popped flap is recognized, the system can automatically divert it off the main line. This prevents a damaged parcel from being shipped out and avoids the complexity of retrieving it later in the process. It also keeps conveyor operations flowing smoothly by removing items that could jam machines (e.g., it helps ensure a severely deformed box doesn't get stuck in an automated sorter).

3D Vision for Damage Detection

Damage detection has been improved through the use of 3D technology. Bulges on the sides of a box and crushed corners may not be visible in a single 2D photo. Stereo vision and other 3D cameras provide depth perception, enabling the inspection system to determine whether the package still has the expected cuboid shape.

Combining this 3D check with high-resolution 2D imaging enables the detection of small surface damage, such as pinholes, scratches, worn edges, or flaps that have come open. After a package is flagged as suspicious, the line can automatically reject it or reroute it to an operator. Early damage detection gives the operator the opportunity to repackage or readdress the item and still deliver it on time. The item's record can also be annotated to support follow-up.
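A simplified version of the 3D cuboid check might compare a top-down height map with the expected box height. The sketch assumes a depth camera mounted a known distance above the belt, a depth map already cropped to the parcel's top face, and a known nominal box height (for example, from the WMS); `heights_from_depth`, `inspect_top_face`, and the 5 mm tolerance are hypothetical names and values.

```python
def heights_from_depth(depth_mm, camera_to_belt_mm):
    """Convert a top-down depth map (camera-to-surface, mm) into heights above the belt."""
    return [[camera_to_belt_mm - d for d in row] for row in depth_mm]


def inspect_top_face(heights_mm, expected_height_mm, tolerance_mm=5.0):
    """Classify a parcel's top face as 'ok', 'dent', or 'bulge'.

    A dent is any point sitting more than tolerance_mm below the expected flat top;
    a bulge is any point sitting more than tolerance_mm above it.
    """
    values = [h for row in heights_mm for h in row]
    if expected_height_mm - min(values) > tolerance_mm:
        return "dent"
    if max(values) - expected_height_mm > tolerance_mm:
        return "bulge"
    return "ok"
```

A production system would also segment the parcel from the belt and fit planes to every visible face, but the principle is the same: deviations from a flat cuboid surface signal physical damage that a single 2D photo can miss.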

Label Verification and Integrity Checks

In addition to detecting physical damage, CV systems now also verify labels and package integrity. As parcels move rapidly through an automated sorter, their shipping labels are photographed, and the information is extracted through OCR (optical character recognition) and barcode decoding.

CV inspection innovations have begun to diffuse into the industry at scale. FedEx, for instance, has developed damage-detection vision systems for the initial hub check-in and during sortation, enabling active interception of damaged parcels. The company then takes appropriate action, either patching or reboxing damaged parcels, to avoid customer dissatisfaction. Furthermore, FedEx retains such records for insurance purposes or to highlight problematic routes.

Similarly, in Amazon's fulfillment centers, packages pass through smart conveyor tunnels whose cameras capture a rapid 360-degree view of each box. CV algorithms confirm the barcode, report whether the box has been opened or has loose flaps, and check its size and shape to catch oversized, undersized, or overstuffed packages. In other words, CV inspections enforce logistics quality control by ensuring that outgoing packages are in good condition, preventing customer disappointment and damage to the brand's image.

CV-Equipped Autonomous Vehicles and Robotics

Autonomous vehicles and robots rely on several specialized CV technologies:

- Stereo vision uses two images captured by slightly offset cameras to provide depth perception, which is critical for determining how far away an obstacle is and for navigating a safe path around it. Some automated forklifts and shelf scanners use stereo vision to locate a pallet and position their forks correctly for load pickup.

- Time-of-flight cameras measure the distance to an object by calculating how long emitted light takes to return. They provide depth information, work well in low-light conditions, and can generate distance maps of complex scenes almost instantly, helping a robot navigate a cluttered area of a poorly lit warehouse.

- Visual tracking of a moving entity (e.g., a walking person or an automated robot) is also used in autonomous systems to predict and prevent collisions with the tracked entity. Combining multi-object tracking with multiple vision inputs yields rich situational awareness. For example, the vision system of an autonomous delivery vehicle can simultaneously detect and classify objects, track them, and plan a path in real time. (This multitasking is generally performed by deep neural networks running on powerful GPUs, handling tasks such as road sign recognition, lane detection for outdoor delivery bots, and drivable surface segmentation.)
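Both depth techniques in the list reduce to short formulas. For a rectified stereo pair, depth is Z = f * B / d (focal length in pixels times the camera baseline, divided by the disparity in pixels); for direct time-of-flight, distance is c * t / 2 because the light travels to the object and back. Many commercial ToF cameras actually infer t indirectly from the phase shift of modulated light, which this sketch does not model, and the example numbers are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def tof_distance(round_trip_s):
    """Distance in meters from a round-trip light time: d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0
```

For example, a 700-pixel focal length, a 12 cm baseline, and a 42-pixel disparity give a depth of 2 m, and a 20-nanosecond round trip corresponds to roughly 3 m. The stereo formula also shows why depth precision degrades with distance: far objects have small disparities, so a one-pixel error matters more.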

Amazon warehouses use many mobile robots, including Proteus and the Kiva-derived drive units, to transport shelves and products; these robots use downward-facing cameras and front-facing depth cameras to make autonomous decisions while a central control system coordinates their movements. In these and many other cases, CV algorithms serve as the vehicle's continuous "vision," enabling autonomy.

Such information enables the robot to see and recognize obstacles. For instance, it can differentiate a person from a pallet and respond correctly, stopping and yielding to a person while simply detouring around a static box. CV-based navigation typically uses trained models to detect the most common objects in the warehouse environment, such as people, forklifts, and pallets.

Real-Time Tracking and Analytics

Delivering actionable insights from real-time monitoring is one of the main benefits of CV in the logistics industry, and logistics companies increasingly use computer vision to extract such insights from the visual data their cameras capture.

In warehouses and distribution centers, for instance, computer vision can track the position and identity of each parcel in real time as it moves through high-speed sorting, following it across a network of cameras. Managers can log into the system and see, for example, that package X is currently in zone Y on conveyor lane three, en route to a truck for loading.

The system can alert staff to any diversion or unexpected activity by tracking the flow of packages through the facilities. The real-time insights from computer vision systems ensure issues are captured and resolved on the spot, rather than hours later due to delays in reporting them to a manager.

Vision-Based Forklift Tracking

With real-time visibility into stock levels, discrepancies, and quality issues, problems can be fixed as they arise rather than after the fact, because companies no longer have to rely on periodic scans or counts. This transparency supports more accurate forecasting and improves customer service. For example, downstream delivery estimates can be adjusted proactively when a delay is identified in real time.

This automated process feeds information to analytical dashboards designed for warehouse managers and industrial engineers, who use them for layout and personnel planning. For instance, if a real-time heatmap shows one loading dock congested with pallets while another is idle, managers can quickly move staff to the congested dock or adjust the schedule of incoming trucks. Vision-based forklift tracking also improves safety in real time: alerts can be sent to the floor manager and the driver when a forklift approaches a zone with many people. In fully automated facilities, such alerts could activate safety devices, such as a warning light, a temporary barrier, or an automated zone controller, when people enter a zone used by industrial robots.

Real-Time Digital Twins

Because low latency is essential, much of this processing is best done at the edge or on local networks. Edge-based CV systems can aggregate and visualize video feeds and data locally, without routing them through a separate integration layer. More and more warehouses now operate real-time digital twins: software models that mirror the current state of the physical warehouse. Computer vision is a primary data source for these digital twins, continuously populating the model with data on items, their storage locations, operating machines, and worker movements.

Example: Gather AI uses drones with cameras to build a near-real-time digital twin of a warehouse for inventory management. The system's time-stamped dashboard identifies inventory gaps relative to the warehouse's WMS so that operators can correct inventory records promptly. Users can view real-time video and annotated images. More generally, a CV system might track and highlight each parcel in real time as it moves down an automated lane in a distribution center and keep a per-minute tally of processed items. The system reflects the lane's operational state and triggers an alert when a slowdown or blockage occurs.

Collision alarms are also programmed for emergencies (e.g., CV can detect when someone walks into the path of an automated machine and trigger a loud warning or a buzzing alarm on a wearable device). CV is integrated with warehouse management systems through APIs that report events (e.g., "unauthorized worker in zone 5"). These events are sent directly to managers' log databases or to the messaging platforms they use.

KPI Tracking

Real-time CV analysis also provides insight into higher-level KPIs and supports managerial decision-making. Because vision systems capture detailed information, including which items are handled and every action performed, machine learning systems can recognize patterns and perform predictive analysis. For instance, over time, CV data may reveal a specific time of day when orders consistently back up at the packing and shipping stations, and managers may choose to adjust shift patterns or add automation at those stations. In delivery and transportation, live footage from vehicle cameras covering the driver and the road can be analyzed for compliance with safety policies, and facial expression and gaze detection systems can raise an alert when a driver seems distracted or shows signs of fatigue.

These alerts can be sent to a driver's dashboard, which also serves as a real-time communication channel with fleet managers. A CV system can also use the same camera network for both safety and operational efficiency. For example, an AI surveillance system can detect an individual in a restricted area of a warehouse after hours and, if high-value items are stored there, issue a security alert and record any resulting goods movement in the inventory system.
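The driver-fatigue alerts mentioned above can be illustrated with two published, landmark-based measures: the eye aspect ratio (EAR) and PERCLOS, the fraction of time the eyes are closed. This is a swapped-in illustration, not a description of any specific vendor's system. It assumes an upstream face-landmark model already supplies six (x, y) points per eye, and the 0.2 EAR and 30% PERCLOS limits are hypothetical values that would need tuning.

```python
import math


def eye_aspect_ratio(eye):
    """EAR from six eye landmarks p1..p6 given as (x, y) tuples.

    p1 and p4 are the eye corners; (p2, p6) and (p3, p5) are upper/lower lid pairs.
    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def perclos(ear_values, closed_threshold=0.2):
    """Fraction of frames in the window in which the eye is judged closed."""
    closed = sum(1 for e in ear_values if e < closed_threshold)
    return closed / len(ear_values)


def fatigue_alert(ear_values, closed_threshold=0.2, perclos_limit=0.3):
    """Hypothetical rule: alert when eyes are closed in more than 30% of recent frames."""
    return perclos(ear_values, closed_threshold) > perclos_limit
```

Using a window of frames rather than a single frame keeps normal blinks from triggering alerts, while prolonged or frequent eye closure does.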

CV is one of the most interesting and far-reaching subsets of AI. Combined with logistics technologies, it forms an intelligent analytical loop that provides fine-grained data. Complemented by advanced analytics, CV makes supply chain functions transparent and allows them to be adjusted in real time. Firms that extract these real-time CV insights can proactively and continuously refine their safety processes and workflows instead of relying on repetitive, after-the-fact reviews.

Want to integrate CV in your warehouse? Let us help you do it swiftly and painlessly. Contact us today to start building your project asap!

Andriy Lekh
