AI Reasoning for Enterprise Data: An Untapped Potential

The world's leading tech companies are competing to advance innovative applications of AI. They are focused on enhancing LLMs that mimic human reasoning, pioneering advancements in NLP, image creation, and coding, as well as developing systems capable of integrating multimodal data such as text, images, and videos.

This progress is laying the foundation for a new technological era, where cutting-edge AI capabilities are accessible to organizations at every level. Today, we'll explore how AI reasoning powers data-driven decision-making for businesses.

What is Reasoning in AI?

Reasoning in AI refers to the ability of machines to make predictions, inferences, and informed conclusions. It involves structuring data in a way that machines can interpret and applying logical methods, such as deduction and induction, to arrive at decisions.

Reasoning AI models are especially valuable in complex, high-stakes scenarios that require deep analysis and creative solutions. With inference-time computing, where extra computation is spent while the model produces its answer, AI systems pause to analyze data, consider potential outcomes, and apply logical methods to tackle intricate problems. Although this approach demands more computational resources, it tends to deliver more meaningful and insightful outcomes.

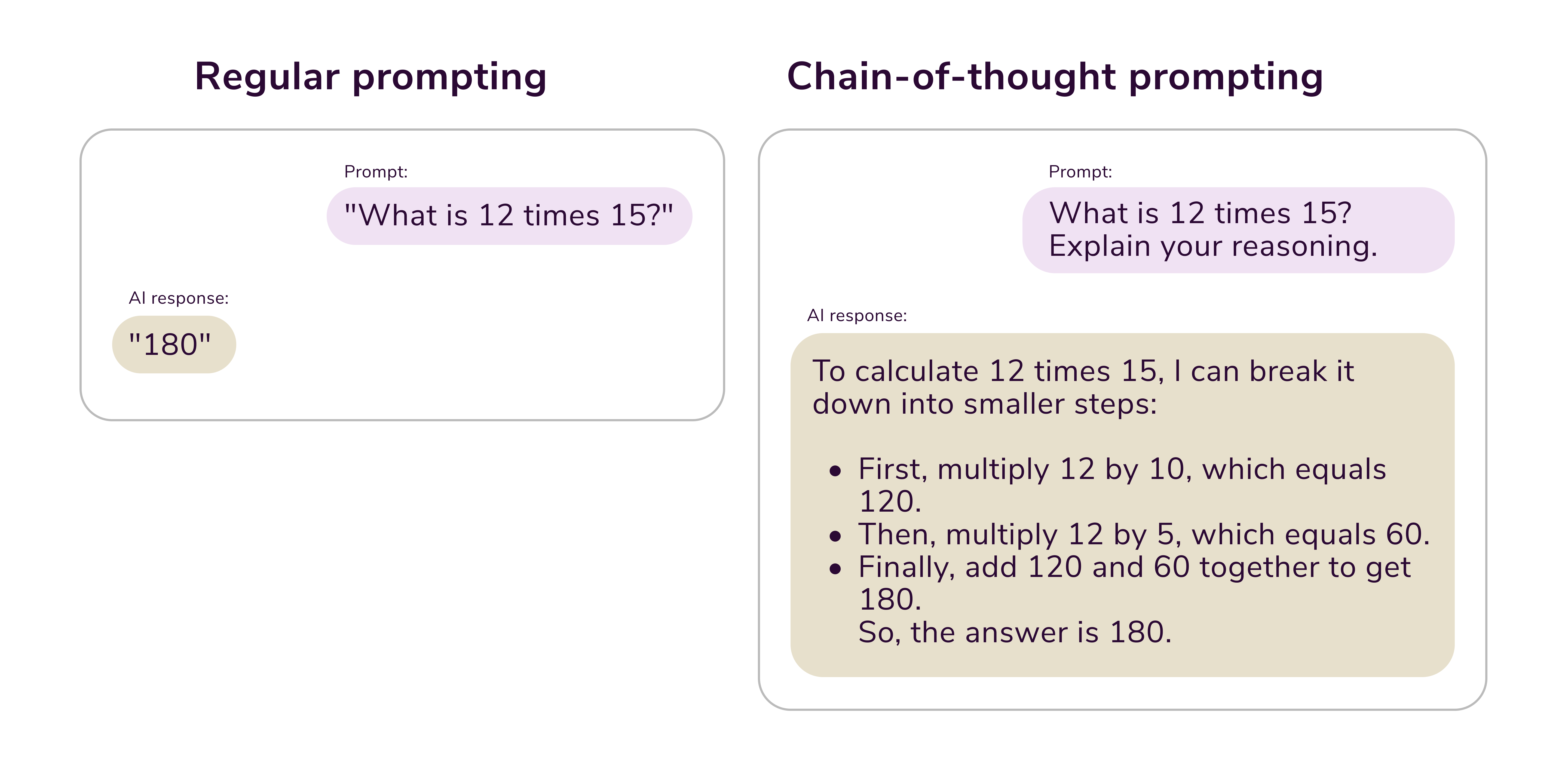

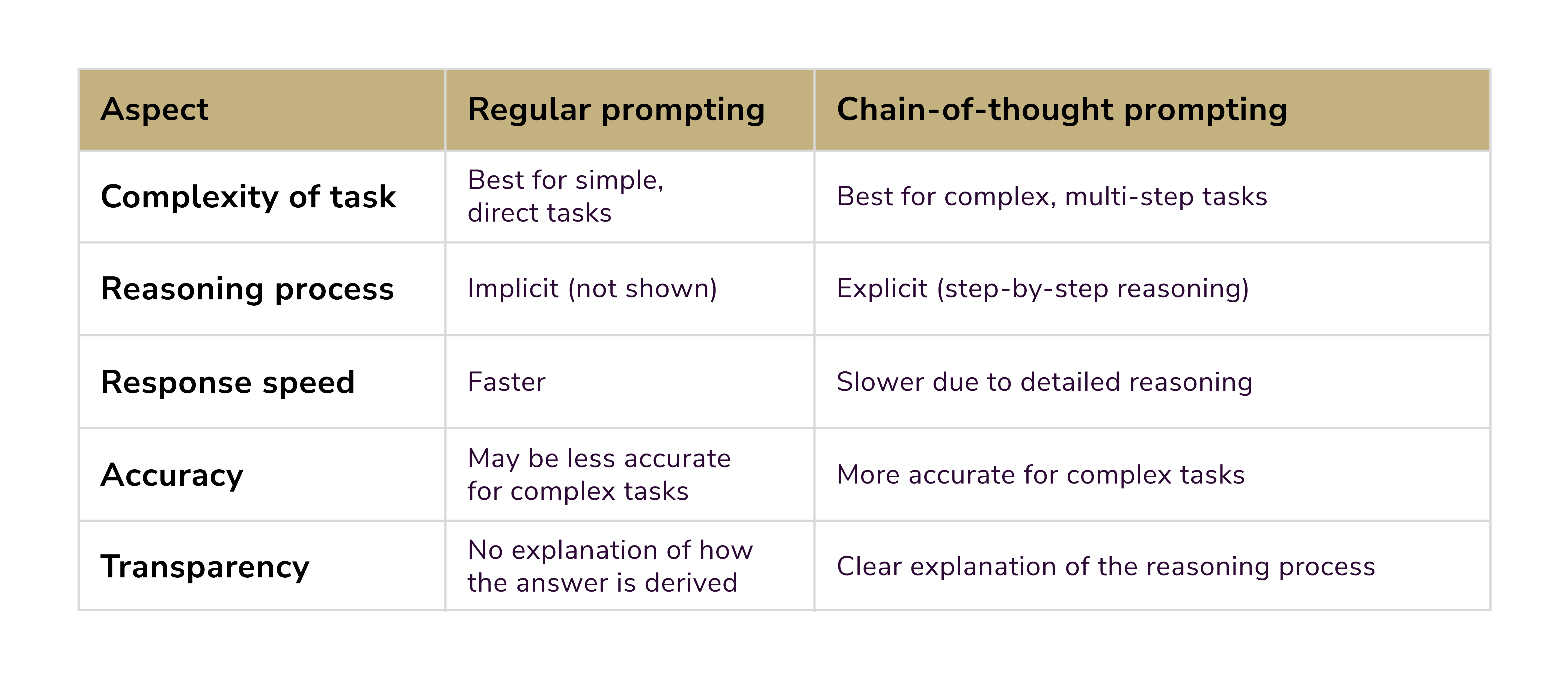

AI reasoning uses chain-of-thought prompting to break down tasks into small logical steps. Compare the two outputs:

Conventional LLMs excel in comprehending human language and providing straightforward responses to basic queries. Meanwhile, reasoning models (RMs) demonstrate their strength in deconstructing intricate problems into smaller, manageable parts using explicit logical reasoning. This capability is pivotal to developing AI systems that can truly comprehend and interact with the world in a contextually appropriate and meaningful way.

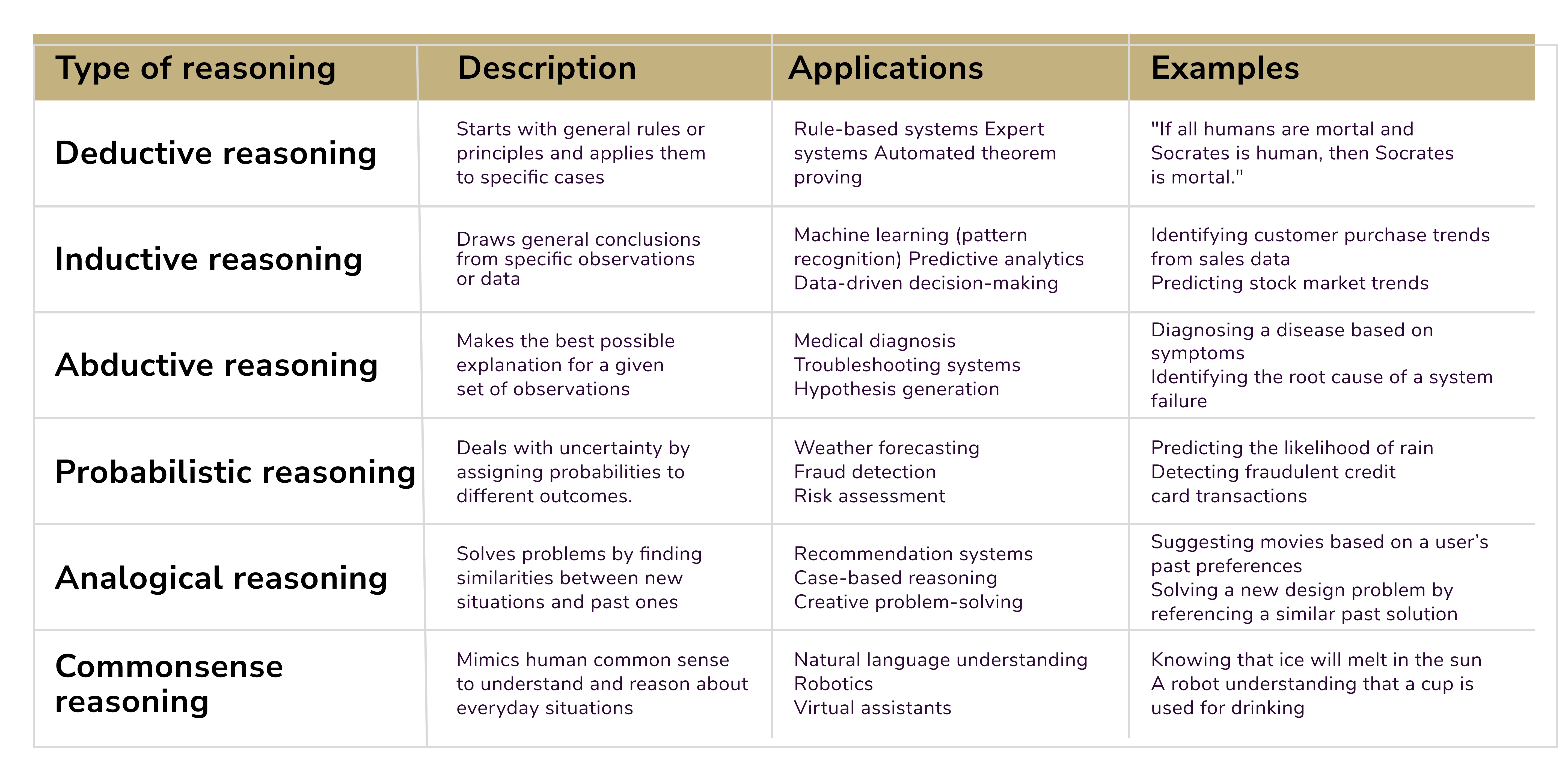

Types of Reasoning in AI

Let's delve deeper into the types of reasoning in AI, examples, and practical use cases.

Abductive Reasoning

Abductive reasoning involves forming the most likely explanation for a set of observations. It is often described as "inference to the best explanation."

Example: If a patient exhibits symptoms like fever and sore throat, abductive reasoning could suggest the most probable cause, such as an infection.
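Abduction is often sketched as ranking candidate explanations by how plausible they are and how much of the evidence they account for. The minimal example below assumes a hand-written table of causes, prior plausibility scores, and the observations each cause typically produces. All names and numbers are illustrative placeholders, not medical data, and the scoring rule (prior × fraction of observations explained) is one simple heuristic among many.

```python
# Hypothetical causes, prior plausibility, and the observations each typically explains.
# All values are illustrative placeholders, not medical data.
HYPOTHESES = {
    "viral infection": {"prior": 0.6, "explains": {"fever", "sore throat", "cough"}},
    "strep throat": {"prior": 0.3, "explains": {"fever", "sore throat", "swollen glands"}},
    "allergy": {"prior": 0.4, "explains": {"sneezing", "itchy eyes", "sore throat"}},
}


def best_explanation(observations: set[str]) -> tuple[str, float]:
    """Pick the hypothesis that best explains the observations.

    Score = prior plausibility x fraction of the observations the hypothesis covers.
    """
    scores = {
        name: h["prior"] * len(observations & h["explains"]) / len(observations)
        for name, h in HYPOTHESES.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


print(best_explanation({"fever", "sore throat"}))  # ('viral infection', 0.6)
```

Real diagnostic systems would use calibrated probabilities and far richer evidence; the point here is only the "pick the best explanation" step.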

Use cases:

- Medical diagnosis systems: AI models, such as IBM Watson, employ abductive reasoning to hypothesize the most plausible diagnoses based on symptoms and patient history.

- Fraud detection: Banking systems can use AI to detect and prevent fintech fraud. Abductive reasoning helps spot irregular transaction patterns signaling potentially fraudulent activities.

Agentic Reasoning

Agentic reasoning centers on understanding and predicting the goals, actions, and behaviors of various agents (whether human or machine).

Example: Predicting that a pedestrian will wait at a crosswalk for the signal to change is an example of agentic reasoning.

Use cases:

- Autonomous vehicles: Self-driving cars use agentic reasoning to anticipate the behavior of other vehicles, pedestrians, or cyclists.

- Robots in human environments: Social robots like Pepper leverage agentic reasoning to interact seamlessly with people.

Analogical Reasoning

Analogical reasoning involves solving problems by transferring knowledge from a known situation to a new but similar one.

Example: If dark clouds have preceded rain in one region, analogical reasoning might help infer that dark clouds in a new region could also signal rain.

Use cases:

- Educational tools: AI-powered tutoring platforms use analogical reasoning to help students learn new concepts by referring to familiar examples.

- Transfer learning: AI models trained on one task (e.g., image recognition) can apply insights to related tasks (e.g., video analysis).

Commonsense Reasoning

Commonsense reasoning enables AI to make assumptions based on everyday knowledge. It helps machines understand the practical implications of situations.

Example: Knowing that ice is slippery, commonsense reasoning helps anticipate that running on icy surfaces might lead to a fall.

Use cases:

- Virtual assistants: Assistants like Alexa use commonsense reasoning to interpret incomplete user commands.

- Robotic systems: AI robots use commonsense reasoning to perform tasks such as placing fragile objects carefully on a table.

Deductive Reasoning

Deductive reasoning follows a top-down approach, drawing specific conclusions from general premises that are assumed to be true.

Example: If all humans are mortal and Socrates is a human, then deductive reasoning concludes that Socrates is mortal.
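The syllogism above can be mechanized with forward chaining: repeatedly apply modus ponens to known facts until nothing new follows. This is a minimal sketch; the fact and rule encodings (simple `(predicate, subject)` pairs and `premise -> conclusion` rules) are assumptions made for illustration, not the format of any particular expert-system engine.

```python
# Facts are (predicate, subject) pairs; a rule (p, q) means "every p is a q".
facts = {("human", "Socrates")}
rules = [("human", "mortal")]  # All humans are mortal.


def forward_chain(facts: set[tuple[str, str]], rules: list[tuple[str, str]]) -> set[tuple[str, str]]:
    """Apply modus ponens repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, subject in list(derived):
                if predicate == premise and (conclusion, subject) not in derived:
                    derived.add((conclusion, subject))
                    changed = True
    return derived


print(("mortal", "Socrates") in forward_chain(facts, rules))  # True
```

Because every derived fact follows necessarily from the premises, the conclusion is only as reliable as the rules fed into the engine.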

Use cases:

- Expert systems: Deductive reasoning powers legal and accounting systems like TurboTax, helping users apply general laws or rules to their specific cases.

- Automated verification: AI-powered algorithms use deductive reasoning to validate software correctness in critical applications.

Fuzzy Reasoning

Fuzzy reasoning deals with vague or imprecise concepts, where a statement is not strictly true or false (binary) but true to a degree that falls within a spectrum of values, typically from 0 to 1.

Example: A temperature described as "warm" could range from 20°C to 30°C, depending on the context.
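A common way to encode a vague term like "warm" is a membership function that returns a degree between 0 and 1. The text only gives a rough range, so the breakpoints here are assumptions: membership rises from 0 at 20°C to 1 at 25°C and falls back to 0 at 30°C (a triangular shape, one of several standard choices).

```python
def triangular(x: float, low: float, peak: float, high: float) -> float:
    """Triangular fuzzy membership: 0 outside (low, high), 1 at peak, linear in between."""
    if x <= low or x >= high:
        return 0.0
    if x <= peak:
        return (x - low) / (peak - low)
    return (high - x) / (high - peak)


def warm(celsius: float) -> float:
    # Assumed breakpoints: "warm" starts at 20 °C, peaks at 25 °C, fades out by 30 °C.
    return triangular(celsius, 20.0, 25.0, 30.0)


for t in (18, 22, 25, 28, 31):
    print(t, round(warm(t), 2))
```

With these assumptions, 22°C is "warm" to degree 0.4 and 25°C to degree 1.0; a fuzzy controller would combine such degrees across several rules rather than switching on a single threshold.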

Use cases:

- Smart thermostats: These use fuzzy logic to adjust temperatures by accounting for varying preferences.

- Industrial automation: Fuzzy reasoning enhances decision-making in systems like climate control or robotic arms.

Inductive Reasoning

Inductive reasoning involves making generalizations based on observations or patterns.

Example: Noticing that the sun has risen every morning leads to the generalization that it will rise again tomorrow.

Use cases:

- Predictive analytics: Systems like recommendation engines employ inductive reasoning to suggest products based on user behavior patterns.

- Data mining: Inductive reasoning identifies trends in large datasets, such as detecting sales surges in specific regions.

Neuro-symbolic Reasoning

Neuro-symbolic reasoning combines neural networks (for pattern recognition) with symbolic logic (for reasoning), merging data-driven and rule-based systems.

Example: A neuro-symbolic AI can recognize objects in an image while logically reasoning about their relationships.

Use cases:

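- Visual question answering: Research systems such as the Neuro-Symbolic Concept Learner, developed by researchers at MIT, IBM, and DeepMind, combine neural perception with symbolic program execution to answer questions about images and show how they arrived at an answer.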

- Healthcare: AI systems use it to interpret MRI scans and explain diagnostic decisions.

Probabilistic Reasoning

Probabilistic reasoning uses probability theory to deal with uncertain or ambiguous scenarios.

Example: Calculating the probability of rain based on cloudy weather conditions involves probabilistic reasoning.
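The rain example is a direct application of Bayes' rule, P(rain | cloudy) = P(cloudy | rain) · P(rain) / P(cloudy). The sketch below uses assumed numbers (20% of days are rainy, 90% of rainy days are cloudy, 30% of dry days are cloudy); they are illustrative, not climate data.

```python
def posterior(prior: float, p_evidence_given_h: float, p_evidence_given_not_h: float) -> float:
    """Bayes' rule for a binary hypothesis H after observing evidence E."""
    # Total probability of the evidence: P(E) = P(E|H)P(H) + P(E|not H)P(not H)
    p_evidence = p_evidence_given_h * prior + p_evidence_given_not_h * (1 - prior)
    return p_evidence_given_h * prior / p_evidence


# Illustrative (assumed) inputs.
p_rain_given_cloudy = posterior(prior=0.2, p_evidence_given_h=0.9, p_evidence_given_not_h=0.3)
print(f"P(rain | cloudy) = {p_rain_given_cloudy:.2f}")  # P(rain | cloudy) = 0.43
```

Observing clouds roughly doubles the estimated chance of rain here (from 0.20 to about 0.43), which is the kind of belief update probabilistic reasoning performs.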

Use cases:

- Weather prediction: AI-powered systems forecast meteorological patterns based on historical data.

- Autonomous systems: Autonomous robots calculate the likelihood of success for a specific action before executing it.

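- Bayesian networks: These probabilistic graphical models can be built from data, expert opinion, or both, and are used to reason about how uncertain variables influence one another.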

Spatial Reasoning

Spatial reasoning deals with understanding and manipulating spatial concepts, such as distances, directions, and orientations.

Example: Determining the shortest route to a destination involves spatial reasoning.
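Shortest-route finding is classically solved with Dijkstra's algorithm over a weighted graph. The road network and distances below are hypothetical; production route planners add traffic, time windows, and vehicle constraints on top of this basic idea.

```python
import heapq


def shortest_path(graph: dict[str, dict[str, float]], start: str, goal: str) -> tuple[float, list[str]]:
    """Dijkstra's algorithm: return (total distance, path) from start to goal."""
    queue = [(0.0, start, [start])]  # (distance so far, node, path taken)
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)  # closest unexplored node first
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (dist + weight, neighbor, path + [neighbor]))
    return float("inf"), []  # goal unreachable


# Hypothetical road network with distances in km.
roads = {
    "Depot": {"A": 4, "B": 1},
    "B": {"A": 2, "C": 5},
    "A": {"C": 1},
    "C": {},
}
print(shortest_path(roads, "Depot", "C"))  # (4.0, ['Depot', 'B', 'A', 'C'])
```

The direct-looking route Depot → A → C (5 km) loses to the detour through B (4 km), which is exactly the kind of non-obvious result spatial reasoning is meant to surface.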

Use cases:

- Augmented Reality (AR): Spatial reasoning powers AR applications, helping overlay virtual elements on real-world environments.

- Logistics optimization: AI systems, such as route planners for delivery trucks, depend on spatial reasoning.

Temporal Reasoning

Temporal reasoning relates to understanding and reasoning about time-dependent data or sequences of events.

Example: Recognizing that water heated half an hour too early will have cooled by dinnertime is an example of temporal reasoning.

Use cases:

- Time series analysis: AI predicts future stock prices or demand levels by analyzing time-based trends.

- Healthcare: Temporal reasoning helps monitor and analyze patient data for detecting medical emergencies based on changing conditions over time.

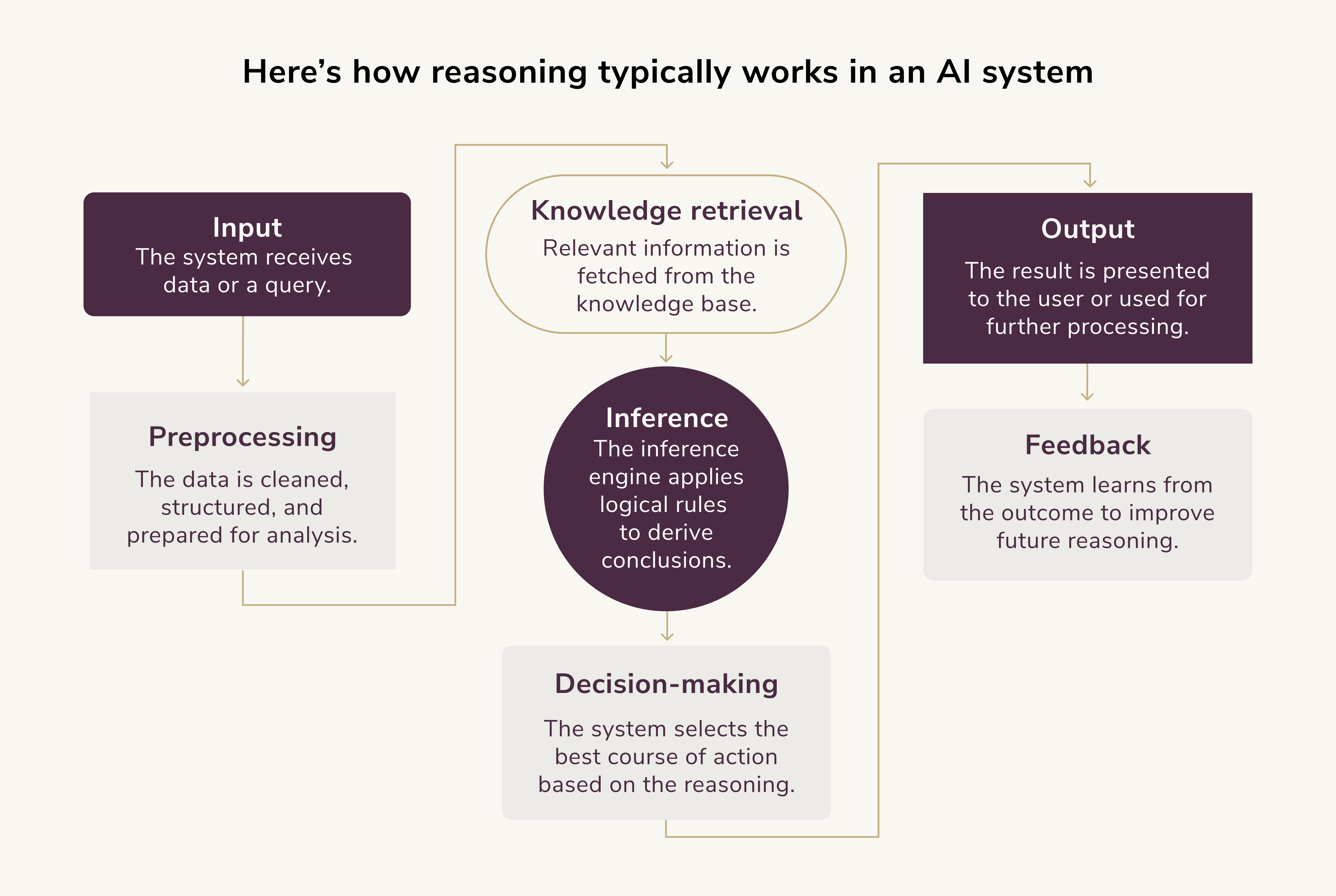

How Does Reasoning in AI Work?

AI reasoning relies on three core components: knowledge representation, logical inference, and machine learning integration.

Knowledge Representation

AI systems require structured frameworks to store, retrieve, and apply information. Knowledge representation encodes data into formats such as semantic networks, ontologies, and symbolic logic. These frameworks equip AI reasoning engines with the means to understand context, interpret relationships between data points, and apply learned knowledge effectively. Without this foundational structure, AI problem-solving lacks depth, leading to inaccurate predictions and unreliable conclusions.

Logical Inference

Structured logical inference is fundamental to how AI processes data and reaches conclusions. AI employs various reasoning methods, such as the deductive, inductive, abductive, and probabilistic reasoning described above.

These methodologies provide AI systems with robust frameworks for problem-solving, minimizing errors in complex decision-making processes.

Machine Learning Integration

By integrating machine learning models, AI reasoning systems refine their outputs using past data and emerging trends. Traditional rule-based reasoning relies on predefined logic, whereas ML enables AI to adapt to new patterns and inputs. This adaptability makes it practical to analyze vast datasets, uncover correlations, and improve predictive accuracy, which empowers AI to solve problems across diverse industries.

The Role of Reinforcement Learning in AI Reasoning

Reinforcement learning (RL) plays a pivotal role in enhancing reasoning models by letting them improve their decision-making through trial and error. By interacting with an environment, performing actions, and receiving feedback in the form of rewards or penalties, these models iteratively refine their strategies to maximize cumulative reward. For instance, a reasoning model tasked with solving a puzzle might experiment with different approaches, earning rewards for efficient solutions and updating its process based on what yields better outcomes. RL techniques such as Q-learning or policy gradients balance exploring new strategies against exploiting proven ones, which enables reasoning models to adapt dynamically.

In robotic navigation, for example, a robot in a maze earns rewards for reaching the goal and incurs penalties for colliding with obstacles. Over many trials, the robot improves by correlating actions with results. Its policy, often represented as a neural network, maps sensory inputs to optimal movements. This iterative process makes RL particularly valuable for scenarios where explicit rules or labeled data are unavailable, because the model learns directly from hands-on experience.
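The maze scenario can be sketched with tabular Q-learning, a simpler stand-in for a neural-network policy. Everything here is an assumption chosen for illustration: the 4×4 layout, the rewards (+10 at the goal, -1 for bumping into a wall or obstacle, -0.1 per step), and the hyperparameters. An epsilon-greedy rule handles the explore/exploit trade-off mentioned above.

```python
import random

# Hypothetical 4x4 maze: S = start, G = goal, # = obstacle, . = free cell.
MAZE = [
    "S.#.",
    "..#.",
    "....",
    "#..G",
]
START = (0, 0)
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    r, c = state
    dr, dc = ACTIONS[action]
    nr, nc = r + dr, c + dc
    # Leaving the grid or hitting an obstacle: stay in place and take a penalty.
    if not (0 <= nr < len(MAZE) and 0 <= nc < len(MAZE[0])) or MAZE[nr][nc] == "#":
        return state, -1.0, False
    if MAZE[nr][nc] == "G":
        return (nr, nc), 10.0, True  # reward for reaching the goal
    return (nr, nc), -0.1, False  # small step cost favors shorter routes


def greedy(q, state):
    """Action with the highest estimated value in `state` (exploitation)."""
    return max(ACTIONS, key=lambda a: q.get((state, a), 0.0))


def train(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {}  # (state, action) -> estimated discounted return
    for _ in range(episodes):
        state = START
        for _ in range(100):  # cap episode length
            action = rng.choice(list(ACTIONS)) if rng.random() < epsilon else greedy(q, state)
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q.get((nxt, a), 0.0) for a in ACTIONS)
            old = q.get((state, action), 0.0)
            # Q-learning update: move toward reward + discounted best future value.
            q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
            state = nxt
            if done:
                break
    return q


def greedy_path(q, max_steps=20):
    """Follow the learned policy from START without exploration."""
    state, path = START, [START]
    for _ in range(max_steps):
        state, _, done = step(state, greedy(q, state))
        path.append(state)
        if done:
            break
    return path


print(greedy_path(train()))
```

The per-step cost is a mild form of the denser feedback discussed next; with only the goal reward, early episodes would wander much longer before any learning signal arrives.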

However, implementing RL in reasoning poses challenges. One common issue is sparse rewards: feedback arrives infrequently, which slows down the model's learning. For example, when solving complex math problems, the model might receive a reward only for the correct final answer, which makes it hard to tell which intermediate steps contributed to success. Incremental rewards for achieving subgoals and actor-critic methods may help address this issue.

The Importance of AI Reasoning for Enterprises

With enterprise challenges growing more intricate, the ability to simply search for data or generate content is no longer sufficient. Complex and high-stakes problems demand deliberate, creative, and thoughtful approaches. AI systems must pause, analyze, and draw conclusions in real time.

Businesses need AI to evaluate different scenarios, consider possible outcomes, and use logical methods to make informed decisions. This process, known as "inference-time computing," requires greater computational effort but leads to deeper and more meaningful insights. This advanced capability marks a turning point in AI's evolution, making it a powerful ally for solving the increasingly complex problems faced by enterprises today.

The top companies are working relentlessly to deliver AI software essential for businesses and individuals alike. Yet, LLMs' greatest untapped potential lies in advanced AI reasoning for enterprise-level data.

AI reasoning enables LLMs to assist in context-aware recommendations, data insights, process optimizations, compliance, and strategic planning.

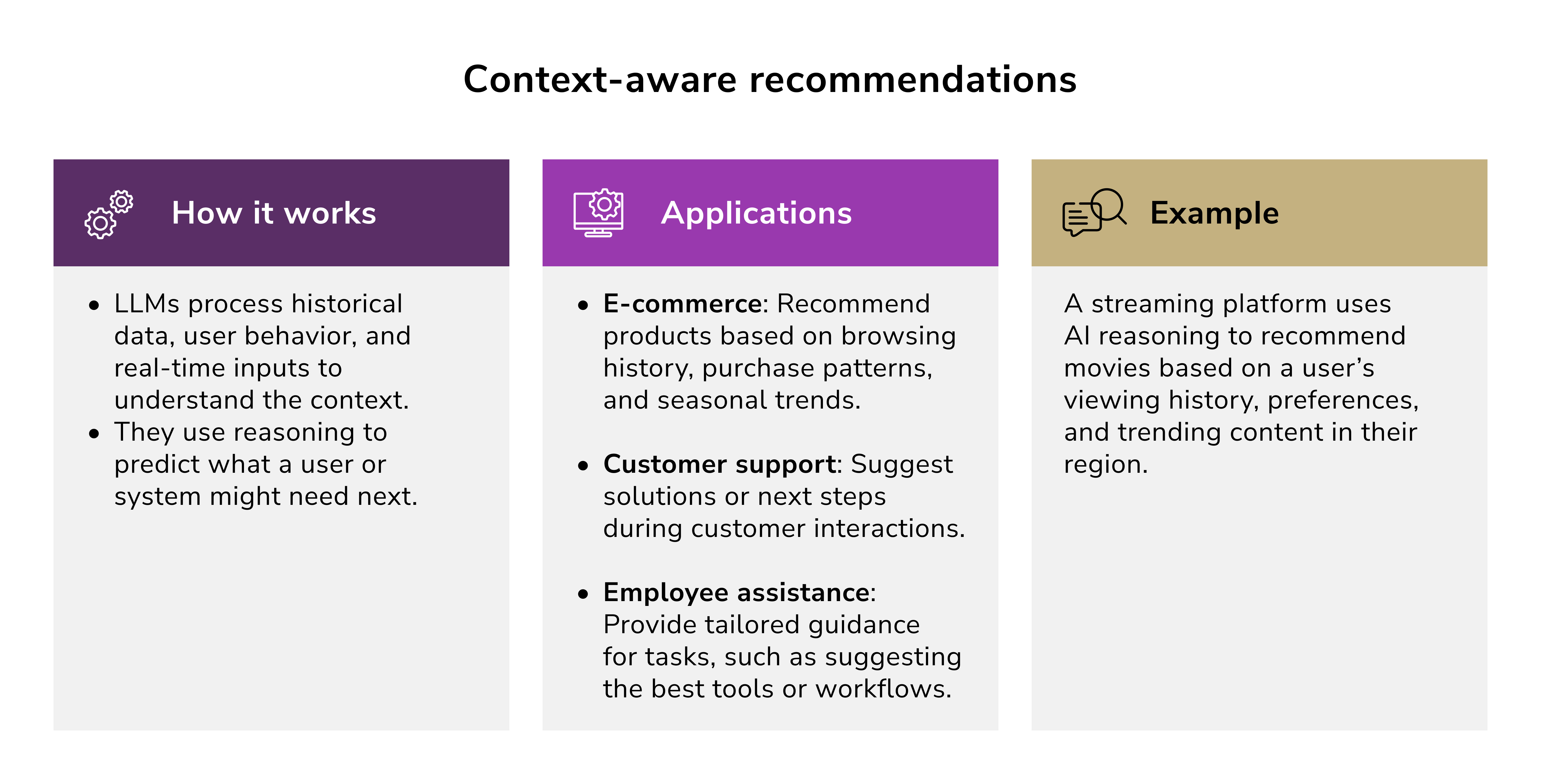

Context-Aware Recommendations

LLMs access a company's product catalog, customer profiles, and interaction history. The system uses inductive reasoning to identify patterns and analogical reasoning to suggest similar products or services. For example, if a customer buys a smartphone, the LLM might recommend accessories like cases or screen protectors.
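The pattern-finding step behind the smartphone example can be illustrated with a classic co-purchase count: products that often appear in the same order are recommended together. This is a minimal, non-LLM sketch on a hypothetical order history; a real system would combine such signals with customer profiles and catalog context.

```python
from collections import Counter
from itertools import permutations

# Hypothetical order history: each order is the set of products bought together.
orders = [
    {"smartphone", "phone case", "screen protector"},
    {"smartphone", "phone case"},
    {"smartphone", "charger"},
    {"laptop", "laptop sleeve"},
    {"smartphone", "screen protector", "phone case"},
]

# Count how often each ordered pair of products appears in the same order.
co_counts = Counter()
for order in orders:
    for a, b in permutations(order, 2):
        co_counts[(a, b)] += 1


def recommend(product: str, k: int = 2) -> list[str]:
    """Return the k products most frequently bought together with `product`."""
    candidates = [(b, n) for (a, b), n in co_counts.items() if a == product]
    candidates.sort(key=lambda item: (-item[1], item[0]))  # by count, then name for stable ties
    return [b for b, _ in candidates[:k]]


print(recommend("smartphone"))  # ['phone case', 'screen protector']
```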

The business value it brings:

- increases customer satisfaction and retention

- boosts cross-selling and upselling opportunities.

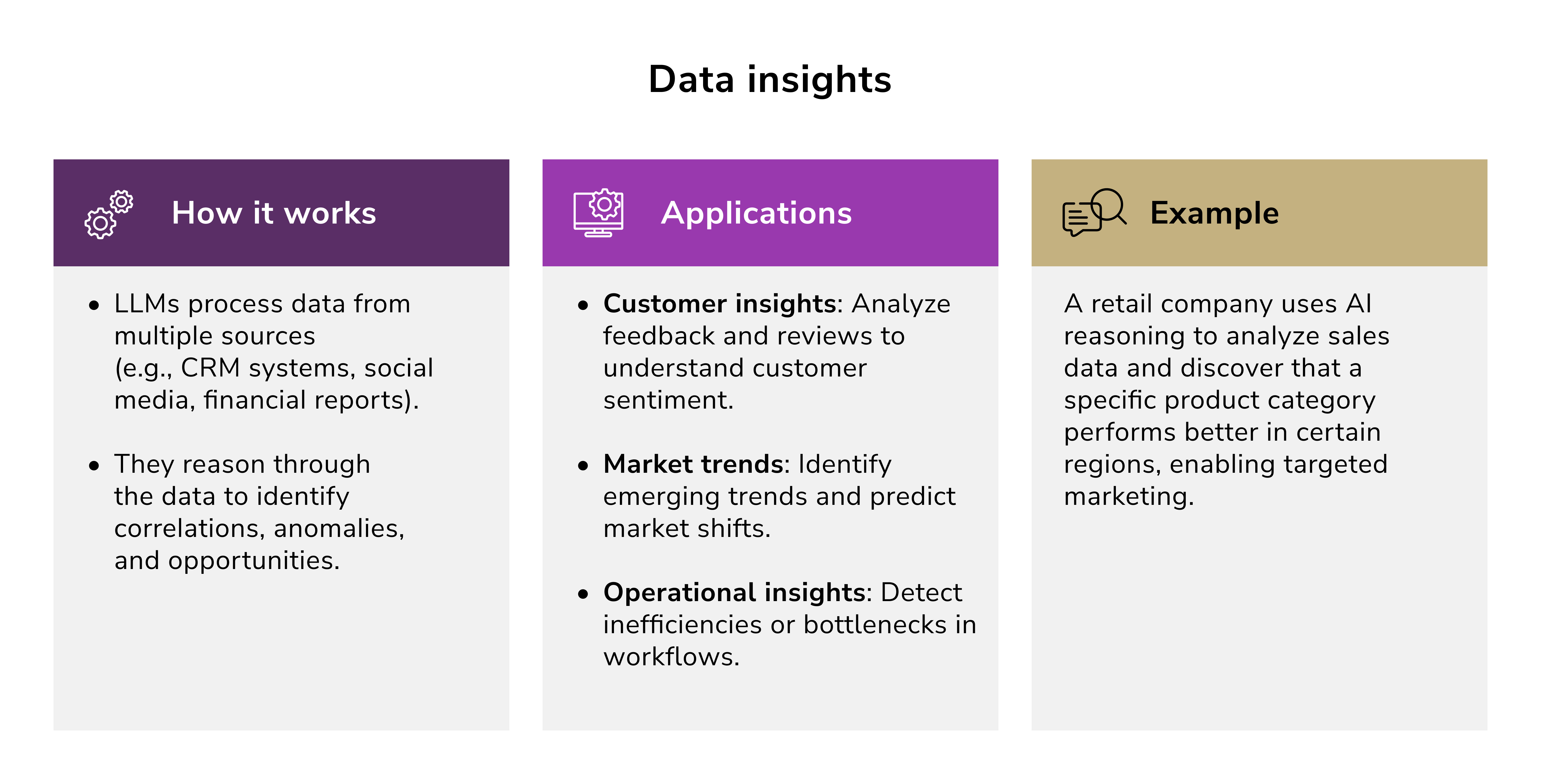

Data Insights

LLMs process data from multiple sources (e.g., sales reports, customer feedback, market trends). Using inductive reasoning, the system generalizes from this data to identify correlations, trends, and anomalies.

For instance, marketing can use an LLM to analyze campaign performance. The system might identify that email campaigns with personalized subject lines have a 20% higher open rate.
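A "20% higher open rate" can mean a relative lift or a gap in percentage points, and it is worth checking that the difference is not just noise. The sketch below uses hypothetical campaign counts and a standard two-proportion z-test; |z| above about 1.96 corresponds to significance at the 5% level (two-sided).

```python
import math


def open_rate(opens: int, sent: int) -> float:
    return opens / sent


def two_proportion_z(opens_a: int, sent_a: int, opens_b: int, sent_b: int) -> float:
    """z statistic for the difference between two open rates (pooled standard error)."""
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    return (open_rate(opens_a, sent_a) - open_rate(opens_b, sent_b)) / se


# Hypothetical campaign results: personalized vs. generic subject lines.
personalized = open_rate(2_400, 10_000)  # 24% open rate
generic = open_rate(2_000, 10_000)       # 20% open rate

relative_lift = (personalized - generic) / generic  # "20% higher" in relative terms
absolute_lift_pp = (personalized - generic) * 100   # 4 percentage points
z = two_proportion_z(2_400, 10_000, 2_000, 10_000)

print(f"relative lift {relative_lift:.0%}, absolute lift {absolute_lift_pp:.1f} pp, z = {z:.1f}")
```

Reporting both numbers avoids a common misreading: a 20% relative lift here is only a 4-point absolute difference.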

The business value it brings:

- enables data-driven decision-making

- identifies opportunities for growth and areas for improvement.

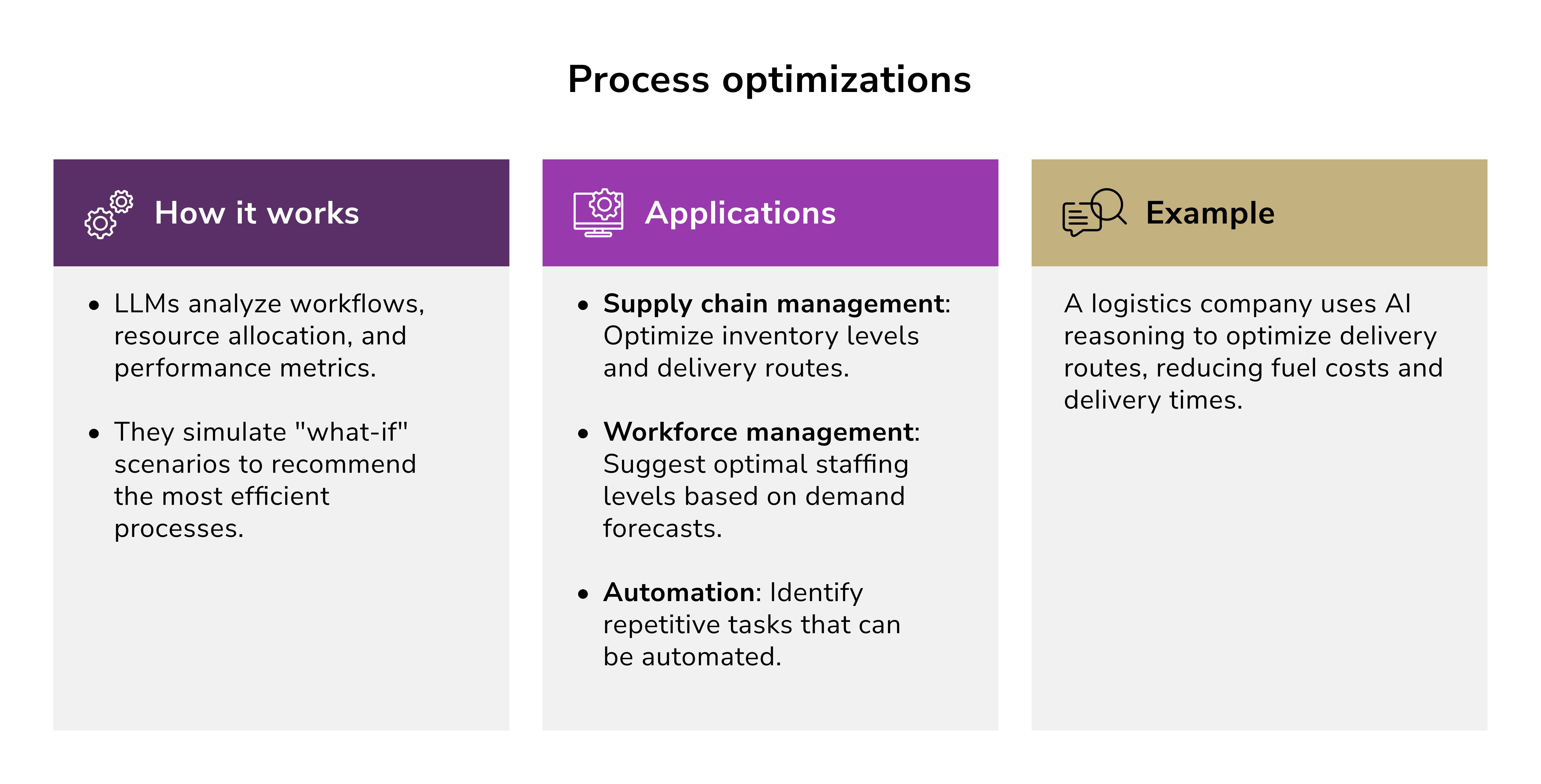

Process Optimizations

LLMs analyze existing business workflows and identify bottlenecks. The system then uses abductive reasoning to hypothesize the root causes of inefficiencies and suggest solutions.

For instance, a logistics company can use an LLM to optimize delivery routes. The system identifies routes that are prone to delays and suggests alternative paths.

The business value it brings:

- reduces operational costs

- improves productivity and efficiency.

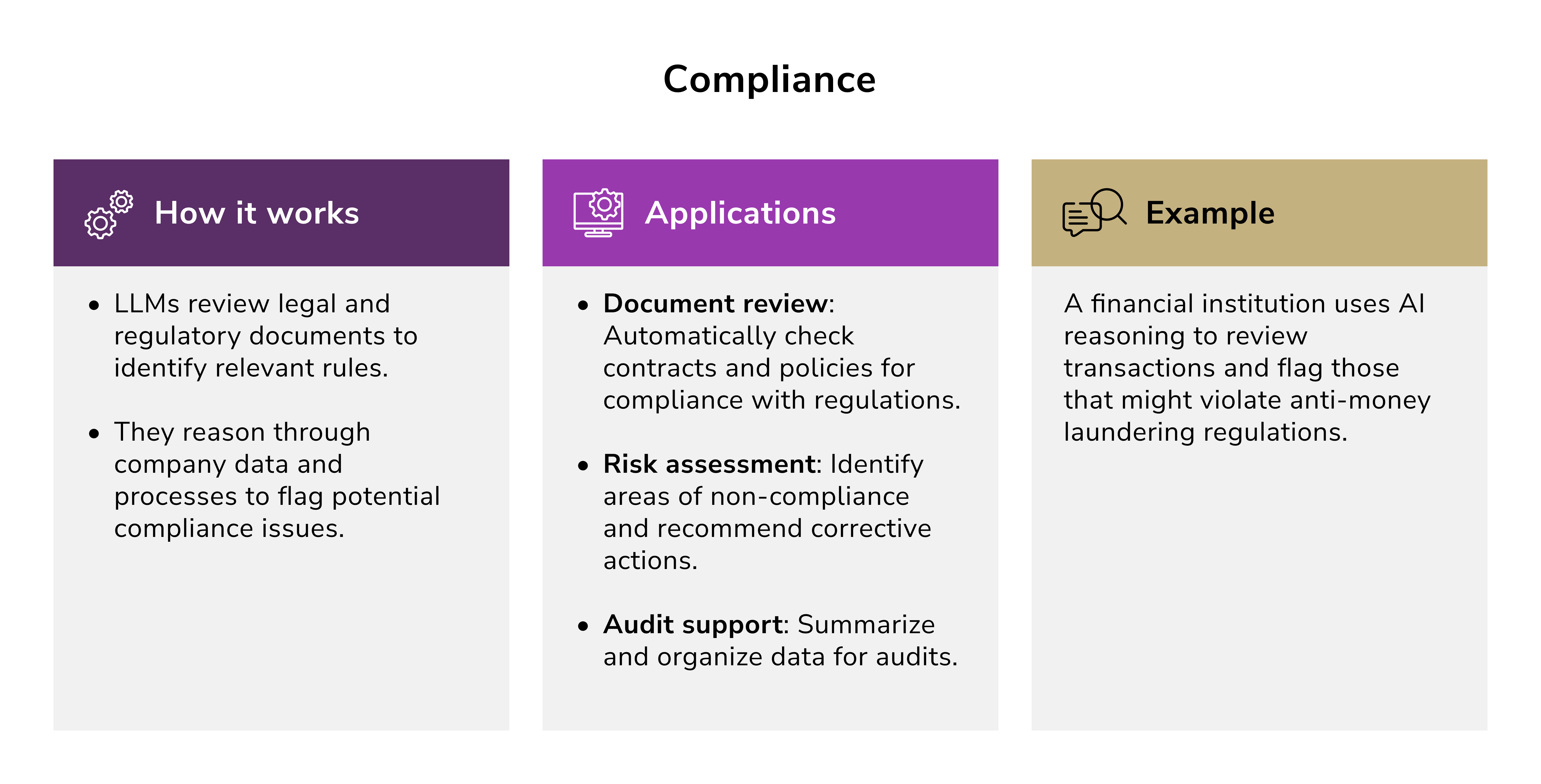

Compliance

LLMs analyze legal documents, industry standards, and company policies. The system uses rule-based reasoning to ensure that actions and processes comply with regulations. Let's say a financial institution uses an LLM to review transactions for compliance with anti-money laundering (AML) regulations. The system flags suspicious activities for further investigation.
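Rule-based flagging of this kind can be sketched as a set of checks that each attach a human-readable reason. The thresholds, the placeholder country code, and the "structuring" heuristic below are hypothetical, chosen for illustration; real AML programs follow jurisdiction-specific regulations and far more detailed typologies. The sketch also assumes all transactions fall within one monitoring window (e.g., one day).

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    tx_id: str
    customer: str
    amount: float
    country: str


# Illustrative rules only (assumptions, not actual regulatory thresholds).
LARGE_AMOUNT = 10_000
HIGH_RISK_COUNTRIES = {"XX"}  # placeholder code


def flag(transactions: list[Transaction]) -> dict[str, list[str]]:
    """Return {tx_id: [reasons]} for transactions that trigger any rule."""
    flags: dict[str, list[str]] = {}
    running_totals: dict[str, float] = {}  # per-customer total within the window
    for tx in transactions:
        reasons = []
        if tx.amount >= LARGE_AMOUNT:
            reasons.append("large amount")
        if tx.country in HIGH_RISK_COUNTRIES:
            reasons.append("high-risk jurisdiction")
        running_totals[tx.customer] = running_totals.get(tx.customer, 0) + tx.amount
        # Several smaller payments that together cross the threshold may indicate structuring.
        if tx.amount < LARGE_AMOUNT and running_totals[tx.customer] >= LARGE_AMOUNT:
            reasons.append("possible structuring")
        if reasons:
            flags[tx.tx_id] = reasons
    return flags


txs = [
    Transaction("t1", "c1", 12_000, "US"),
    Transaction("t2", "c2", 6_000, "US"),
    Transaction("t3", "c2", 5_000, "US"),
    Transaction("t4", "c3", 500, "XX"),
    Transaction("t5", "c4", 200, "US"),
]
print(flag(txs))
```

Attaching reasons to every flag keeps the output reviewable: an investigator sees why a transaction was escalated, not just that it was.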

The business value it brings:

- minimizes legal and financial risks

- ensures adherence to industry standards and regulations.

Strategic Planning

LLMs simulate various scenarios based on historical data and market conditions. The system uses probabilistic reasoning to predict outcomes and recommend strategies. Let's say a company uses an LLM to plan its market expansion. The system analyzes competitor performance, customer demographics, and economic conditions to recommend the best regions for growth.

The business value it brings:

- enhances decision-making with data-backed strategies

- reduces uncertainty in planning and forecasting.

Example of AI Reasoning Applied in Fintech

Let's explore how AI reasoning is applied in a credit risk analysis scenario. Suppose a bank receives a loan application from a client who provides the following details:

- Age: 35

- Income: $60,000/year

- Credit score: 720

- Employment status: full-time

- Loan amount requested: $20,000

- Loan purpose: home renovation

The AI system is tasked with determining whether to approve or reject the loan and assessing the associated credit risk.

The inference engine applies reasoning to evaluate the applicant's credit risk.

As per rule-based reasoning:

- Credit score: 720 = low risk.

- Employment status: full-time = low risk.

- Loan purpose: home renovation = medium risk.

Probabilistic reasoning works as follows:

- The system calculates a simplified debt-to-income ratio: (loan amount / annual income) × 100 = ($20,000 / $60,000) × 100 = 33.3%. (In practice, lenders usually divide total monthly debt payments by gross monthly income.) The ratio is below the 40% threshold, which indicates low risk.

- Bayesian analysis combines these factors to estimate the probability of default. In this case, the probability of default = 5% (based on historical data for similar profiles).

Now, the AI system combines the results of rule-based and probabilistic reasoning to make a decision. Since the overall risk level for this applicant is low, the system will recommend approving the loan.
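The walkthrough can be condensed into a small decision function. This is a sketch under stated assumptions: the score and purpose risk tiers, the 10% maximum acceptable default probability, and the field names are hypothetical; the 40% ratio threshold, the ratio formula (loan amount divided by annual income), the 5% default probability, and the offer terms come from the example above.

```python
from dataclasses import dataclass


@dataclass
class Application:
    age: int  # collected, but not used by these illustrative rules
    annual_income: float
    credit_score: int
    employment: str
    loan_amount: float
    purpose: str


# Assumed risk tiers for loan purposes.
PURPOSE_RISK = {"home renovation": "medium", "business": "high"}


def rule_based_risks(app: Application) -> dict[str, str]:
    """Rule-based step: map each attribute to a risk level (assumed thresholds)."""
    score_risk = "low" if app.credit_score >= 700 else "medium" if app.credit_score >= 620 else "high"
    return {
        "credit_score": score_risk,
        "employment": "low" if app.employment == "full-time" else "medium",
        "purpose": PURPOSE_RISK.get(app.purpose, "medium"),
    }


def debt_to_income_pct(app: Application) -> float:
    # The example's simplified formula: loan amount / annual income x 100.
    return app.loan_amount / app.annual_income * 100


def decide(app: Application, default_probability: float,
           dti_threshold: float = 40.0, max_default: float = 0.10) -> dict:
    """Combine rule-based risk levels with the ratio check and a default estimate."""
    risks = rule_based_risks(app)
    ratio = debt_to_income_pct(app)
    risks["debt_to_income"] = "low" if ratio < dti_threshold else "high"
    approve = "high" not in risks.values() and default_probability <= max_default
    offer = {"interest_rate": 0.05, "monitor_months": 6} if approve else None  # terms from the example
    return {"risks": risks, "ratio_pct": round(ratio, 1), "approve": approve, "offer": offer}


applicant = Application(35, 60_000, 720, "full-time", 20_000, "home renovation")
print(decide(applicant, default_probability=0.05))  # 5% default estimate, per the example
```

The default probability is passed in rather than computed, because the example attributes it to historical data on similar profiles that this sketch does not have.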

The system output may look as follows:

- Offer a standard interest rate of 5%.

- Monitor repayment behavior for the first 6 months.

The bank can then track the applicant's repayment behavior. If the applicant repays on time, the system updates its knowledge base to reinforce the decision-making process. If the applicant defaults, the system adjusts its models to improve future risk assessments.

Business Benefits of AI Reasoning

Let's explore the key business benefits of AI reasoning across various applications.

Faster Decision-Making and Responses

AI reasoning systems process vast amounts of complex data in a fraction of the time it would take a human. Businesses can rely on these systems to quickly adapt to changing market conditions, meet evolving customer needs, and address operational challenges. By reducing the time spent on manual analysis, companies can focus on strategic initiatives without compromising accuracy.

Enhanced Predictive Accuracy and Risk Assessment

One of the standout benefits of AI reasoning is its ability to deliver superior predictive capabilities. By analyzing large datasets and recognizing patterns, AI models improve decision-making and help businesses

- anticipate shifts in market trends

- evaluate and mitigate potential risks

- refine operational strategies with confidence.

Applications of AI in financial forecasting, healthcare, and logistics demonstrate how predictive analytics can accurately forecast demand, detect fraud, or personalize patient care plans.

Operational Efficiency and Workflow Automation

AI reasoning streamlines repetitive and time-intensive activities. Through business process automation, businesses can consistently produce high-quality results, redirecting employee focus toward creative and strategic work. For instance:

- Logistics and supply chain optimization improve delivery times and reduce costs.

- Scheduling automation ensures better allocation of resources.

- Decision-support systems reduce errors in financial or operational tasks.

These applications lead to improved productivity and enhanced consistency across various business functions.

Scalable and Cost-Effective Solutions

AI reasoning enables organizations to grow without a proportional increase in costs. Adaptive AI models continuously improve outcomes by learning from new data, which helps sustain efficiencies as businesses expand. By minimizing waste, AI-driven problem-solving fosters sustainable growth.

Scalable platforms power everything from customer service automation in retail to predictive maintenance in manufacturing, showing their versatility across industries.

Compliance and Governance Improvements

Complying with complex regulatory standards becomes more manageable with AI reasoning. These systems evaluate large regulatory datasets to help organizations structure operations to meet industry standards. Examples include:

- AI-powered legal assessments to minimize financial or reputational risks.

- Automated checks in healthcare to ensure patient data privacy.

- Predictive compliance tools in finance to prevent violations and penalties.

With these tools, businesses can maintain integrity while staying agile in an evolving regulatory landscape.

Customer Engagement and Service Optimization

By interpreting user behavior and preferences, AI recommends personalized solutions and facilitates real-time issue resolution. Specific applications include:

- Virtual assistants and chatbots powered by AI can manage customer queries with efficiency and empathy.

- Sentiment analysis tools allow businesses to better understand customer emotions, improving overall service quality.

- Automated recommendations drive engagement and customer loyalty.

Bias in AI Reasoning

AI bias represents one of the most pervasive and dangerous ethical challenges facing modern organizations. AI learning systems acquire knowledge from datasets, and when these datasets contain biased information, AI can amplify and perpetuate existing discrimination.

The problem is that if historical hiring data shows a preference for certain demographics, the AI system will likely replicate these patterns. For instance, a hiring algorithm trained on resumes from a male-dominated field could unintentionally discount female candidates. Similarly, a facial recognition system trained predominantly on lighter-skinned faces may inaccurately identify individuals with darker skin tones. These biases often stem from datasets that lack diversity or balance. But they can also emerge from feature selection, model optimization strategies, or the way a problem is framed during the development process.

The effects of bias in AI are particularly concerning in high-stakes scenarios. For example, a loan approval system might use zip codes as a proxy for creditworthiness. If historical lending data reveals lower approval rates in neighborhoods with higher minority populations, the AI could inadvertently perpetuate discriminatory practices akin to redlining.

Likewise, predictive policing tools trained on data influenced by historical over-policing in certain communities could reinforce biased patrolling patterns, further marginalizing those groups. Real-world examples demonstrate the tangible harm bias can cause. A common issue is the perception that data is inherently "neutral," which leads developers to focus on metrics like model accuracy while neglecting fairness considerations.

Addressing AI Bias Effectively

Mitigating bias requires a proactive approach at various stages of development. The training data must be thoroughly audited to ensure balanced representation, incorporating diverse demographics, edge cases, and unbiased labels. Methods such as reweighting underrepresented groups or generating synthetic data can help address imbalances.
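Reweighting can be made concrete with the "reweighing" scheme of Kamiran and Calders, which assigns each example the weight P(group) · P(label) / P(group, label) so that group membership and outcome become statistically independent in the weighted data. The tiny hiring dataset below is hypothetical.

```python
from collections import Counter


def reweighing(groups: list[str], labels: list[int]) -> list[float]:
    """Weight each example by P(g) * P(y) / P(g, y) (Kamiran & Calders reweighing)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(group: str, groups, labels, weights) -> float:
    """Share of (weighted) positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


# Hypothetical hiring data: group A has 3/4 positive labels, group B only 1/4.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing(groups, labels)

for g in ("A", "B"):
    print(g, round(weighted_positive_rate(g, groups, labels, weights), 2))
```

After reweighing, both groups have a weighted positive rate of 0.5. The weights would then be passed to a learner that accepts per-example weights, for example the `sample_weight` argument of scikit-learn's `LogisticRegression.fit`.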

The design of the AI model plays a critical role. Developers may need to adopt fairness-aware algorithms or restrict the use of certain features, such as zip codes, that could embed or exacerbate discriminatory correlations.

Continuous post-deployment monitoring is also essential. For example, if a credit scoring model shows disparities in error rates across different income levels, it may require frequent retraining with updated data.
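A basic monitoring check of this kind compares error rates across groups and raises a flag when the gap exceeds a tolerance. The monitoring batch, the income-band labels, and the 5-point tolerance below are assumptions for illustration; which disparity metric matters (overall error, false negatives, false positives) depends on the application.

```python
def error_rates_by_group(y_true, y_pred, groups) -> dict[str, float]:
    """Share of wrong predictions within each group."""
    counts: dict[str, tuple[int, int]] = {}  # group -> (errors, total)
    for truth, pred, group in zip(y_true, y_pred, groups):
        errors, total = counts.get(group, (0, 0))
        counts[group] = (errors + (truth != pred), total + 1)
    return {group: errors / total for group, (errors, total) in counts.items()}


def needs_retraining(rates: dict[str, float], max_gap: float = 0.05) -> bool:
    """Flag the model when the best- and worst-served groups differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap


# Hypothetical monitoring batch: true outcomes, model predictions, and income band.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
bands = ["high", "high", "high", "high", "low", "low", "low", "low"]

rates = error_rates_by_group(y_true, y_pred, bands)
print(rates, needs_retraining(rates))
```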

Note: Tools like IBM's AI Fairness 360 and Google's What-If Tool can assist in detecting and addressing biases, but no universal solution exists. Combating bias is both a technical and ethical challenge that demands collaboration among developers, domain experts, and impacted communities.

Retrieval-based Model for Unbiased AI

Retrieval-based AI relies on a predefined, carefully curated set of responses. This approach ensures that the AI operates within the boundaries of pre-approved content, reducing the likelihood of inaccuracies or biased outputs.

For instance, a retrieval-based AI used in healthcare might analyze symptoms and provide guidance drawn from a vetted collection of reputable medical sources. This ensures that the information shared is both accurate and reliable, steering clear of outdated or unverified content that might appear in a random online source. When reliability and trustworthiness are paramount, such as in healthcare-related applications, relying on proven responses rather than creating brand-new ones is often a safer and more effective path.

Lack of Explainability in AI Reasoning

Modern AI systems, particularly deep neural networks, make decisions through millions of interconnected calculations. While these systems can achieve remarkable accuracy, they cannot easily explain why they reached specific conclusions. This opacity becomes problematic when AI systems make high-stakes decisions affecting human lives.

Certain sectors require clear explanations for AI decisions. These include:

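- Loan approval decisions must be explainable to customers and regulators. In the US, the Fair Credit Reporting Act (FCRA) and the Equal Credit Opportunity Act (ECOA) require lenders to notify applicants of adverse credit decisions and disclose the principal reasons or key factors behind them.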

- Medical professionals need to understand AI recommendations before making treatment decisions. Patients also deserve explanations for AI-driven diagnoses or treatment suggestions.

- Court decisions influenced by AI risk assessment tools must be transparent and subject to challenge.

Organizations can address explainability challenges through several approaches:

- Interpretable models: "white box" models are inherently more transparent, sometimes at the expense of some accuracy.

- Post-hoc explanation tools: techniques such as SHAP or LIME analyze and explain the decisions of "black box" models after they have been made.

- Hybrid approaches: combining AI systems with human oversight ensures that critical decisions receive human review and explanation.

- Documentation standards: organizations maintain comprehensive documentation of AI system design, training data, and decision processes.

White Box Model for Unbiased and Explainable AI

A white-box AI model is a type of ML system where the internal logic and decision-making process are fully transparent and understandable to developers. Unlike black-box models, which obscure the reasoning behind their predictions, white-box models are specifically designed to provide clarity on how inputs lead to outputs. This transparency is typically achieved through simple structures like decision trees, linear regression models, or rule-based systems.

A white-box model, such as logistic regression, can show exactly how inputs like credit scores and debt-to-income ratios led to a loan rejection, making it easier for lenders to meet regulatory requirements to explain adverse decisions. Furthermore, white-box models enable domain experts, such as doctors or business analysts, to validate predictions against their expertise, fostering greater trust in AI systems and promoting collaborative decision-making.

This level of interpretability makes white-box models particularly suitable for scenarios where comprehending the "why" behind a prediction is as important as the prediction itself.
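The kind of explanation a logistic regression provides can be sketched by hand. The intercept, coefficients, baseline applicant, and feature names below are hypothetical, not a fitted model. Each feature's contribution is its coefficient times its deviation from the baseline, i.e., how much it moved the log-odds of default, which can be ranked into "reason codes".

```python
import math

# Hypothetical model for P(default); coefficients are illustrative, not fitted to real data.
INTERCEPT = 6.0
COEFFICIENTS = {"credit_score": -0.012, "debt_to_income_pct": 0.05}
# Assumed reference applicant used to express each feature's effect as a deviation.
BASELINE = {"credit_score": 700, "debt_to_income_pct": 30}


def predict_default(features: dict[str, float]) -> float:
    """Logistic regression: sigmoid of intercept + weighted sum of features."""
    log_odds = INTERCEPT + sum(COEFFICIENTS[f] * v for f, v in features.items())
    return 1 / (1 + math.exp(-log_odds))


def reason_codes(features: dict[str, float]) -> list[tuple[str, float]]:
    """Rank features by how much they raised the log-odds of default vs. the baseline."""
    contributions = {f: COEFFICIENTS[f] * (v - BASELINE[f]) for f, v in features.items()}
    return sorted(contributions.items(), key=lambda item: item[1], reverse=True)


applicant = {"credit_score": 580, "debt_to_income_pct": 55}
print(f"P(default) = {predict_default(applicant):.2f}")
for feature, delta in reason_codes(applicant):
    print(f"{feature}: {delta:+.2f} log-odds")
```

For this hypothetical applicant, the low credit score adds about 1.44 to the log-odds and the high ratio about 1.25, so both can be stated as concrete reasons for a rejection.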

Andriy Lekh
