Where AI Can't Help You: Cases of AI Hindering Business Processes

Despite the remarkable advances in artificial intelligence, business leaders are discovering a critical truth: the most impactful work still requires distinctly human capabilities. While AI excels at processing data and automating routine tasks, it falls short in areas that define competitive advantage and sustainable growth.

Understanding these limitations isn't about dismissing AI's value but about recognizing where human skills remain irreplaceable. Companies that thrive will be those that strategically combine AI's efficiency with human judgment, creativity, and connection.

Let's examine the crucial areas where AI and generative AI integration simply cannot replace human expertise, and why these skills will become even more valuable as automation advances.

When AI Implementation Backfires: Case Studies Across Industries

Even well-established businesses have seen AI initiatives make their processes worse. The sector-specific examples below each describe the AI system, how it failed, and what happened next.

Retail: Target's Predictive Marketing Scandal

Target's predictive marketing analytics model sought to identify major life events, such as pregnancy, for targeted marketing. The model inferred from a teenager's shopping patterns that she was pregnant, and Target sent maternity-related ads to her home, revealing sensitive information to her family. The incident triggered a public controversy over privacy and ethics.

Consequences: Following the incident, Target reevaluated how it used customer data to avoid further breaches of trust, and it began monitoring and fine-tuning its personalization efforts more closely.

Finance: Apple Card's Algorithmic Bias

The Apple Card's credit underwriting algorithm, developed by Goldman Sachs, automatically set and updated cardholders' credit limits. After launch, a prominent tech entrepreneur noticed that his credit limit was significantly higher than his spouse's, even though she had a better credit score. The AI appeared to discriminate by gender, possibly because of biased training data or flawed variables.

Consequences: The algorithm came under public and regulatory scrutiny over alleged gender bias. The episode showed how an opaque, poorly documented credit model can damage customer trust and invite investigation.

Healthcare: IBM Watson at MD Anderson

MD Anderson Cancer Center partnered with IBM Watson to create the Oncology Expert Advisor, an AI tool that used natural language processing to review patient records and suggest potential cancer treatments. Despite the hype, physicians in practice often found Watson's recommendations unhelpful or declined to accept them. The system struggled with the complexity and nuance of oncology. An internal audit found it had not met its clinical goals, and physicians were hesitant to trust a "black box" tool. IBM's powerful AI ultimately proved no match for the messy reality of healthcare, revealing a fundamental mismatch between how machines learn and how doctors work.

Consequences: After four years and $62 million spent, MD Anderson canceled the project in 2016. This high-profile failure hurt IBM's reputation in health AI and underscored that without physician buy-in and clear efficacy, AI can become a costly dead-end.

Manufacturing: Tesla's Over-Automation Misstep

For the Model 3, Tesla built an advanced assembly line that used cutting-edge robotics and AI to replace human labor. The automation became so convoluted that it started to slow production. CEO Elon Musk admitted that "excessive automation at Tesla was a mistake… Humans are underrated." In trying to automate every part of the line, Tesla lost sight of the actual goal: getting cars built.

Consequences: Tesla missed its production targets, and deliveries were delayed. The company scaled back the automation and brought human workers back onto the line to prioritize output. The incident showed that automating everything indiscriminately can hurt operational performance and that human skill remains crucial in many processes.

These cases show how AI projects can fail by violating fundamental business realities, whether by neglecting privacy, embedding bias, lacking user trust, or overestimating the technology. Each failure taught a lesson: new technology must meet the business's actual needs, data and models must be aligned, and humans must stay involved in the process.

Things AI Can't Do for Your Business

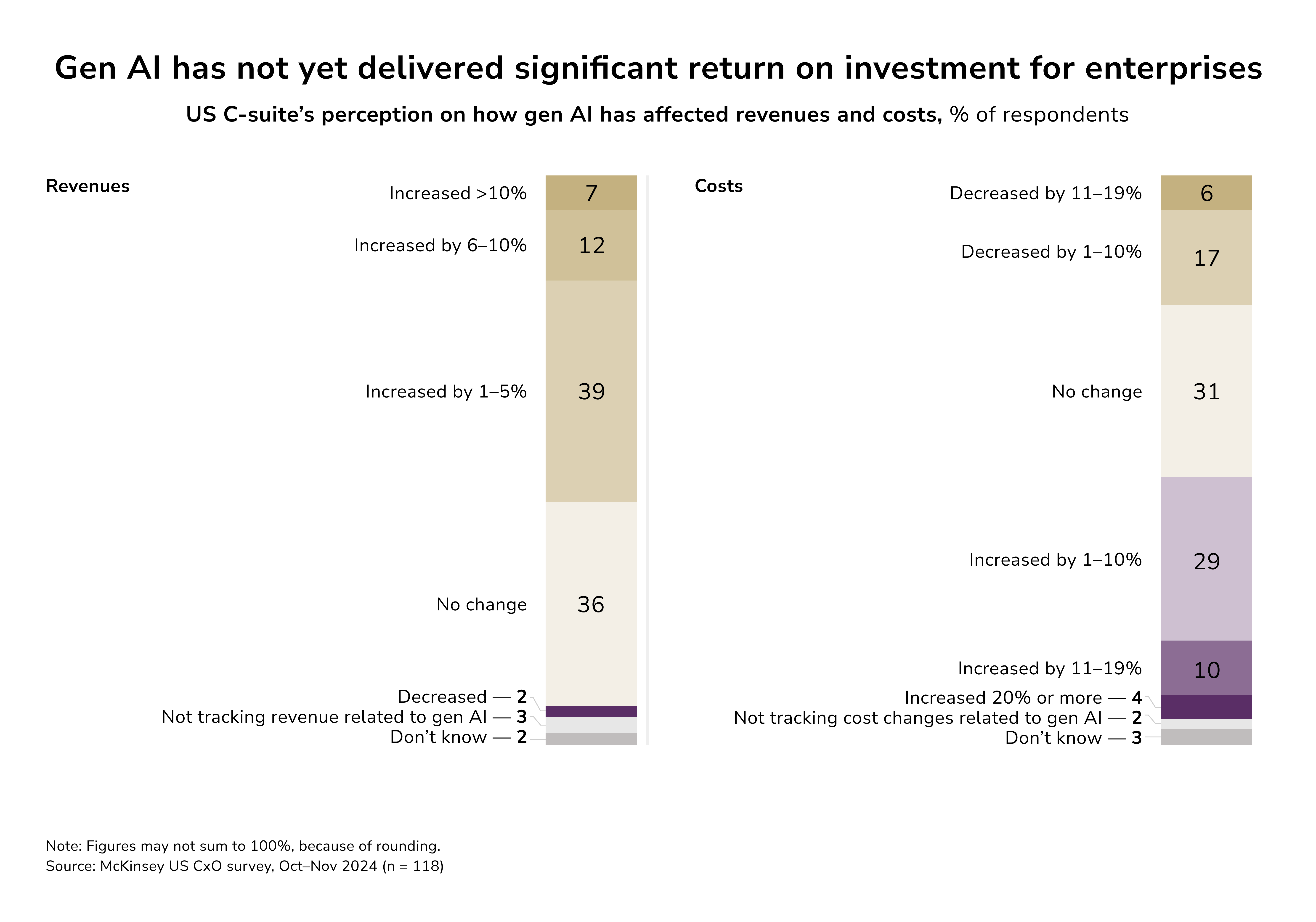

Organizations have poured billions into generative AI initiatives, yet a striking pattern emerges from the data: 95% of them are seeing no measurable return on their investment. This gap is often called the GenAI Divide, and it affects organizations of all sizes, from large enterprises and SMBs to startups and established consulting firms. The divide stems from a paradox: organizations are adopting generative AI tools rapidly, yet few are experiencing meaningful change.

The fundamental obstacle isn't what most assume. Infrastructure limitations, regulatory hurdles, and talent shortages aren't the primary culprits. Instead, the core challenge lies in how these systems learn, or more precisely, how they don't. Current generative AI implementations lack the ability to incorporate user feedback, adjust to specific contexts, or evolve their capabilities over time.

1. Community Building

Building authentic communities requires something no artificial intelligence can replicate: genuine human presence. Real community is not defined by standard social media engagement metrics alone. It demands what researchers call placebinding, that is, being fully present with others in shared physical and social spaces.

Successful community building requires leaders who show up, listen deeply, and respond to their members' evolving needs. This means attending networking events, hosting face-to-face meetings, and creating spaces where authentic relationships can flourish. These relationships build a kind of customer loyalty that no AI chatbot can provide.

2. Giving Quality Feedback

While AI can identify grammatical errors and flag potential issues, meaningful feedback requires empathy, context, and forward-thinking insight that helps people grow while preserving their confidence and creativity.

AI feedback tools are trained on historical data and patterns. They cannot anticipate where a piece of work might lead or grasp the particular vision its creator has for it. Human judgment, by contrast, can weigh the potential value of creative work, action plans, and leadership decisions.

Effective feedback also requires reading between the lines: understanding what someone isn't saying, recognizing their aspirations, and connecting their current work to broader career goals. These nuanced conversations define professional growth, and automating them requires a balance that AI systems cannot yet strike.

3. Professional Development

Deep skill development that produces lasting expertise involves a degree of cognitive strain. AI tools can act as learning accelerators, but if they remove that strain entirely, they create a dangerous dependency that impedes skill acquisition.

The formula for professional development is simple yet crucial: Natural Ability + Practice = Strength. When AI takes over a larger share of the "practice" component, it deprives employees of the chance to master real competencies. It's best to leave routine tasks to AI while your team develops the expertise that will advance your company.

4. Professional Network Building

Digital connection tools have made networking more efficient, but they have also been linked to the loneliness epidemic that affects both private and professional relationships. The most important professional networks are built through real connections, not LinkedIn automation.

Here's why face-to-face networking remains critical:

- builds trust through shared experiences and mutual vulnerability

- creates memorable interactions that lead to lasting partnerships

- allows for spontaneous conversations that often yield unexpected opportunities

- develops the social skills necessary for leadership and collaboration

Highly successful business professionals know that networking is not about accumulating contacts but about building deep, organic relationships grounded in genuine concern and trust. These relationships provide a bedrock of referrals, collaborations, and opportunities that grow and sustain a business for years.

5. Understanding Nuance, Ethics, and Context

AI’s most significant weakness is its inability to grasp and navigate the contextual and ethical intricacies of responsible business leadership. While AI can and will automate tasks involving huge data sets, it still cannot handle the intricate decision-making that running a business requires.

Here are the key reasons why AI systems lack judgment:

- Lack of context: AI systems do not understand the broader context of a business decision. They overlook the cultural nuances, historical precedents, and industry-specific intricacies that business leaders grasp intuitively.

- Bias risks: Many AI systems are trained on biased data and can therefore reproduce biased, discriminatory practices when no human is in the loop. Even when a biased decision looks statistically reasonable, human judgment is needed to keep business practices ethical and inclusive.

- Security blind spots: AI can defend against known attack patterns but struggles to mitigate social engineering and novel attack vectors. Human skills remain vital for anticipating an attacker's psychology and adopting a proactive security posture.

- Human emotion and empathy: Business decisions involve and affect human beings, whether they are employees, customers, or community members. Understanding a decision's emotional consequences is crucial, and AI algorithms lack the capacity for this sensitivity.

How AI Hinders Business Processes

AI isn't always the silver bullet businesses think it is. In some instances, traditional methods involving human interaction and rule-based systems produce improved outcomes with less risk, greater reliability, and lower costs. So, there is value in recognizing when to pass on AI and knowing when to apply it.

For now, let’s focus on cases when it can do more damage than good.

False Positives Overwhelm True Results

A flood of false positives that drowns out accurate detections is one of the most critical, and most overlooked, obstacles to AI deployment. It creates a cascade of inefficiencies that can paralyze operations rather than streamline them.

To illustrate, consider a customer service monitoring system designed to detect agents who shout at customers. Such a system may flag legitimately loud conversations as abusive, partly because voice AI still struggles to separate the speech it should analyze from background noise. Customer service managers then spend inordinate amounts of time reviewing false alerts instead of focusing on real abuse cases, which defeats the purpose of deploying the AI in the first place.

As a result, the hidden costs of false positives include:

- Increased manual review, which cancels out the efficiency gains AI was supposed to deliver.

- Alert fatigue, which causes real problems to be overlooked.

- Legitimate business activities being wrongly flagged and penalized.

- Diminished trust in automated systems across the business.
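A little arithmetic shows how quickly false positives can outnumber real detections when genuine problems are rare. The sketch below uses purely illustrative assumptions, not figures from any real deployment: out of 10,000 calls, 1% involve real abuse, the detector catches 90% of those, and it wrongly flags 5% of normal calls.

```python
def alert_breakdown(total_calls: int, prevalence: float,
                    sensitivity: float, false_positive_rate: float) -> dict:
    """Split the alerts a detector raises into true and false positives."""
    abusive = total_calls * prevalence          # calls that really involve abuse
    normal = total_calls - abusive              # all other calls
    true_pos = abusive * sensitivity            # abusive calls correctly flagged
    false_pos = normal * false_positive_rate    # normal calls wrongly flagged
    precision = true_pos / (true_pos + false_pos)  # share of alerts that are real
    return {"true_positives": true_pos, "false_positives": false_pos,
            "precision": precision}

# Illustrative assumptions only: 10,000 calls, 1% abusive, 90% caught, 5% false alarms.
result = alert_breakdown(10_000, 0.01, 0.90, 0.05)
print(f"{result['true_positives']:.0f} real alerts, "
      f"{result['false_positives']:.0f} false alarms, "
      f"precision {result['precision']:.2f}")
# 90 real alerts, 495 false alarms, precision 0.15
```

Under these assumptions, a detector that looks accurate on paper still produces more than five false alarms for every real case. Every one of those alarms lands in a manager's review queue.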

Better alternative: Apply threshold-based monitoring with random auditing to minimize false positives while maintaining the desired level of supervision.
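Here is a minimal sketch of threshold-based monitoring with random auditing. It assumes each call already has a numeric risk score from some upstream detector. The `score` field, the 0.9 threshold, and the 2% audit rate are hypothetical values chosen for illustration. Only calls above a conservative threshold raise alerts. A small random sample of the remaining calls is also sent for human review, so supervisors can catch what the threshold misses without reviewing every borderline call.

```python
import random
from dataclasses import dataclass

@dataclass
class Call:
    call_id: str
    score: float  # hypothetical 0..1 risk score from an upstream detector

def select_for_review(calls, threshold=0.9, audit_rate=0.02, seed=None):
    """Return (alerts, audits): high-score calls plus a random audit sample."""
    rng = random.Random(seed)  # seeded RNG keeps audit selection reproducible
    alerts = [c for c in calls if c.score >= threshold]
    below = [c for c in calls if c.score < threshold]
    # Audit a fixed fraction of below-threshold calls, at least one if any exist.
    k = min(len(below), max(1, round(len(below) * audit_rate))) if below else 0
    audits = rng.sample(below, k)
    return alerts, audits

calls = [Call(f"c{i}", i / 100) for i in range(100)]
alerts, audits = select_for_review(calls, seed=42)
print(len(alerts), len(audits))  # 10 2
```

Raising the threshold trades missed cases for fewer false alarms. The random audit keeps a steady, bounded level of supervision over the calls below it.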

Zero Error Tolerance Makes AI Unusable

In certain industries where extreme precision is needed, AI’s unpredictability becomes a liability rather than an asset. This is especially true in the preparation of legal documents.

When lawyers use AI to prepare court documents, every citation, regulation, and legal precedent must be checked and approved. Attorneys cannot afford to submit incorrect citations, inaccurate case histories, or fabricated authorities to a court. Such a mistake can turn into a malpractice case, risking far more than the time saved.

Here are some high-stakes scenarios that require perfect accuracy:

- Medical diagnoses and treatment recommendations.

- Financial regulatory compliance reporting.

- Cybersecurity system controls.

- Legal document preparation for court submissions.

Strategic Approach: Use AI only for research and draft generation, and always use a human check and approval workflow. Use AI as a starting point, not a final product.

When Simple Automation Achieves Better Results

Many organizations fail to recognize when advanced technology truly adds value, as evidenced by the use of complicated AI systems to solve problems that basic automation can resolve more efficiently.

Generating contracts is a good example. A law firm may decide to implement an AI system designed to create NDAs, when in reality, a simple decision tree could accomplish the task. The old-fashioned approach has the added advantage of no hallucination risk, predictable outcomes, lower costs, and easier upkeep.

Here are some indicators that simple automation is sufficient:

- Decision-making follows simple, straightforward rules.

- Only a small number of variables determine the outcome.

- The conditions that determine the output rarely change.

- Reliability requirements are high, with no tolerance for unexpected results.

Implementation strategy: Assess the task's complexity to determine whether an AI solution is appropriate. If the problem fits if-then logic, simple automation will likely solve it.
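To make the NDA example concrete, here is a hypothetical rule-based clause selector, a small decision tree written as plain if-then rules. The clause names and conditions are invented for illustration. They are not legal guidance or any firm's actual template logic. The point is that the same inputs always produce the same pre-approved clauses, with no hallucination risk.

```python
def build_nda(mutual: bool, jurisdiction: str, term_years: int) -> list[str]:
    """Assemble an NDA from pre-approved clauses using plain if-then rules."""
    # Hypothetical clause library: each name stands for pre-approved template text.
    clauses = ["definitions", "confidentiality_obligations"]
    clauses.append("mutual_disclosure" if mutual else "one_way_disclosure")
    if jurisdiction == "EU":
        clauses.append("gdpr_data_handling")
    if term_years > 5:
        clauses.append("extended_term_review")  # hypothetical: long terms get extra review
    clauses.append(f"governing_law_{jurisdiction.lower()}")
    return clauses

print(build_nda(mutual=True, jurisdiction="EU", term_years=3))
# ['definitions', 'confidentiality_obligations', 'mutual_disclosure', 'gdpr_data_handling', 'governing_law_eu']
```

Each branch can be reviewed by a lawyer once. Changing a rule is a one-line edit rather than a model retraining exercise.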

Human Connection Still Defines Success

Authenticity and genuine human connection remain irreplaceable in many business contexts. AI-generated content often lacks the emotional intelligence and personal touch that builds trust and rapport.

Using AI to create a CEO's monthly video message to employees illustrates this problem. While AI can generate the script efficiently, employees can often detect the lack of authenticity. They sense that the CEO is not personally invested, which ultimately undermines a message meant to build trust in leadership.

Here are some situations where human authenticity is critical:

- Constructing and cultivating a culture of communication.

- Managing customer relations for high-value accounts.

- Managing communication during crises.

- Creative work that expresses the brand and reflects its principles and values.

Balanced approach: Use AI for research and initial drafting, but ensure human leaders personally review and modify content to reflect their authentic voice and perspective.

Basic Rules Outperform Complex Algorithms

Rule-based systems work best in situations with definable rules and unambiguous decision-making criteria. In such settings, the systems are often more reliable, transparent, and cost-efficient than the AI options.

Let’s consider the case of validating discounts in e-commerce. An AI system could evaluate customer behaviors, past purchases, market conditions, and other variables to decide on discount eligibility. In contrast, more reliable and easy-to-understand outcomes can be achieved with a rule-based system that only checks basic criteria such as purchase value, customer status, and promotion codes.
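The rule-based alternative fits in a few lines. The thresholds, customer tiers, and promo codes below are hypothetical. What matters is that every decision can be traced to a named rule, and that traceability is the source of a rule-based system's transparency and audit trail.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Order:
    total: float
    customer_status: str              # hypothetical tiers: "new", "regular", "vip"
    promo_code: Optional[str] = None

VALID_PROMO_CODES = {"SPRING10", "WELCOME5"}  # hypothetical active codes
MIN_ORDER_TOTAL = 50.0                        # hypothetical minimum spend

def validate_discount(order: Order) -> tuple[bool, str]:
    """Return (eligible, reason); the reason doubles as an audit-trail entry."""
    if order.promo_code is not None and order.promo_code not in VALID_PROMO_CODES:
        return False, "invalid promo code"
    if order.total < MIN_ORDER_TOTAL:
        return False, f"order total below {MIN_ORDER_TOTAL:.2f}"
    if order.customer_status == "vip":
        return True, "VIP customer"
    if order.promo_code in VALID_PROMO_CODES:
        return True, f"valid promo code {order.promo_code}"
    return False, "no qualifying rule matched"

print(validate_discount(Order(80.0, "regular", "SPRING10")))  # (True, 'valid promo code SPRING10')
print(validate_discount(Order(30.0, "vip")))                   # (False, 'order total below 50.00')
```

Because the rules run in a fixed order, the same order always gets the same answer and the same logged reason.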

Other advantages of rule-based systems include:

- Transparency in the decision-making process.

- Consistency and predictability of outcomes.

- Lower computational costs and faster processing times.

- Ease of troubleshooting and maintenance.

- Regulatory compliance through clear audit trails.

Decision framework: Choose rule-based systems when business logic is stable, well-understood, and unlikely to require frequent complex modifications.

Data Quality Undermines AI Effectiveness

Achieving adequate data quality takes massive effort, which slows down AI implementation.

But the stakes go beyond delays. AI systems are only as good as the data they are trained on. Insufficient, unbalanced, or poor-quality data can yield results worse than those produced by traditional analytics.

Consider the case of a recruiting firm's AI analysis of resumes. If past discriminatory hiring practices are incorporated into the historical hiring datasets used to train the AI system, the AI will reinforce these practices. The system might systematically disadvantage qualified candidates from underrepresented groups, creating legal liability and missing valuable talent.

Here are data quality red flags:

- A lack of sufficient historical data to use for model training.

- Data sets with documented bias.

- Changing business conditions that make historical data irrelevant.

- Inconsistent data collection methods across different time periods.

Alternative approaches: Implement statistical analysis, structured scoring systems, or blind review processes that acknowledge limitations while maintaining objectivity.
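Here is a minimal sketch of a blind, structured scoring step, assuming candidate records arrive as dictionaries. The redacted field list and the rubric weights are hypothetical. Identifying attributes are removed before the application is scored, and every candidate is scored against the same fixed rubric.

```python
REDACTED_FIELDS = {"name", "gender", "age", "photo_url"}  # hypothetical list
RUBRIC = {                                                # hypothetical weights
    "relevant_experience_years": 2.0,
    "required_skills_matched": 3.0,
    "work_sample_score": 5.0,
}

def blind(candidate: dict) -> dict:
    """Drop identifying fields before review."""
    return {k: v for k, v in candidate.items() if k not in REDACTED_FIELDS}

def structured_score(candidate: dict) -> float:
    """Weighted sum over the fixed rubric; missing criteria score zero."""
    return sum(w * float(candidate.get(k, 0)) for k, w in RUBRIC.items())

applicant = {"name": "A. Example", "gender": "F", "relevant_experience_years": 4,
             "required_skills_matched": 3, "work_sample_score": 8}
reviewed = blind(applicant)
print(structured_score(reviewed))  # 57.0
```

Blinding does not remove bias that leaks through proxy fields. The rubric itself still needs human review.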

Explainability Requirements Exceed AI Capabilities

Regulatory environments and high-stakes decisions often require clear explanations for automated choices. A common issue with AI, especially deep learning models, is their lack of explainability and transparency: they operate as black boxes.

A good example is the AI systems banks use to automate loan approvals. Lending is highly regulated, and compliance requires giving applicants specific reasons for a denial, which an opaque model cannot readily provide.

Other decisions with similar explainability requirements include:

- Patient treatment recommendations.

- Financial forecasting for lending decisions.

- Insurance claim processing.

- Criminal justice risk assessments.

- Employment screening.

Alternatives: A compliance-friendly approach is to use inherently interpretable models, such as decision trees or logistic regression, or to apply explanation tools such as SHAP and LIME to more complex models.
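Interpretable models suit regulated decisions because the reasons for a denial can be read directly from the model. The sketch below is a logistic-regression-style score with simple "reason codes." The intercept, weights, feature names, baseline applicant, and 0.5 cutoff are all hypothetical, not a real lender's model. This is a plain linear attribution (weight times deviation from a baseline), not SHAP or LIME.

```python
import math

# Hypothetical model: intercept and per-feature weights in log-odds units.
INTERCEPT = 0.5
WEIGHTS = {"debt_to_income": -4.0, "late_payments": -0.8, "years_employed": 0.15}
# Hypothetical reference applicant used as the baseline for explanations.
BASELINE = {"debt_to_income": 0.30, "late_payments": 0.0, "years_employed": 5.0}

def approval_probability(applicant: dict) -> float:
    """Logistic function of a linear score."""
    z = INTERCEPT + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))

def denial_reasons(applicant: dict, top_n: int = 2) -> list[str]:
    """Rank features by how far they pushed the score below the baseline."""
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    negative = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [f for _, f in negative[:top_n]]

applicant = {"debt_to_income": 0.55, "late_payments": 3, "years_employed": 1}
p = approval_probability(applicant)
if p < 0.5:  # hypothetical approval cutoff
    print(f"Denied (p={p:.2f}); main factors: {denial_reasons(applicant)}")
```

Because each feature's contribution is additive in a linear model, the explanation is exact for this model. That is the property a compliance team can audit.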

When Implementation Costs Exceed Value Generation

AI implementation costs tend to be higher than companies expect. In addition to licensing software, organizations have to consider the expenses of preparing data, training models, monitoring them, educating personnel, and managing compliance.

Small-scale operations particularly suffer from this cost-benefit imbalance. For instance, a company with ten job applications per position will gain little to no value from an AI resume screening system. A human review takes a couple of minutes and provides better insight into the candidates.

Here’s where the AI implementation costs hide:

- Preparing and cleaning the data.

- Hiring and training specialized staff.

- Model monitoring and maintenance.

- Compliance and audit documentation.

- Integration with existing business systems.

ROI evaluation framework: Calculate the full implementation cost against current process costs. Include time-to-value considerations and opportunity costs of staff time diverted from core business activities.
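The core of this ROI comparison is simple arithmetic. The sketch compares the costs of an AI tool (licensing plus the hidden costs listed above) with what the current process costs, then reports a payback period. All figures are hypothetical placeholders to replace with your own estimates. Time-to-value and opportunity costs are not modeled here and would need extra terms.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class AICosts:
    """Hypothetical costs of an AI solution, in dollars."""
    licensing_per_year: float
    monitoring_per_year: float
    compliance_per_year: float
    data_preparation_once: float
    integration_once: float
    staff_training_once: float

    def recurring(self) -> float:
        return self.licensing_per_year + self.monitoring_per_year + self.compliance_per_year

    def one_off(self) -> float:
        return self.data_preparation_once + self.integration_once + self.staff_training_once

def payback_years(costs: AICosts, current_cost_per_year: float,
                  residual_manual_cost_per_year: float) -> Optional[float]:
    """Years to recover one-off costs; None if the AI never pays for itself."""
    annual_saving = (current_cost_per_year
                     - residual_manual_cost_per_year  # humans still review some work
                     - costs.recurring())
    if annual_saving <= 0:
        return None  # recurring AI costs exceed the savings on the current process
    return costs.one_off() / annual_saving

costs = AICosts(licensing_per_year=6_000, monitoring_per_year=4_000,
                compliance_per_year=2_000, data_preparation_once=15_000,
                integration_once=10_000, staff_training_once=4_000)
print(payback_years(costs, 100_000, 30_000))  # 0.5 (high-volume process)
print(payback_years(costs, 1_000, 200))       # None (small-scale process)
```

With the same tool, a high-volume process recovers its setup costs in months. A small operation never does, which matches the small-company hiring example above.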

When AI Introduces Unacceptable Business Risks

AI systems can generate brand new risks not associated with traditional business processes. These risks can outweigh the benefits, especially in reputation-sensitive sectors.

Take a biometric hiring assessment, for instance. Using AI to assess candidates based on voice tone, facial analysis, or body language creates significant regulatory compliance risks and exposure to discrimination lawsuits. The reputational damage from a discrimination claim can outweigh any efficiency these systems deliver.

Here are the major AI risk categories:

- Bias-related discrimination.

- Fines for data privacy violations.

- Declining performance due to model drift.

- System compromise through adversarial attacks.

- Loss of human oversight as processes become automated.

Risk mitigation strategy: Do not implement new systems without first performing due diligence on generative AI security risks. Compare the results of older techniques, which may be considerably less risky.

Red Flags: Signs Your Business Isn't Ready for AI

Assessing your organization's position in the AI readiness continuum is crucial for developing a successful AI approach. To facilitate this evaluation, note the signs that your organization lacks readiness for AI.

Your Data Exists in Silos

Disconnected systems are one of the largest hurdles to adopting AI. When your CRM, finance, and operations systems do not communicate with one another, you end up with data silos, which undermine AI initiatives. In fact, 57% of organizations say their data is not “AI-ready,” and those without ready data will likely fail to achieve their AI objectives.

To function properly, AI models need consolidated, easily accessible data. If customer data lives in one system, transaction data in another, and operational metrics in a third, your AI projects will likely face challenges from the start. This is why data silos are among the biggest barriers to becoming a data-driven business.

The answer lies in data integration. Organizations must dismantle data silos and connect disparate systems to create a single source of truth. Modern integration tools such as AWS Glue, Fivetran, and Matillion make this feasible even for smaller businesses. Without access to clean, integrated data, AI systems cannot deliver reliable results.
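At the smallest scale, a "single source of truth" starts with joining records from separate systems on a shared key. This hypothetical sketch merges customer records from a CRM and a finance system by customer ID and reports records that exist in only one system. The field names are invented. Real integration tools add scheduling, schema mapping, and change tracking on top of this basic idea.

```python
def merge_silos(crm: dict[str, dict], finance: dict[str, dict]):
    """Join two systems keyed by customer ID; return merged rows and orphans."""
    shared = crm.keys() & finance.keys()
    # On a field-name clash, the finance value overwrites the CRM value.
    merged = {cid: {**crm[cid], **finance[cid]} for cid in sorted(shared)}
    only_crm = sorted(crm.keys() - finance.keys())
    only_finance = sorted(finance.keys() - crm.keys())
    return merged, only_crm, only_finance

crm = {"C1": {"name": "Acme"}, "C2": {"name": "Globex"}}
finance = {"C1": {"balance": 1200.0}, "C3": {"balance": 50.0}}
merged, only_crm, only_finance = merge_silos(crm, finance)
print(merged)        # {'C1': {'name': 'Acme', 'balance': 1200.0}}
print(only_crm)      # ['C2']
print(only_finance)  # ['C3']
```

The orphan lists matter as much as the merged rows. They show exactly where the silos disagree before any model is trained on the data.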

You Are Still Dependent on Spreadsheets

Spreadsheets make a poor foundation for AI initiatives. MarketWatch reports that as many as 88% of spreadsheets contain some form of error. In 2012, a copy-and-paste error in an Excel-based risk model contributed to JPMorgan's "London Whale" trading losses of more than $6 billion. If important business functions are poorly automated and run on Excel, introducing AI will only automate the inefficient processes and errors that already exist.

The UK's Financial Conduct Authority has specifically highlighted the risks of uncontrolled spreadsheets in regulated industries. A single misplaced formula can produce flawed insights at the enterprise level, especially when AI amplifies the mistake across automated workflows.

Organizations must identify high-value activities that are currently confined to spreadsheets and move them onto more robust systems. This means migrating data into structured databases, building reports in business intelligence tools like Power BI or Tableau, and putting controls on the data flow from source systems all the way to storage. This is the data infrastructure needed to support AI models.

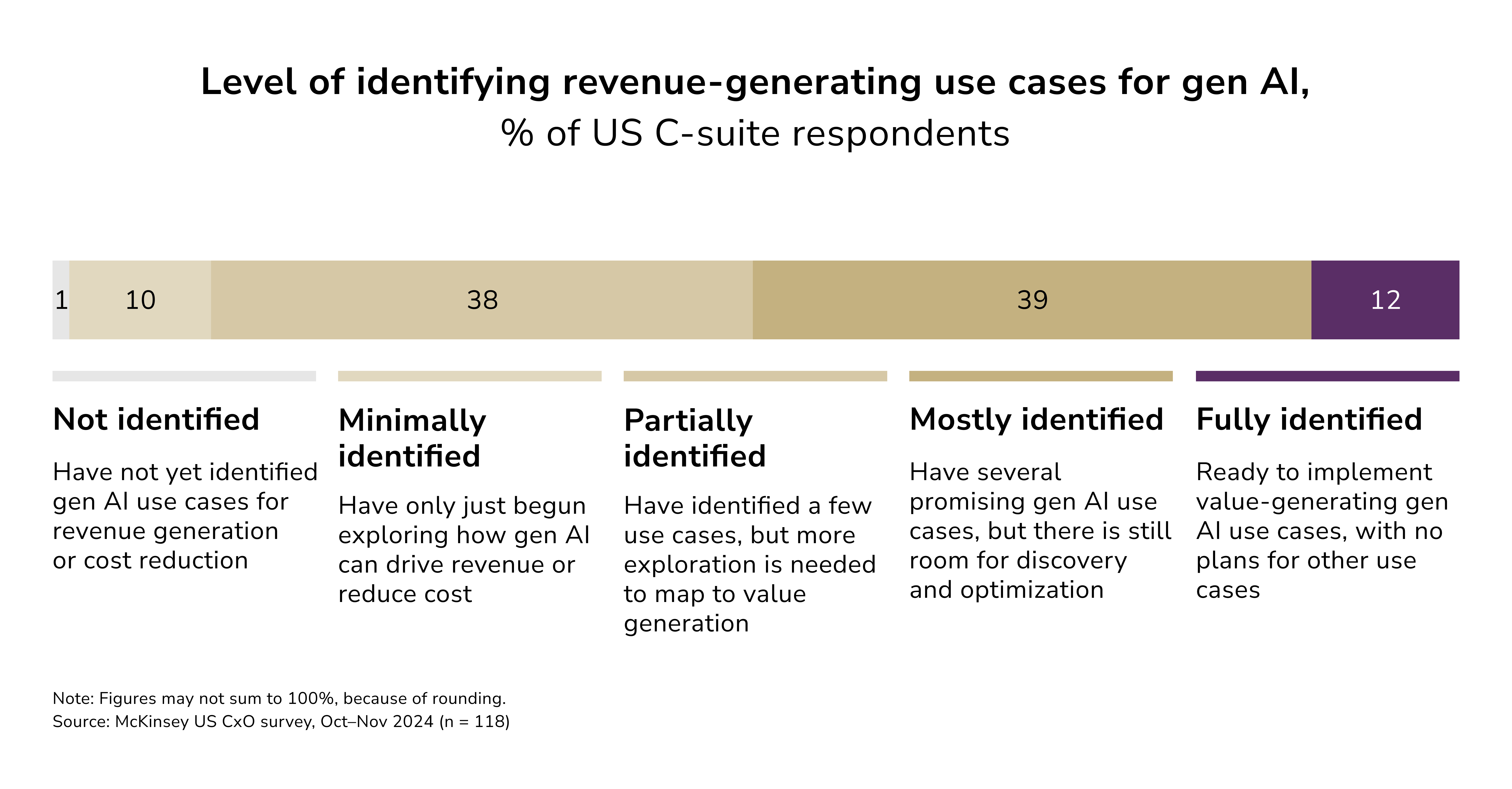

No Clear Use Case or Strategy

If you cannot name a specific problem AI is meant to solve, it's too early to start. Pursuing AI just because “everyone else is doing it” is the surest way to waste funds. Research by PwC indicates that although 86% of executives consider AI to be a mainstream technology, fewer than 20% have adopted it at scale within their business.

The absence of well-defined, actionable use cases is a primary reason for the gap.

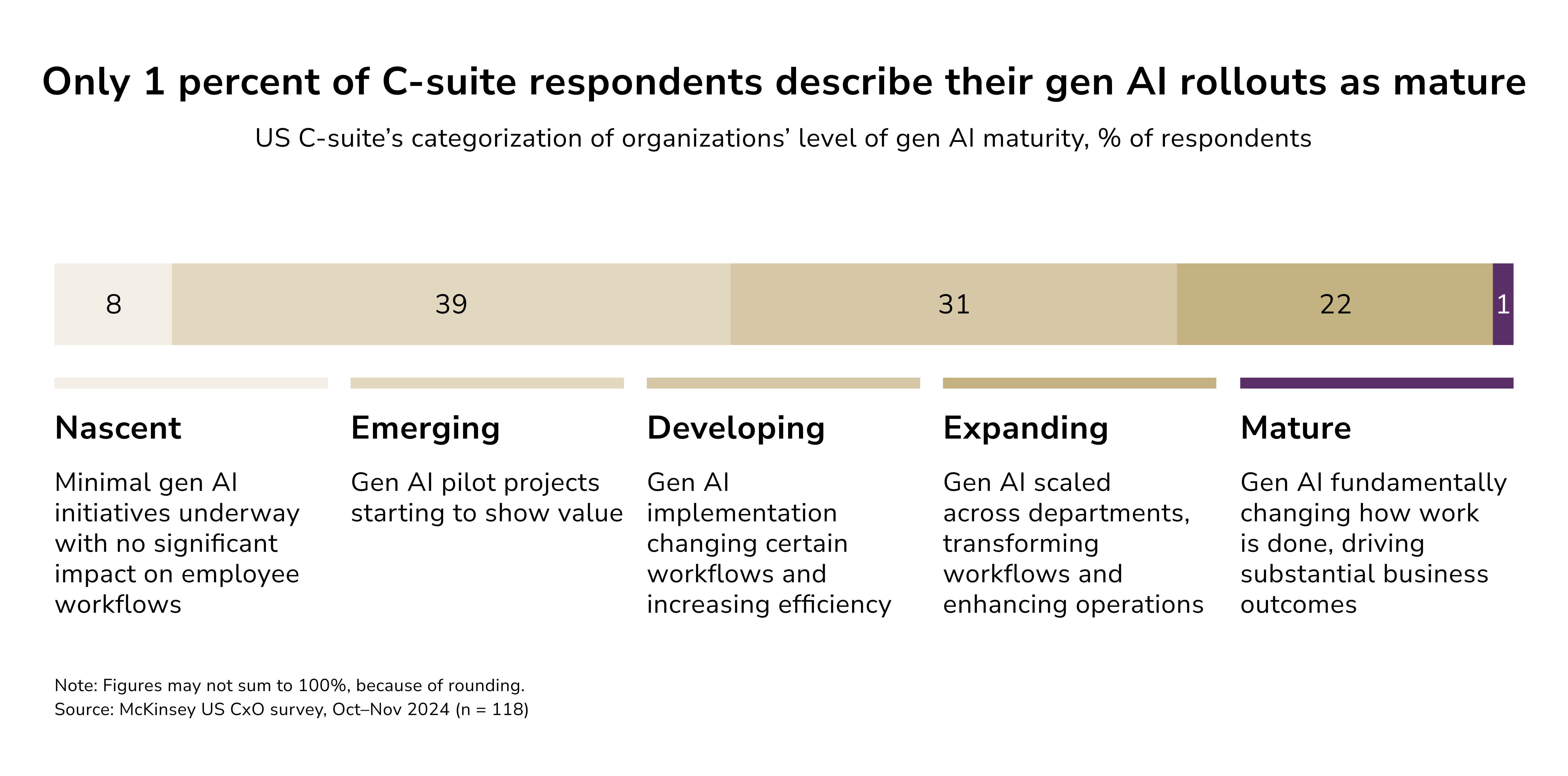

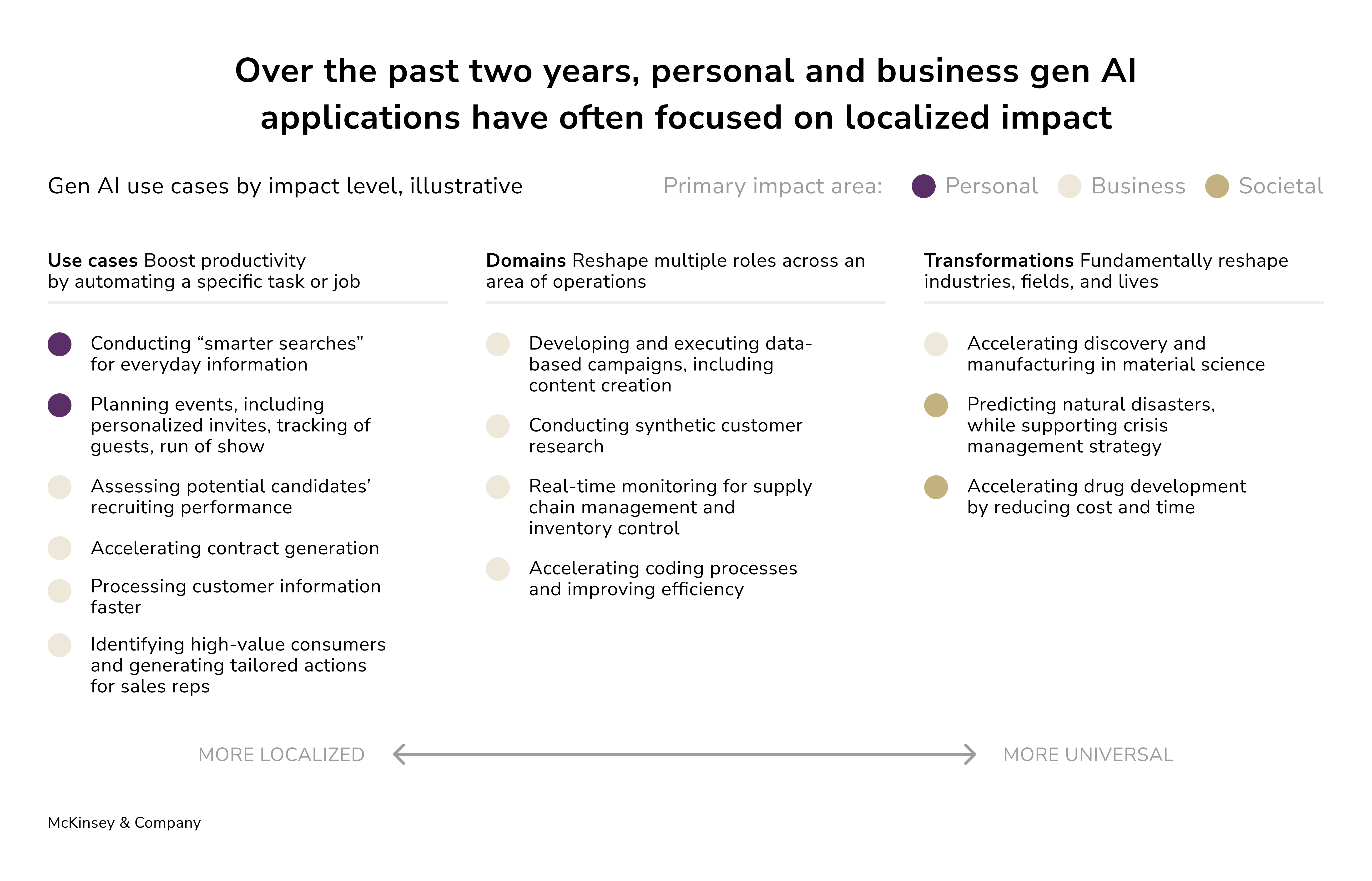

When organizations launch an AI pilot, they need to define the specific business outcomes they want to achieve; otherwise, the initiative risks losing momentum. This is one reason businesses mostly start with use cases that have a localized impact.

Companies should conduct an AI opportunity discovery and value assessment to prioritize AI use cases. To prove business value and demonstrate ROI, organizations need to set outcome metrics before an AI implementation project starts. Clear metrics also support an incremental investment strategy for extending the solution to the broader use cases envisioned.

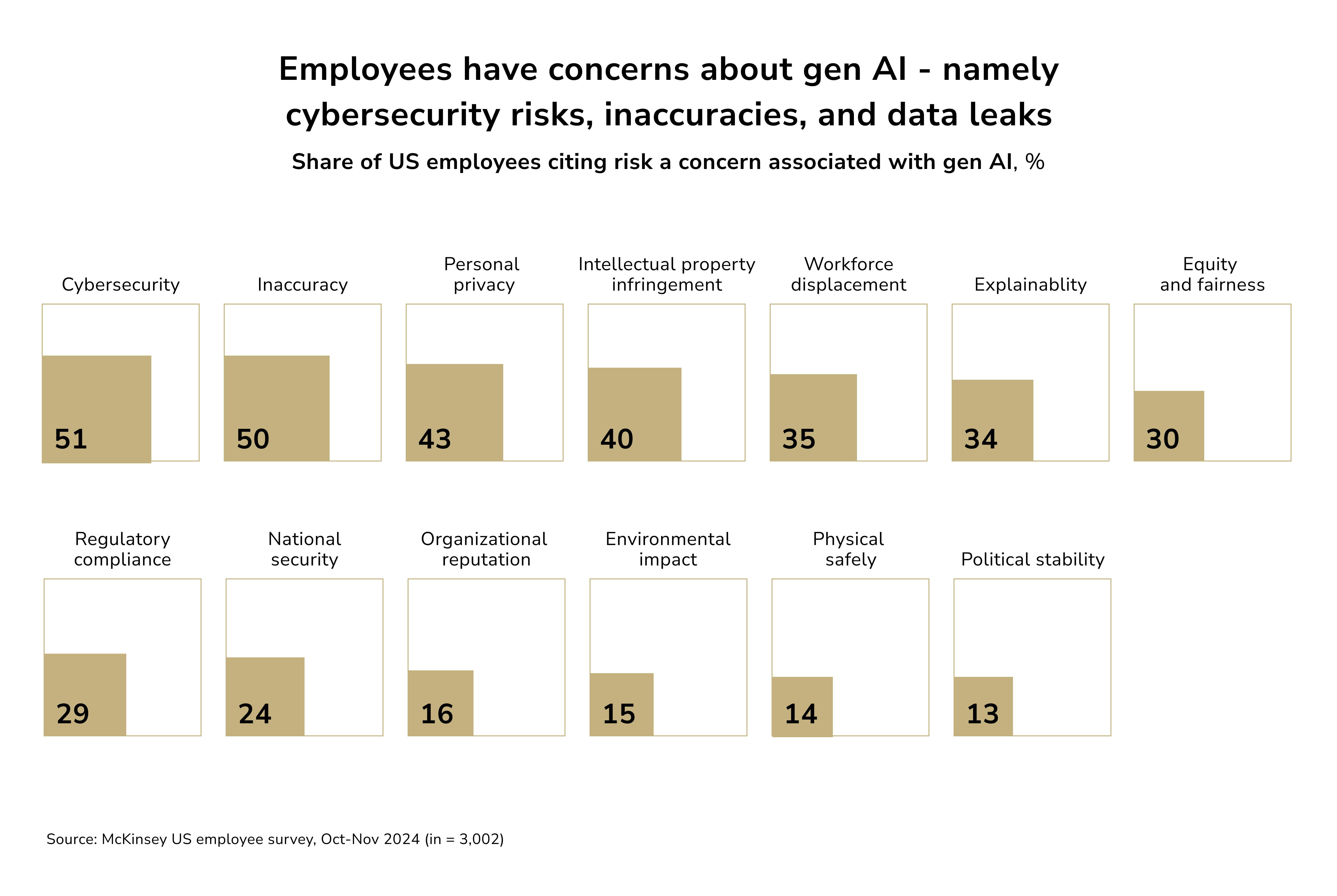

Cultural Resistance to Change

AI adoption raises major people-related issues. A lack of reskilling and upskilling is one of the most pressing cultural challenges to AI adoption. Employee resistance to AI tools and AI training programs stems mostly from fear of job displacement or of losing control to advanced systems.

In this case, leadership buy-in means everything. Start with small AI experiments contained in a sandbox within your organization, so they never reach customers or the outside world.

Lack of Basic Analytics and Automation

If a business has not automated its basic processes or mastered business intelligence, introducing AI is likely to be ineffective. Companies that pursue advanced AI without a foundation of automated processes and analytics often become “paralyzed,” according to a Harvard Business Review article. In this situation, organizations tend to become burdened by expensive black-box systems that cannot be utilized effectively.

An organization that isn’t already using analytics to drive decisions, or that still relies on manual processes, will likely struggle to capture AI’s value. A clear warning sign is a business pursuing AI for its own sake without first optimizing more basic digital processes.

Skill Gaps and Talent Shortage

Every successful AI implementation needs data scientists and engineers, or at least people trained to use AI and machine learning tools. An unskilled and untrained workforce indicates that the business is not prepared to leverage AI. Even companies pioneering self-service AI tools are struggling to recruit enough AI talent and to build AI competency within the organization. The problem is compounded when spending on AI infrastructure is not matched by investment in training people to use the tools. A warning sign is having no data science personnel on staff and no coordinated plan to hire that expertise or access it through partnerships.

Inadequate Technology Infrastructure

High-performance AI systems require advanced computing and seamless integration with other cloud systems. To implement and scale AI tools, you need modern cloud infrastructure, robust systems free of legacy constraints that hinder foundational AI work, and advanced GPU computing resources. It is also unwise to overlook weak data privacy or poor cybersecurity practices, because AI will amplify risks that were previously contained.

A red flag here is an IT department that already struggles with current data volumes or software but has no plans to scale its infrastructure or to put processes in place for securing the data used in AI projects.

Should one or more of these red flags seem familiar, take the time to build more solid ground first. By some estimates, only about a quarter of organizations have achieved tangible value from AI so far. The rest are likely still working out what value AI can bring them, often because of the gaps described above. The following section provides a roadmap to help you address exactly that.

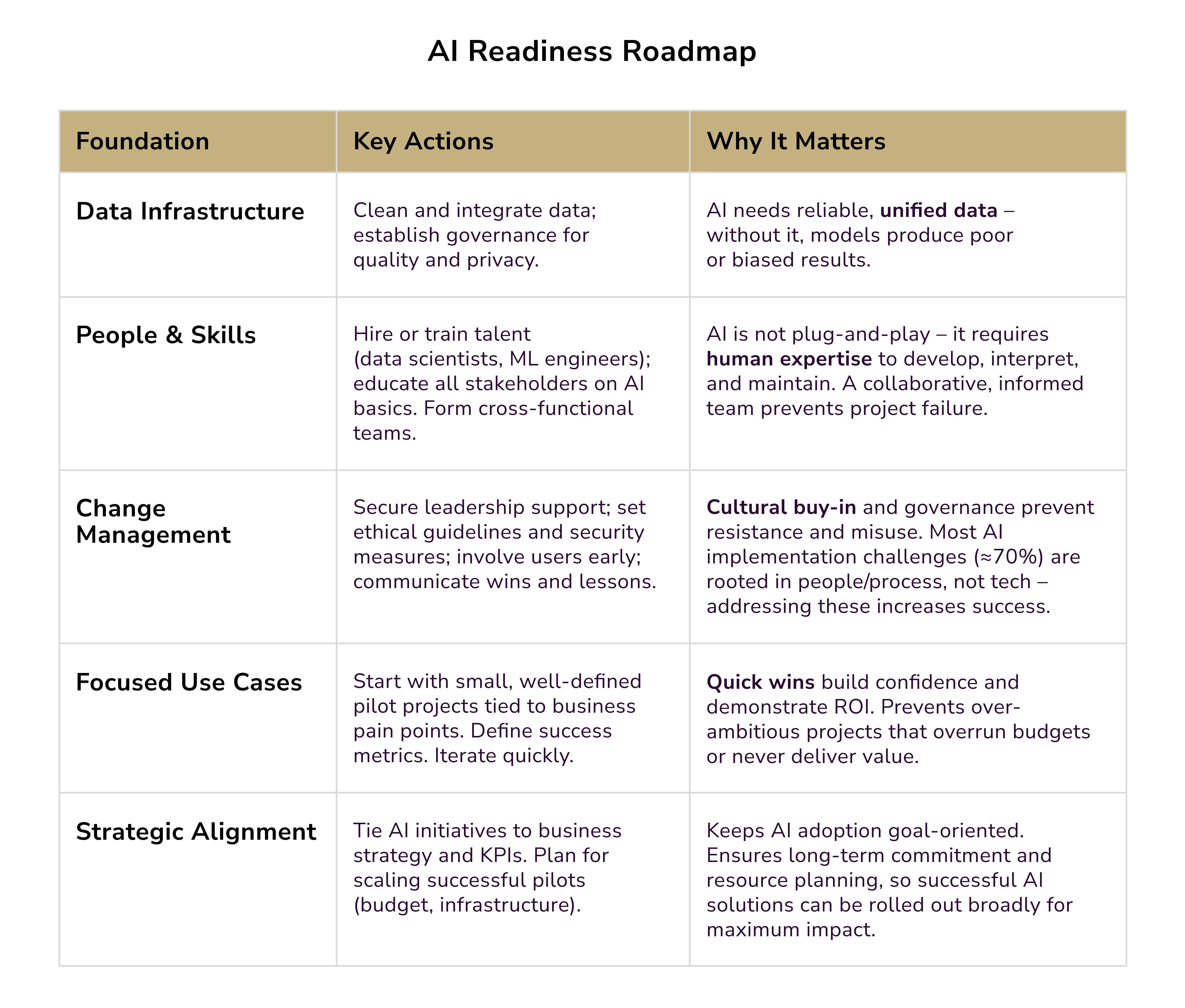

Building AI Readiness: A Strategic Foundation

So far, business leaders have struggled to capture the value AI has to offer. After the first wave of AI hype, however, the focus has shifted to real value and competitive edge, which matter most to any business.

Below are the key principles we found worth keeping in focus when pursuing a successful implementation.

1. Data Must Accurately Reflect Your Process

If you want to predict parts of a business process, you need a complete dataset that reflects the whole process. Suppose you have a dataset on the performance of your best employees, and you want to use it to enhance your hiring decisions. This idea has a major flaw. Your hiring procedure involves sifting through 1,000 applications, from which you identify 100 candidates. You then assess these 100 for the high-performance criteria.

The problem is that the people you analyze are not the whole population. You have only analyzed candidates who passed your screening process. Your data completely ignores the 900 candidates who did not make it, some of whom might have had outstanding potential.

Solution: For any decision where you plan to use AI, strive to collect data that represents your complete decision-making process, including both selected and rejected alternatives. This applies to customer segmentation, vendor selection, geographic location planning, and hiring decisions alike. Do not base future selection decisions solely on the outcomes of past selections; otherwise, you will be laying a weak and biased foundation.

2. Comprehensive Data Doesn't Guarantee Meaningful Patterns

Abundant representative data may simply constitute extensive noise rather than valuable insight. Just because you have a large data set, it does not mean you can apply advanced techniques and obtain actionable intelligence. Organizations are often tight-lipped about the numerous failures that accompany their successful machine learning projects. Data mining earns its name precisely because not every data source contains valuable insights.

For instance, employee evaluation programs might seem ideal for ML-based analysis because they offer potential correlations between individual characteristics and innovation success. In practice, however, even rigorous analysis of such data often surfaces no meaningful patterns. Success or failure may hinge on random, unobservable variables, like the mood of the contributor or the evaluator.

Solution: Focus on domains where decision quality varies significantly between experts and beginners. If an experienced senior manager has repeated success in, for example, pricing strategy, supplier selection, or hiring, that indicates a pattern that might be useful for your organization. Human experts also rely on pattern recognition: their algorithm is the mind, and their data set is experience.

Another option is to work with academic researchers. Most academics are not under immediate pressure to turn a profit. They are paid to identify curious patterns in data and can afford a wider range of unprofitable searches than most businesses are willing to allow. Such projects are often valuable training opportunities for doctoral students and can be a win-win for the business and the researchers.

3. Patterns Must Remain Consistent Over Time

Every machine learning algorithm assumes tomorrow's world will resemble yesterday's. While ML can surprise us by revealing stable patterns invisible to human perception, that stability isn't guaranteed. If a process is too volatile, even extensive historical data will be of no use, because there is no stable pattern to detect. Consider an AI model for wealth management trained on the behavior of multinational corporations between 2000 and 2015. Historians and researchers might value that data, but it would be unreasonable to treat it as a predictor of corporate behavior in 2019, given the significant global shifts in between.

Solution: Prioritize projects in relatively stable domains or in domains that change in predictable ways. When you are unsure about stability, rely on external exploration or segment the data into shorter, more consistent periods, and retrain your models frequently.
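Segmenting data into more consistent periods can be as simple as fitting only on a recent window and refitting as new data arrives. In this hypothetical sketch, a metric shifts from one regime to another partway through its history. The "model" is deliberately trivial, just a mean. A forecast built on all history stays anchored to the old regime, while a rolling-window forecast adapts. The window size of 20 is an arbitrary assumption.

```python
import statistics

def full_history_forecast(history: list[float]) -> float:
    """Predict the next value as the mean of everything seen so far."""
    return statistics.fmean(history)

def rolling_window_forecast(history: list[float], window: int = 20) -> float:
    """Predict the next value from the most recent `window` observations only."""
    return statistics.fmean(history[-window:])

# Hypothetical series: a stable regime at 10, then a shift to 20.
history = [10.0] * 50 + [20.0] * 50
print(full_history_forecast(history))    # 15.0
print(rolling_window_forecast(history))  # 20.0
```

Refitting on each new window is the "retrain frequently" step. The trade-off is that a shorter window reacts faster to change but has less data to learn from.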

4. Patterns Shouldn't Perpetuate Problematic Practices

Amazon built a machine learning tool to screen job candidates but abandoned it after the tool produced gender-biased recommendations. The algorithm had learned and replicated the social biases embedded in the historical hiring data used to train it, mirroring the company's past gender-skewed recruitment patterns. The case highlights the need for responsible AI development: a system trained on a systematic pattern of discriminatory decisions cannot correct itself.

Solution: Well-designed algorithms can give HR executives more accurate insights, including in situations where managers themselves are unaware of discriminatory practices. In such cases, it is more useful to improve the algorithms by adding bias assessments than to abandon them altogether. Bias-free ML applications for personnel data should be the industry standard, which also means removing prejudice from the human processes that generate the data.

5. Not All Pattern-Based Predictions Generate Equal Value

When it comes to machine learning, success can be defined in many ways, including prediction accuracy. One straightforward application is building systems that match human accuracy at significantly lower cost. This is essentially automation, and all the previous principles apply.

In the most ambitious implementations of machine learning, the goal is to gain a competitive edge by outperforming human predictors in accuracy. However, pursuing this path isn’t always the most worthwhile, even if it is possible. Not every gain in accuracy is valuable, as the context of the accuracy determines the value. For example, a company that forecasts the weather and improves its predictions by 2% is unlikely to sell its system for a premium. However, a 2% improvement in predictions by a search engine like Google would likely lead to the company dominating the market.

Solution: Seek value-accuracy relationships where the slope is steep. Apply machine learning where slight accuracy adjustments through algorithmic decision-making provide large rewards—situations where the business faces “increasing returns” scenarios. The challenge is to pinpoint business contexts where accuracy improvements would provide steep returns.

Final Word

As you evaluate AI tools for your business, ask yourself: Does this technology free me to focus on work that requires human judgment, creativity, and connection? If the answer is yes, you're on the right path toward leveraging AI while preserving the human elements that drive lasting success.

The future doesn't belong to humans or machines; it belongs to those who understand how to combine both effectively while never losing sight of what makes us irreplaceable.

Roman Zomko
