Trends in Machine Learning 2024

Machine learning's ability to adapt and improve over time is changing how industries work, improving efficiency, personalization, and problem-solving across numerous domains. The technology finds applications in diverse fields, from healthcare and finance to autonomous vehicles and natural language processing. It helps uncover valuable insights from vast datasets, streamlines processes, enhances decision-making, and powers innovations.

But what awaits machine learning in 2024? What are the trends in AI and machine learning for the next year? Here’s our version!

Low-Code and No-Code Machine Learning

Low-code and no-code machine learning promises to simplify the process of developing and deploying machine learning models, making AI more accessible to a broader audience. However, it is worth noting that these tools might have limitations regarding model complexity and customization.

Low-code machine learning platforms provide a visual interface and pre-built components, allowing developers with some coding skills to streamline the development process. These platforms reduce the need for writing extensive code, making model development faster and more efficient.

No-code machine learning tools take accessibility a step further. They require little to no coding expertise, making AI and machine learning accessible to individuals without programming backgrounds. Users can build models through drag-and-drop interfaces, enabling quick prototyping and deployment.

Low-code and no-code machine learning not only democratizes AI but also enables quick model prototyping and reduces the need for highly specialized data scientists and developers. Another advantage of low-code and no-code machine learning tools is that they facilitate innovation, enabling businesses to integrate AI into their operations for improved decision-making, automation, and efficiency.

The introduction of low-code and no-code machine learning represents a significant shift in the AI landscape, empowering a wider range of users to leverage the benefits of machine learning for a variety of applications.

Tiny ML

What is Tiny ML? Tiny Machine Learning, or TinyML, is an emerging field that focuses on running machine learning models on small, resource-constrained devices, such as microcontrollers, embedded systems, and Internet of Things (IoT) devices, without the need for a constant connection to the cloud or powerful servers.

TinyML is being used in a wide range of applications, from predictive maintenance in industrial equipment to health monitoring in wearable devices and even in autonomous drones. Because TinyML aims to operate on devices with limited processing power and energy resources, it is suitable for battery-powered and remote sensors, wearables, and other edge devices.

For models to run efficiently on resource-constrained devices, engineers develop custom-designed hardware accelerators and neural processing units. The models themselves are also optimized, for example through quantization and pruning, to perform inference directly on the device rather than rely on cloud-based processing. This improves privacy and reduces latency.

There are a few challenges that engineers need to solve to unlock the potential of Tiny Machine Learning. These include limited memory and processing power, and the need for energy-efficient algorithms.
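To make the memory constraint more concrete, here is a minimal sketch of symmetric int8 quantization, one common way to shrink a model for small devices. Storing a weight as an 8-bit integer instead of a 32-bit float cuts its storage by a factor of four, at the cost of a small rounding error. The function names and the sample weights are our own illustration, not part of any particular TinyML toolkit.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Fall back to a scale of 1.0 when every weight is zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.05, 0.40]  # made-up example values
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print("int8 values:", q)
    print("largest rounding error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Real deployments typically rely on a framework's own quantization tooling and may use per-channel scales or zero points; the sketch only shows the core idea.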

Nevertheless, the TinyML trend has the potential to transform various industries by enabling real-time decision-making and autonomous operation of edge devices, contributing to the growth of the Internet of Things and smart, connected systems.

MLOps

MLOps (Machine Learning Operations) is a set of practices and tools that aim to streamline and automate the end-to-end process of deploying, managing, and monitoring machine learning models in a production environment. It brings together elements from machine learning, software development, and operations. It helps to create a more efficient and collaborative workflow for data scientists, engineers, and other stakeholders.

So what do you need to know about introducing MLOps?

- MLOps emphasizes version control for machine learning artifacts, including code, datasets, and models, ensuring reproducibility and tracking changes over time.

- It automates the model training, deployment, and monitoring process, reducing manual errors and improving deployment speed.

- MLOps borrows CI/CD principles from software development to enable automated testing, validation, and seamless model deployment.

- MLOps encourages cross-functional collaboration between data scientists, data engineers, and operations teams, fostering a more integrated workflow.

- It incorporates monitoring solutions to track model performance, detect drift, and ensure models continue to perform as expected in real-world scenarios.

- MLOps enables efficient resource allocation and scaling of computing resources as needed for model training and deployment.
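The drift detection mentioned in the list above can be sketched in a few lines. One widely used measure is the Population Stability Index (PSI), which compares how a feature is distributed in the training data with how it is distributed in live traffic. The example below is a simplified illustration under our own assumptions: the bin edges, the sample data, and the function names are hypothetical, and production monitoring tools usually do much more.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Return the share of values in each bin; out-of-range values go to the edge bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)
        counts[idx] += 1
    # A small floor keeps the logarithm defined for empty bins.
    return [max(c / len(values), 1e-6) for c in counts]


def population_stability_index(
    reference: Sequence[float], live: Sequence[float], edges: Sequence[float]
) -> float:
    """PSI = sum over bins of (live - reference) * ln(live / reference)."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    training_scores = [0.1, 0.3, 0.6, 0.9] * 25    # made-up reference data
    live_scores = [0.8, 0.9, 0.95, 0.85] * 25      # made-up shifted data
    print("PSI:", population_stability_index(training_scores, live_scores, edges))
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but the right threshold depends on the feature and the use case.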

The adoption of MLOps platforms and practices is driven by the need for organizations to operationalize their machine learning models, ensuring they are reliable, scalable, and maintainable in production environments. It helps bridge the gap between data science and IT operations, ultimately improving the success rate of machine learning projects and reducing the time-to-market for AI-based solutions.

Natural Language Processing (NLP) and Generative Pre-trained Transformer (GPT)

Natural Language Processing (NLP) is a subfield of artificial intelligence (AI) that focuses on enabling computers to comprehend and interpret human language in a manner that is contextually relevant and semantically accurate. In voice applications, NLP goes beyond basic speech recognition (converting speech to text) to understand the meaning and context of spoken language.

Applications of Natural Language Processing are wide-ranging, from virtual assistants like Siri and Google Assistant to customer service chatbots, transcription services, and voice-controlled smart devices. It plays a pivotal role in making human-computer interactions more natural, efficient, and user-friendly.

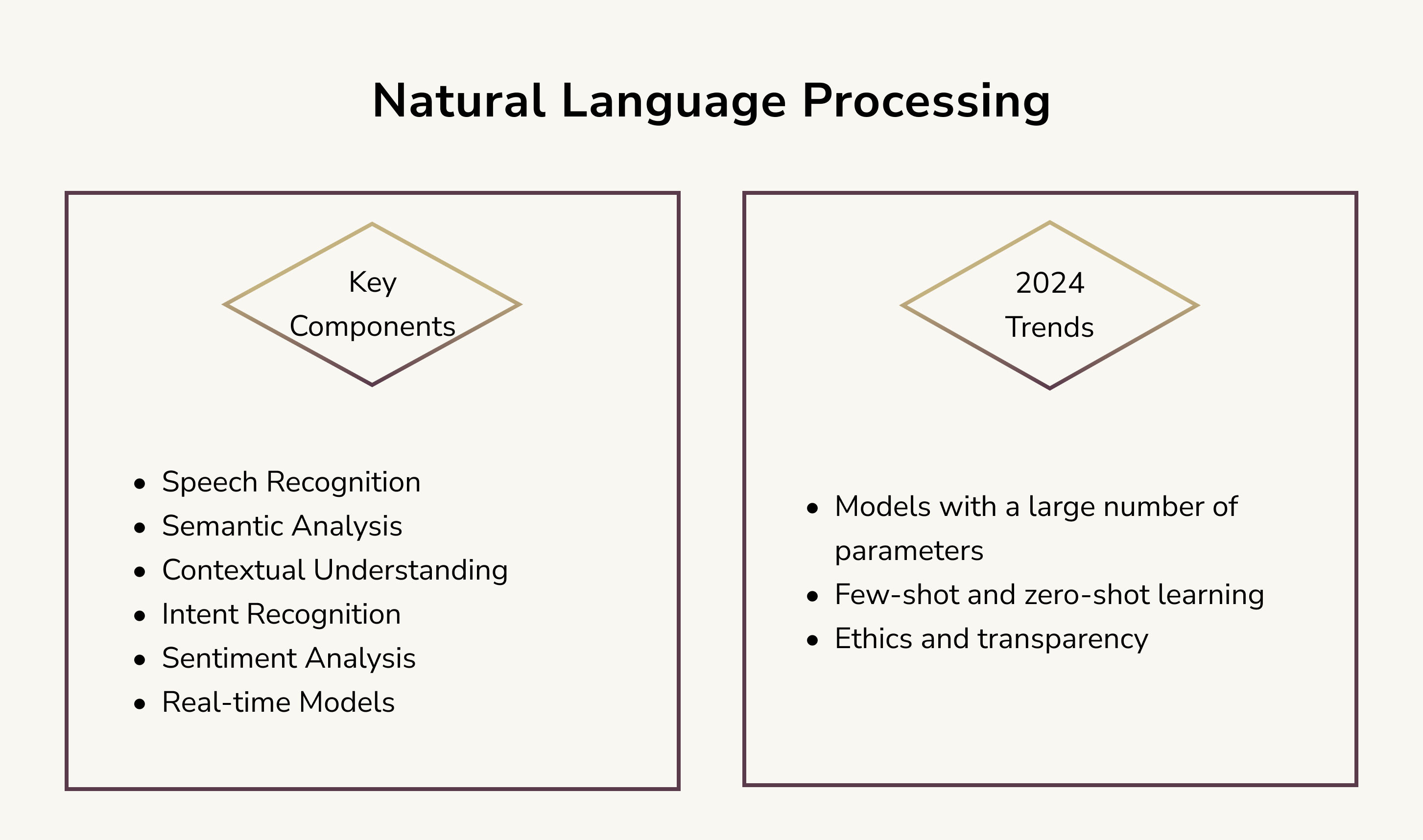

When talking about the Natural Language Processing trend, it is necessary to mention these key components:

- Speech Recognition. The process begins with converting spoken language into written text using speech recognition technology.

- Semantic Analysis. NLP involves deep semantic analysis of the transcribed text to understand the underlying meaning, including context, intent, and sentiment.

- Contextual Understanding. It takes into account the broader context of the conversation, including previous exchanges and user-specific information, to provide more meaningful and contextually relevant responses.

- Intent Recognition. NLP identifies the user's intent, helping systems respond appropriately to user queries or commands.

- Sentiment Analysis. Understanding the emotional tone or sentiment in speech is crucial for applications like customer support and chatbots.

- Real-time Models. DistilBERT and TinyBERT are smaller, distilled versions of BERT. Their emergence allows NLP models to be deployed in real time on mobile devices or in other limited computing environments.
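To give a feel for one of the components listed above, here is a deliberately simple sketch of intent recognition. It compares a user's utterance with one example sentence per intent using bag-of-words cosine similarity and picks the closest match. Modern systems use trained language models instead; the intents, example sentences, and function names here are hypothetical.

```python
import math
from collections import Counter
from typing import Dict


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count word occurrences."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical intents, each described by a single example utterance.
INTENT_EXAMPLES: Dict[str, str] = {
    "check_balance": "what is my account balance",
    "reset_password": "i forgot my password and need to reset it",
    "talk_to_agent": "let me talk to a human agent",
}


def recognize_intent(utterance: str) -> str:
    """Return the intent whose example is most similar to the utterance."""
    query = bag_of_words(utterance)
    return max(
        INTENT_EXAMPLES,
        key=lambda name: cosine_similarity(query, bag_of_words(INTENT_EXAMPLES[name])),
    )


if __name__ == "__main__":
    print(recognize_intent("please reset my password"))
```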

2024 trends for NLP include:

- Models with a large number of parameters. Modern NLP models range from hundreds of millions of parameters (BERT) to hundreds of billions (GPT-3 has 175 billion). This allows them to store a huge amount of information but also requires large resources for training.

- Few-shot and zero-shot learning. Models like GPT-3 can handle tasks given only a few examples, or sometimes none at all, thanks to their scale and pre-training.

- Ethics and transparency. Just like with the other fields of ML, issues related to bias in NLP models, data privacy, and ethical use of AI are among the burning topics for the ML community.
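Few-shot and zero-shot learning from the list above usually come down to how the prompt is written: the examples are placed directly in the text given to the model. The sketch below only assembles such a prompt as a string and does not call any model. The `Review:` and `Sentiment:` labels and the function name are our own illustrative choices.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a prompt from a task description, labeled examples, and a new input."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final entry is left unlabeled so the model completes it.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


if __name__ == "__main__":
    examples = [
        ("Great phone, love the camera", "positive"),
        ("Stopped working after a week", "negative"),
    ]
    print(build_few_shot_prompt("Classify the sentiment of each review.", examples, "Battery died fast"))
```

Passing an empty list of examples turns the same function into a zero-shot prompt, where the model has only the task description to go on.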

Natural language processing continues to advance with the integration of machine learning and deep learning techniques, making it a critical component of modern AI systems.

GPT, while closely related to NLP, is a specific family of models rather than a field. GPT is a text-generating model that has been pre-trained on a large corpus of data. This unsupervised training allows the model to gain a general understanding of the language. After that, the model can be fine-tuned for specific NLP tasks, such as text classification or text generation.

GPT belongs to the family of models based on the transformer architecture, which was introduced in 2017 in the paper "Attention Is All You Need" by researchers at Google. The main feature of transformers is the attention mechanism, which allows the model to focus on the relevant parts of the text during processing. OpenAI has developed several versions of GPT, up to the most recent, GPT-4.
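The attention mechanism is easier to grasp with a small example. The sketch below implements scaled dot-product attention, the building block described in "Attention Is All You Need": each query is compared with every key, the scores are scaled by the square root of the key dimension and passed through a softmax, and the resulting weights are used to average the values. It is written in plain Python for readability; real models use optimized tensor libraries, multiple attention heads, and learned projections, none of which are shown here.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V one query row at a time."""
    d_k = len(keys[0])
    output = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        output.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return output


if __name__ == "__main__":
    # Made-up vectors: the query matches the first key far better than the second.
    result = scaled_dot_product_attention(
        queries=[[10.0, 0.0]],
        keys=[[10.0, 0.0], [0.0, 10.0]],
        values=[[1.0, 0.0], [0.0, 1.0]],
    )
    print(result)
```

In the demo, almost all of the attention weight goes to the first value because its key lines up with the query, which is the "focus on relevant parts" behavior in miniature.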

AutoML

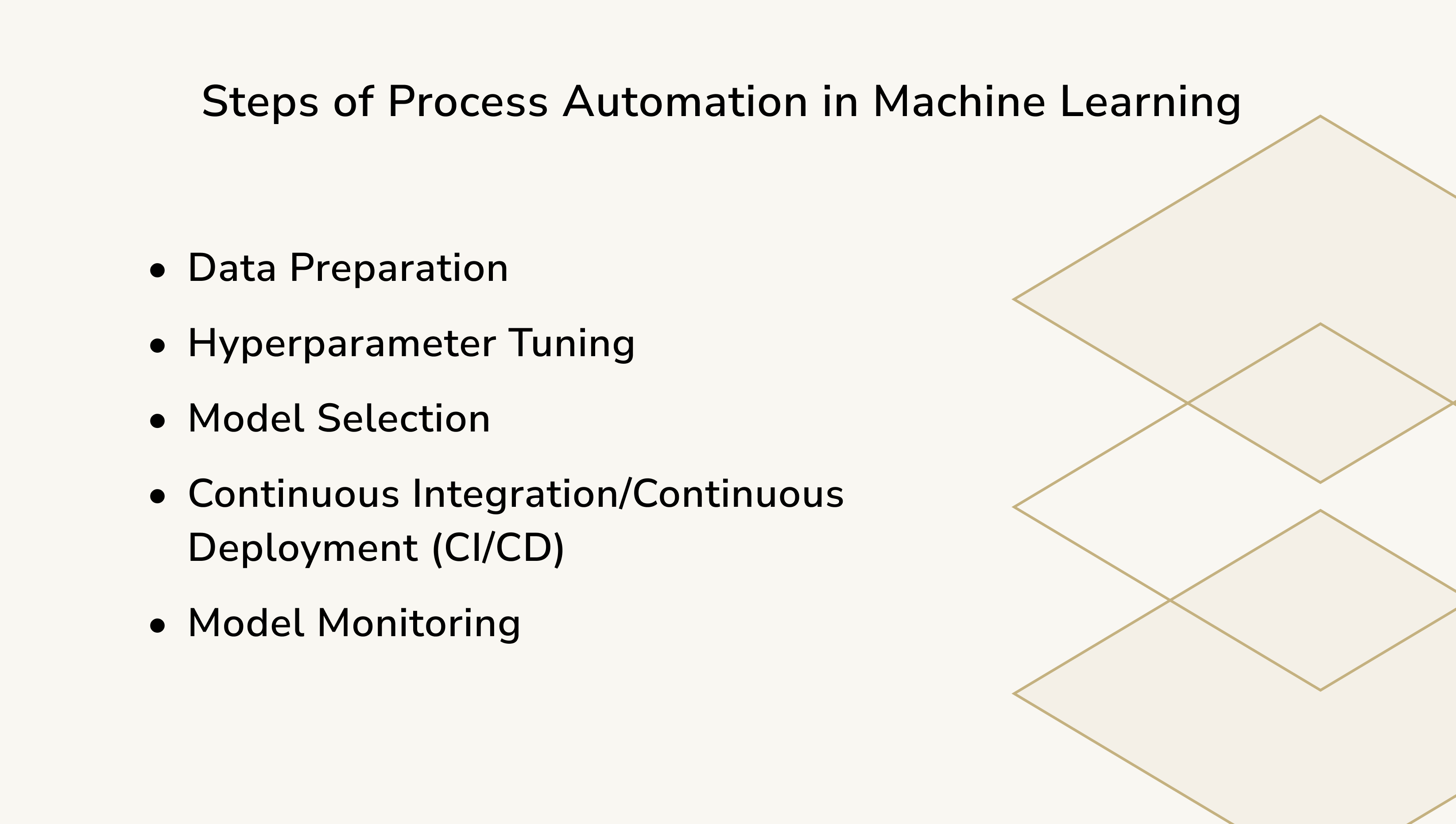

Automated Machine Learning (also known as AutoML) is a cutting-edge technology that simplifies and accelerates the machine learning model development process. Process automation here refers to the use of automated workflows and tools to streamline and optimize various stages of the machine learning lifecycle, from data preprocessing and model development to deployment and monitoring. It aims to reduce manual labor and potential errors, making the end-to-end machine learning process more efficient, reproducible, and manageable.

The steps of Process Automation in machine learning include:

- Data Preparation. Automated data preprocessing tools ensure that the data is ready for modeling by cleaning, transforming, and normalizing data.

- Hyperparameter Tuning. AutoML platforms automate the search for optimal hyperparameters, improving model performance without extensive manual tuning.

- Model Selection. Automated processes can select the most suitable machine learning algorithms and architectures for a given task, saving time and resources.

- Continuous Integration/Continuous Deployment (CI/CD). Automation helps in deploying models seamlessly, integrating them into production systems, and monitoring their performance.

- Model Monitoring. Automated tools can continuously track model performance, detect anomalies, and retrain models when necessary, maintaining their accuracy and reliability over time.
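The hyperparameter tuning step from the list above can be illustrated with a tiny grid search. The sketch tries several values of `k` for a hand-written k-nearest-neighbors classifier and keeps the one with the best accuracy on a held-out validation set. AutoML platforms use far more sophisticated search strategies and models; the data, the candidate values, and the function names below are made up for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, int]  # (feature value, class label 0 or 1)


def knn_predict(train: List[Point], x: float, k: int) -> int:
    """Predict the majority label among the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > len(nearest) else 0


def accuracy(train: List[Point], valid: List[Point], k: int) -> float:
    """Share of validation points that are classified correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in valid)
    return hits / len(valid)


def grid_search(train: List[Point], valid: List[Point], candidates: List[int]) -> Tuple[int, float]:
    """Evaluate every candidate k and return the first one with the best score."""
    best_k, best_score = candidates[0], -1.0
    for k in candidates:
        score = accuracy(train, valid, k)
        if score > best_score:
            best_k, best_score = k, score
    return best_k, best_score


if __name__ == "__main__":
    # Labels switch from 0 to 1 around x = 5; the point at x = 3 is deliberately mislabeled.
    train = [(0.0, 0), (1.0, 0), (2.0, 0), (3.0, 1), (4.0, 0),
             (5.0, 1), (6.0, 1), (7.0, 1), (8.0, 1), (9.0, 1)]
    valid = [(2.9, 0), (3.1, 0), (6.5, 1), (0.5, 0), (8.5, 1)]
    print(grid_search(train, valid, candidates=[1, 3, 5]))
```

With `k = 1` the classifier copies the mislabeled point, while larger values of `k` smooth it out, which is the kind of trade-off an automated search is meant to find without manual trial and error.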

Automated machine learning tools and platforms, such as Google's AutoML, H2O.ai, and Auto-Sklearn, are becoming increasingly popular, aiding organizations in extracting insights, improving decision-making, and creating predictive models for a wide range of applications.

Process Automation in machine learning not only accelerates the development and deployment of AI solutions but also enhances collaboration among data science teams and IT operations. It plays a crucial role in making machine learning more accessible to organizations, as it reduces the expertise required and ensures that machine learning models remain effective and dependable in real-world applications.

Challenges in Machine Learning

This article on machine learning trends would not be complete without mentioning the challenges faced by engineers who contribute to the development of machine learning. It is also important to keep these challenges in mind because, to some extent, they set the direction for future development of the technology.

- Transparency. This is one of the key machine learning challenges: complex models are often black boxes that are hard to interpret, their decisions can be difficult to explain to non-experts, and there’s a risk of data bias leading to unfair outcomes.

- Security. Among the main possible risks are adversarial attacks that manipulate models, data breaches compromising sensitive information, and the potential misuse of AI for malicious purposes.

- Ethics. The major ethical challenges of machine learning include discriminatory outcomes, bias in training data, invasion of privacy, and the responsible use of AI. It is crucial to address bias, develop ethical guidelines, and ensure transparency and fairness.

- Costs. The expense of training complex models as well as high computational and infrastructure requirements calls for efficient resource allocation and budget-conscious decision-making in machine learning projects.

- Noise. While ML models extract patterns, they can be prone to overfitting, where they memorize noise in the training data rather than learn genuine patterns. This emphasizes the importance of data preprocessing, feature selection, and model regularization.
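As a small illustration of the regularization mentioned in the last item, the sketch below fits a one-parameter linear model `y ≈ w * x` with an L2 (ridge) penalty. Minimizing the squared error plus `l2 * w**2` has the closed-form solution `w = sum(x*y) / (sum(x*x) + l2)`, so a larger penalty pulls the weight toward zero and makes the model less able to chase noise. The data and the function name are our own toy example.

```python
from typing import List


def fit_ridge_slope(xs: List[float], ys: List[float], l2: float) -> float:
    """Closed-form slope for y ≈ w * x with an L2 penalty of l2 * w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + l2)


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0]
    ys = [2.1, 3.9, 6.3]  # made-up noisy measurements of roughly y = 2x
    for penalty in (0.0, 1.0, 10.0):
        print(f"l2={penalty}: slope={fit_ridge_slope(xs, ys, penalty):.3f}")
```

Choosing the penalty strength is itself a tuning problem: too little leaves the model free to overfit, too much flattens genuine patterns.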

Machine Learning Use Cases in Various Industries

Last but not least, we would like to give you examples of how AI and ML might change almost every industry. Although in some cases it is debatable whether the changes these use cases bring are for better or for worse, we have to be prepared for a future influenced by technology.

Here are a few examples of how machine learning is used or might be used in different fields:

- Healthcare. Analyzing patients’ medical data and genomic information makes it possible to detect diseases early and to tailor treatment to each patient, making it more effective.

- Smart Cities. ML might assist with traffic optimization, smart lighting, or waste management systems that automatically respond to the needs of the city’s inhabitants.

- Entertainment. Scripts and music for TV shows and movies could be automatically generated according to general public preferences. Also, deepfake technology (which at the moment has mostly negative connotations) could be successfully used for cinematic purposes.

- Ecology. With the state of our planet being a question of the utmost importance, machine learning can become an integral part of automated systems for monitoring climate change or detecting damaging activities such as illegal logging and deforestation.

- Education. As a part of the evolving edtech industry, AI and ML might be useful for systems that adapt educational material to the individual needs and pace of the student.

- Energy. ML can forecast renewable energy production based on weather conditions and optimize energy use in networks based on consumption data.

- Science. ML has wide application in science. We will use astronomy as an example: ML can analyze large sets of astronomical data, potentially revealing previously unknown patterns, new galaxies, or even signs of life.

- Agriculture. ML might revolutionize agriculture and food production with smart machines that harvest automatically, determine the optimal time for harvesting, or detect and treat diseased plants.

Conclusion

The evolving landscape of machine learning continues to shape the future of technology and our world at large, and the machine learning industry trends described above are the proof. From the rise of TinyML and NLP to the practical applications of AI in healthcare, finance, and more, the possibilities are both exciting and transformative. Embracing these advancements, we are entering a new era of discovery, innovation, and positive change across industries and society as a whole.

However, the ethical usage of machine learning is one of the essential questions to consider when talking about this technology. Ensuring ethical ML helps prevent biased decision-making, discrimination, and privacy breaches. It promotes fairness, transparency, and responsible use, which are critical for trust, legal compliance, and the positive societal impact of machine learning applications. The journey of machine learning is far from its final destination, and the path ahead is filled with endless opportunities to harness its power for the betterment of our world.

Anton Vorontsov
