How-To: Developing Data Processing Software

MarketsandMarkets research projects that the global big data market will reach $273 billion by 2026, growing at a compound annual growth rate (CAGR) of 11% between 2023 and 2026. Because data processing is central to big data, the data processing market can be expected to grow as the big data market expands.

What is data processing software? Data processing software enables businesses to harness the power of data by collecting, analyzing, and transforming raw data into meaningful insights. Understanding the crucial features of data processing software and the types of data output is essential for CEOs, CTOs, Development Team Leads, and startup owners looking to make informed decisions and drive business growth. This article gives a brief overview of data processing software, explains why it matters, and explores the types of data output that serve different needs.

The importance of this topic cannot be overstated, as data processing software plays a pivotal role in empowering organizations to make data-driven decisions, optimize processes, and uncover new opportunities. The target audience for this article will benefit from understanding the critical aspects of data processing software, enabling them to select the best solution for their specific needs and make the most of their data assets.

What is Data Processing?

So, what is data processing? Data processing is the collection, manipulation, transformation, and analysis of data to convert it into meaningful information that can be used for decision-making, reporting, or further processing. It involves several steps, including data input, processing, storage, and output.

Common examples of data processing include:

- Calculating the average temperature from a week's worth of temperature data.

- Filtering a list of emails to display only the unread messages.

- Analyzing customer purchasing data to identify trends and preferences.

- Generating financial reports from transaction data.

- Processing images to identify objects and patterns.
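The first two examples above can be sketched in a few lines of Python. The temperature readings and email records below are hypothetical sample data, and the field names (`subject`, `read`) are assumptions for illustration only.

```python
from statistics import mean

# Hypothetical daily temperature readings (°C) for one week.
week_temps_c = [21.5, 23.0, 19.8, 22.4, 24.1, 20.7, 22.0]
average_temp = round(mean(week_temps_c), 1)
print(average_temp)  # 21.9

# Hypothetical inbox; each message records whether it has been read.
emails = [
    {"subject": "Invoice", "read": True},
    {"subject": "Meeting moved", "read": False},
    {"subject": "Welcome", "read": False},
]
# Keep only the unread messages.
unread = [email["subject"] for email in emails if not email["read"]]
print(unread)  # ['Meeting moved', 'Welcome']
```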

Data processing is also used in many fields, such as:

- Business. For analyzing sales data, financial transactions, and customer demographics.

- Healthcare. For processing medical records, diagnostic test results, and patient data.

- Education. For tracking student performance, attendance, and enrollment data.

- Government. For analyzing census data, tax records, and public service usage.

- Research. For processing and analyzing large datasets to identify patterns and trends.

Finally, to fully grasp data processing, let’s discuss data processing systems. These are software applications, hardware devices, or a combination of both, designed to manage, manipulate, and analyze data. These systems are built to handle different data types, ranging from structured (e.g., spreadsheets, databases) to unstructured (e.g., text documents, images, audio files). Some common types of data processing systems include:

- Database management systems (DBMS). Software applications that enable database creation, maintenance, and manipulation.

- Data warehouses. Large-scale storage solutions that collect, store, and analyze data from various sources for business intelligence and decision-making.

- Big data processing systems. Frameworks and tools, such as Hadoop and Spark, designed to process, analyze, and manage large volumes of structured and unstructured data.

- Data analytics tools. Software applications that enable the analysis and visualization of data, such as Tableau, Power BI, and Google Analytics.

- Machine learning and artificial intelligence platforms. Systems that use algorithms and statistical models to process data, identify patterns, and make predictions or decisions.

Data processing software is used in many fields and comes in many shapes and sizes. What matters most, however, is the benefits it can bring.

Importance of Data Processing

Developing data processing software opens the door to a range of benefits. Here are some of the most important ones:

- More Informed Decision-Making. Data processing allows organizations to analyze and interpret raw data, providing insights that inform strategic decisions. A retail company analyzes sales data to identify the best-selling products and allocates resources accordingly to maximize profits.

- Increased Efficiency. By automating repetitive tasks and streamlining data handling processes, data processing software saves time and resources. Automating data entry and validation in a supply chain system reduces manual errors and accelerates order processing.

- Enhanced Customer Experience. Data processing helps organizations better understand customer behavior, preferences, and needs, enabling them to improve their products and services. A streaming service analyzes user data to recommend personalized content, improving engagement and satisfaction.

- More Targeted Marketing. Analyzing customer data through data processing enables businesses to create more focused and effective marketing campaigns. An e-commerce company segments customers based on purchase history and demographics, tailoring marketing messages to each segment.

- Improved Risk Management. Data processing allows organizations to identify patterns and trends that can signal potential risks, helping them to take proactive measures. A financial institution processes transaction data to detect unusual patterns, flagging potential fraud cases for further investigation.

- Improved Performance Monitoring. By processing and analyzing performance data, organizations can identify areas for improvement and track progress toward goals. A manufacturing company monitors production data to identify bottlenecks and optimize processes, increasing overall efficiency.

- Greater Competitive Advantage. Organizations that process and analyze data effectively can uncover new opportunities, driving innovation and staying ahead of competitors. A tech company processes user feedback data to identify unmet needs and develops new features that give it a competitive edge.

- Better Compliance and Reporting. Data processing helps organizations maintain accurate records, generate reports, and ensure compliance with industry regulations and standards. A healthcare provider processes patient data to generate reports for regulatory bodies, demonstrating compliance with data protection regulations like HIPAA.
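The risk management example above (flagging unusual transactions) can be illustrated with a minimal sketch. It assumes a deliberately simple rule chosen for illustration, not taken from any real fraud system: a new transaction is flagged if it exceeds the customer's historical mean by more than `k` sample standard deviations. The function `flag_unusual` and the amounts are hypothetical.

```python
from statistics import mean, stdev

def flag_unusual(history, new_amounts, k=3.0):
    """Return new amounts more than k sample standard deviations above the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # requires at least two historical values
    threshold = mu + k * sigma
    return [amount for amount in new_amounts if amount > threshold]

past_amounts = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0]  # hypothetical card history
print(flag_unusual(past_amounts, [41.0, 47.0, 950.0]))  # [950.0]
```

The statistics are computed from past transactions only, so a single extreme new value cannot inflate the threshold that is used to judge it. Production fraud detection typically combines many signals and learned models rather than one threshold.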

Reaping these benefits requires an in-depth understanding of data processing. A good starting point is to look at the main types of data processing.

Data Processing Types

Data processing comes in many forms. The main types worth knowing are the following:

Real-Time Processing

Real-time processing computes data immediately, as it is generated or received. This approach captures and analyzes incoming data streams as they arrive, so organizations can act promptly on the insights the analysis provides. Although real-time processing resembles transaction processing in producing real-time output, the two differ in how they handle data loss: real-time processing prioritizes speed, computing incoming data rapidly and skipping over errors rather than stopping, then moving on to the next data input. GPS-tracking applications are a prime example of real-time data processing.

In addition, real-time processing may be preferred over transaction processing when approximate answers are sufficient. Stream processing, popularized by Apache Storm, is a common real-time data processing application in data analytics. It is used to analyze continuously arriving data, such as IoT sensor readings or real-time consumer activity. Cloud data platforms like Google BigQuery and Snowflake support real-time processing, allowing data to flow into AI- and machine learning-powered business intelligence tools that produce valuable insights for decision-making.
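A minimal sketch of the stream-processing idea, written in plain Python rather than with Apache Storm or a cloud platform: each reading is handled the moment it arrives, a running result is emitted immediately, and malformed inputs are skipped instead of halting the pipeline, mirroring the speed-over-completeness trade-off described above. The `rolling_average` function and the sample feed are hypothetical.

```python
from collections import deque
from typing import Iterable, Iterator

def rolling_average(readings: Iterable[object], window: int = 3) -> Iterator[float]:
    """Yield the average of the last `window` valid readings as each new reading arrives."""
    recent = deque(maxlen=window)  # the oldest value drops off automatically
    for raw in readings:
        try:
            value = float(raw)
        except (TypeError, ValueError):
            continue  # skip a malformed reading and move on to the next input
        recent.append(value)
        yield sum(recent) / len(recent)

sensor_feed = [10, "bad", 20, 30, None, 40]  # hypothetical sensor values
print(list(rolling_average(sensor_feed)))  # [10.0, 15.0, 20.0, 30.0]
```

Because `rolling_average` is a generator, it works equally well on an unbounded source (for example, a socket or message queue) as on a finite list.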

Transaction Processing

Transaction processing is a data processing approach that manages transactions: events or activities that must be recorded and stored, such as sales and purchases in a database. This form of data processing is essential in mission-critical situations where disruptions could significantly impact business operations, such as processing stock exchange transactions.

In transaction processing, system availability is the top priority, and it depends on both hardware and software reliability:

- Hardware. A transaction processing system should have hardware redundancy so that it keeps operating through partial failures. Redundant components are designed to take over automatically, keeping the computer system running smoothly.

- Software. Transaction processing systems should be equipped with software that can recover rapidly from failures. This is typically achieved through the transaction abstraction: in the event of a failure, uncommitted transactions are aborted, so processing units can restart and resume operations quickly.

Transaction processing systems are designed to maintain data integrity and ensure critical business processes continue without interruption. They are commonly utilized in finance, retail, and logistics, where the accurate and timely recording of transactions is crucial for smooth business operations. By implementing a robust transaction processing system, organizations can minimize the risks associated with system failures, enhance operational efficiency, and improve their overall performance.
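The transaction abstraction can be sketched with Python's built-in `sqlite3` module. Used as a context manager, a `sqlite3` connection commits if the block succeeds and rolls back if an exception escapes it, so a failed transfer leaves no partial update behind. The `accounts` table, the `transfer` and `balances` helpers, and the sample balances are hypothetical; a production transaction processing system would add much more (durability settings, concurrency control, redundancy).

```python
import sqlite3

def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move `amount` between accounts atomically; return False if the transaction was rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes the block
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
            (balance,) = conn.execute(
                "SELECT balance FROM accounts WHERE id = ?", (src,)
            ).fetchone()
            if balance < 0:
                raise ValueError("insufficient funds")  # aborts the uncommitted transaction
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
        return True
    except ValueError:
        return False

def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

print(transfer(conn, "alice", "bob", 30), balances(conn))   # True {'alice': 70, 'bob': 80}
print(transfer(conn, "alice", "bob", 500), balances(conn))  # False {'alice': 70, 'bob': 80}
```

The second transfer debits Alice inside the transaction, detects the negative balance, and raises; the rollback restores her balance as if the debit never happened.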

Batch Processing

Batch processing, as the name implies, refers to processing data that has accumulated over a period of time in groups, or batches. This data processing type is essential for business owners and data scientists who must analyze large volumes of data for in-depth insights. Sales figures, for instance, typically undergo batch processing, enabling businesses to use data visualization tools such as charts, graphs, and reports to extract valuable information from the data. Due to the substantial volume of data involved, batch processing can be time-consuming. However, processing data in batches conserves computational resources and helps ensure accuracy.

Batch processing is often preferred over real-time processing when precision matters more than speed. This approach suits applications requiring in-depth analysis, such as financial reporting, inventory management, or customer behavior analysis. The efficiency of batch processing can be measured in terms of throughput, which represents the amount of data processed per unit of time.

By implementing batch processing, organizations can optimize their data analysis processes, reduce the strain on computational resources, and generate accurate insights that inform decision-making. This data processing type is widely used across various industries, including finance, healthcare, and retail, where precision and comprehensive analysis are crucial to driving business success.
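A minimal sketch of batch processing: accumulated sales records are read in fixed-size batches and rolled up into totals per product. The batch size, the `(product, amount)` record shape, and the helper names are hypothetical; in a real pipeline each batch might be a file, a database page, or a partition.

```python
from collections import Counter
from itertools import islice
from typing import Iterable, Iterator

def batches(records: Iterable, size: int) -> Iterator[list]:
    """Split any iterable into lists of at most `size` items."""
    it = iter(records)
    while chunk := list(islice(it, size)):
        yield chunk

def total_sales_by_product(records, batch_size=1000):
    """Process accumulated (product, amount) records one batch at a time."""
    totals = Counter()
    for batch in batches(records, batch_size):
        for product, amount in batch:
            totals[product] += amount
    return dict(totals)

day_of_sales = [("apple", 3), ("pear", 2), ("apple", 5), ("plum", 1), ("pear", 4)]
print(total_sales_by_product(day_of_sales, batch_size=2))  # {'apple': 8, 'pear': 6, 'plum': 1}
```

To measure the throughput mentioned above, one could time a run with `time.perf_counter()` and divide the number of records by the elapsed seconds. On Python 3.12+, `itertools.batched` can replace the hand-written `batches` helper.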

Distributed Processing

Distributed processing is a computing approach in which operations are divided across multiple computers connected through a network. This type aims to deliver faster and more reliable services than a single machine can achieve. Distributed processing is especially useful for datasets too large to fit on a single machine.

By breaking down sizable datasets and distributing them across numerous machines or servers, distributed processing improves data management. It often relies on a distributed file system, such as the Hadoop Distributed File System (HDFS), and offers high fault tolerance: if a server in the network fails, its data processing tasks can be swiftly reallocated to other available servers, minimizing downtime and maintaining efficiency.

Distributed processing also offers cost savings, as businesses can avoid investing in expensive mainframe computers and their maintenance. Instead, they can leverage the power of multiple interconnected systems to achieve their data processing goals.

Typical distributed processing applications include stream and batch processing, providing scalable and efficient solutions for handling large-scale data processing tasks. Organizations can streamline their data management, improve system reliability, and reduce operational costs by adopting distributed processing, ultimately driving better business outcomes across various industries.
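The fault-tolerance idea can be simulated in a single process. This is a toy model, not how Hadoop or Spark actually schedule work: the "servers" are plain Python functions, a failed server raises `ConnectionError`, and the coordinator reassigns that shard to the next server before merging the partial results in a map-then-reduce pattern. All function names and data are hypothetical.

```python
def word_count(shard):
    """Map step: count words in one shard of lines."""
    counts = {}
    for line in shard:
        for word in line.split():
            counts[word] = counts.get(word, 0) + 1
    return counts

def failing_server(shard):
    """Stand-in for a server that has gone offline."""
    raise ConnectionError("server unavailable")

def run_job(shards, servers):
    """Send each shard to a server, reassigning it to the next server if one fails."""
    merged = {}
    for i, shard in enumerate(shards):
        for attempt in range(len(servers)):
            server = servers[(i + attempt) % len(servers)]
            try:
                partial = server(shard)
                break  # shard processed successfully
            except ConnectionError:
                continue  # reassign the shard to the next server
        else:
            raise RuntimeError(f"shard {i} failed on every server")
        # Reduce step: fold this shard's counts into the overall result.
        for word, n in partial.items():
            merged[word] = merged.get(word, 0) + n
    return merged

shards = [["a b a"], ["b c"], ["a c c"]]
servers = [word_count, failing_server, word_count]
print(run_job(shards, servers))  # {'a': 3, 'b': 2, 'c': 3}
```

Shard 1 is first routed to the failing server and transparently retried on the third one, so the final result is the same as if every server had been healthy.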

Multiprocessing

Multiprocessing is a data processing approach that involves two or more processors working on the same dataset simultaneously. While it may seem similar to distributed processing, there is a crucial distinction: in multiprocessing, the processors are located within the same system and, consequently, in the same geographic location. This means that the failure of a component can slow down the entire system.

In contrast, distributed processing involves servers that are independent of one another and may be located in different geographic areas. Because most modern systems can process data in parallel, multiprocessing is widely used across data processing systems.

Multiprocessing can be considered an on-premise data processing system. Companies that handle highly sensitive information, such as pharmaceutical firms or organizations in the oil and gas extraction industry, may opt for on-premise data processing over distributed processing. This approach allows for greater control over data security and compliance.

However, the primary disadvantage of on-premise multiprocessing is the cost. Building and maintaining in-house servers can be prohibitively expensive. Despite this drawback, multiprocessing remains a popular choice for organizations prioritizing data security and control, providing efficient parallel processing capabilities to support their data-driven decision-making processes.
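A minimal sketch of multiprocessing with Python's standard `multiprocessing.Pool`: one dataset is split into chunks, several processes on the same machine work on their chunks simultaneously, and the partial results are combined. The workload (a sum of squares) and the helper names are hypothetical. The `if __name__ == "__main__":` guard is required on platforms that start worker processes by re-importing the main module.

```python
from multiprocessing import Pool

def sum_of_squares(chunk):
    """Work done by one process: square and sum its share of the data."""
    return sum(x * x for x in chunk)

def split(data, parts):
    """Divide the dataset into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def parallel_sum_of_squares(data, processes=4):
    # Each worker process handles one chunk; the parent combines the results.
    with Pool(processes) as pool:
        return sum(pool.map(sum_of_squares, split(data, processes)))

if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1_000))))  # 332833500
```

For a computation this small, starting processes costs more than it saves; the pattern pays off when each chunk involves substantial CPU-bound work.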

Data Processing Methods

What are data processing methods? In a nutshell, there are three main data processing methods: manual, mechanical, and electronic.

Manual

Manual data processing relies on human effort to collect, organize, and analyze data without automated tools. It is time-consuming and prone to errors but may be suitable for small-scale tasks or when technology is unavailable.

Mechanical

This method uses mechanical devices, such as punch card machines or typewriters, to process data. It is faster than manual processing but slower and less efficient than electronic methods.

Electronic

Electronic data processing utilizes computers and software applications to process, store, and analyze data. It is the most efficient and accurate method, capable of quickly handling large volumes of data and complex operations with minimal errors.

Stages of Data Processing

What are the stages of data processing? The stages of data processing describe the steps taken to convert raw data into meaningful information. These stages may vary depending on the specific processing task, but generally, they include:

Step 1. Converting

Converting transforms raw data into a format suitable for further processing by changing its structure or presentation so that it is compatible with the processing system. Examples include converting temperature measurements from Fahrenheit to Celsius or converting a CSV file to an Excel spreadsheet.
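The temperature example can be sketched with the standard `csv` module, applying the conversion °C = (°F − 32) × 5/9 to each row. The column names `day` and `temp_f`, the function names, and the sample data are hypothetical.

```python
import csv
import io

def fahrenheit_to_celsius(temp_f: float) -> float:
    return (temp_f - 32) * 5 / 9

def convert_temperatures(csv_text: str) -> list[dict]:
    """Read rows with a `temp_f` column and return rows with a `temp_c` column instead."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {"day": row["day"], "temp_c": round(fahrenheit_to_celsius(float(row["temp_f"])), 1)}
        for row in reader
    ]

raw = "day,temp_f\nMon,212\nTue,32\nWed,50\n"
print(convert_temperatures(raw))
# [{'day': 'Mon', 'temp_c': 100.0}, {'day': 'Tue', 'temp_c': 0.0}, {'day': 'Wed', 'temp_c': 10.0}]
```

Note that the conversion also changes data types: CSV yields strings, and `float()` turns them into numbers that later stages can compute with.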

Step 2. Validating

Validating ensures the data is accurate and complete by checking for inconsistencies, missing values, or errors. This may involve comparing the data against predefined rules or reference data, for example, checking that email addresses follow the correct format or that all mandatory fields in a form are filled in.
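A minimal rule-based validation sketch for the form example. The required field names are hypothetical, and the email pattern is deliberately simple ("something@something.something" with no spaces); it is not a full RFC 5322 validator.

```python
import re

# Deliberately simple pattern; NOT a complete RFC 5322 email validator.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
REQUIRED_FIELDS = ("name", "email")  # hypothetical mandatory form fields

def validate(record: dict) -> list[str]:
    """Return a list of problems found in one form submission (empty if valid)."""
    errors = [
        f"missing {field}"
        for field in REQUIRED_FIELDS
        if not str(record.get(field, "")).strip()
    ]
    email = record.get("email", "")
    if email and not EMAIL_PATTERN.fullmatch(email):
        errors.append("invalid email")
    return errors

print(validate({"name": "Ada", "email": "ada@example.com"}))  # []
print(validate({"name": "", "email": "not-an-email"}))        # ['missing name', 'invalid email']
```

Returning a list of problems rather than a single true/false lets the system report every issue in a record at once.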

Step 3. Sorting

Organizing the data in a specific order makes it easier to process and analyze. Examples include sorting a list of products by price, from lowest to highest, to find the most affordable options, or sorting customer records alphabetically by last name.
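Both sorting examples map directly onto Python's built-in `sorted` with a `key` function. The product and customer records are hypothetical; the inconsistent capitalization in the customer data is intentional, to show why a case-insensitive key is useful.

```python
products = [
    {"name": "Desk lamp", "price": 39.99},
    {"name": "Notebook", "price": 4.50},
    {"name": "Headphones", "price": 89.00},
]
# Ascending by price: the most affordable option comes first.
cheapest_first = sorted(products, key=lambda p: p["price"])
print([p["name"] for p in cheapest_first])  # ['Notebook', 'Desk lamp', 'Headphones']

customers = [("Alan", "Turing"), ("Grace", "hopper"), ("Ada", "Lovelace")]
# casefold() makes the comparison case-insensitive, so "hopper" sorts before "Lovelace".
by_last_name = sorted(customers, key=lambda c: c[1].casefold())
print(by_last_name)  # [('Grace', 'hopper'), ('Ada', 'Lovelace'), ('Alan', 'Turing')]
```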

Step 4. Summarizing

Summarizing reduces extensive datasets to smaller, more manageable figures, which provides an overview and helps identify patterns. Examples include calculating the total sales revenue for a specific month or finding the average age of survey participants.
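Both summarizing examples reduce many values to a single figure. The sales records and survey ages below are hypothetical.

```python
from datetime import date
from statistics import mean

# Hypothetical (sale date, amount) records.
sales = [
    (date(2024, 3, 2), 120.0),
    (date(2024, 3, 15), 80.5),
    (date(2024, 4, 1), 200.0),
]
# Total revenue for March 2024 only.
march_revenue = sum(amount for day, amount in sales if (day.year, day.month) == (2024, 3))
print(march_revenue)  # 200.5

survey_ages = [34, 29, 41, 52, 38]  # hypothetical survey responses
average_age = mean(survey_ages)
print(average_age)  # 38.8
```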

Step 5. Aggregating

Combining related data points or records creates a summary or higher-level view of the data. Examples include aggregating daily sales data into monthly totals or grouping customer feedback by product category to identify areas for improvement.
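A minimal aggregation sketch using `collections.defaultdict`. It assumes daily sales are keyed by ISO date strings (`YYYY-MM-DD`), so the first seven characters identify the month; the data and category names are hypothetical.

```python
from collections import defaultdict

# Hypothetical daily sales totals keyed by ISO date.
daily_sales = {"2024-03-01": 150.0, "2024-03-02": 90.0, "2024-04-01": 60.0, "2024-04-02": 40.0}
monthly = defaultdict(float)
for day, total in daily_sales.items():
    monthly[day[:7]] += total  # "2024-03-01" -> "2024-03"
print(dict(monthly))  # {'2024-03': 240.0, '2024-04': 100.0}

# Hypothetical (product category, comment) feedback records.
feedback = [("shoes", "too small"), ("bags", "strap broke"), ("shoes", "sole peeled")]
by_category = defaultdict(list)
for category, comment in feedback:
    by_category[category].append(comment)
print(dict(by_category))  # {'shoes': ['too small', 'sole peeled'], 'bags': ['strap broke']}
```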

Step 6. Analyzing

Examining the data to identify patterns, trends, or relationships yields valuable insights. Examples include analyzing customer purchase data to find the most popular products or examining website traffic data to determine the most effective marketing channels.
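A small analysis sketch for both examples. It assumes "most effective channel" means the highest conversion rate (conversions divided by visits), which is only one of several reasonable definitions; the purchase and traffic figures are hypothetical.

```python
from collections import Counter

purchases = ["mug", "tea", "mug", "kettle", "tea", "mug"]  # hypothetical order lines
top_products = Counter(purchases).most_common(2)
print(top_products)  # [('mug', 3), ('tea', 2)]

# channel -> (visits, conversions); hypothetical traffic data
traffic = {"email": (1200, 60), "search": (3000, 90), "social": (2500, 50)}
conversion_rate = {ch: conv / visits for ch, (visits, conv) in traffic.items()}
best_channel = max(conversion_rate, key=conversion_rate.get)
print(best_channel)  # email
```

Here search brings the most conversions in absolute terms, while email converts the largest share of its visitors, which illustrates why the chosen metric shapes the conclusion.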

Step 7. Reporting

Presenting the results of the data processing and analysis in a clear, concise, and visually appealing format helps communicate the findings to stakeholders. Examples include creating a dashboard with interactive charts and graphs to display sales performance metrics or generating a report with tables summarizing survey results.
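A minimal plain-text reporting sketch: summary rows are rendered as an aligned table with thousands separators. The `text_report` function and its layout are hypothetical; dashboards or PDF reports would pass the same rows to a charting or document library instead.

```python
def text_report(title: str, rows: list[tuple[str, float]]) -> str:
    """Render (label, value) pairs as a fixed-width text table under a title."""
    width = max(len(label) for label, _ in rows)
    lines = [title, "-" * len(title)]
    # Left-align labels, right-align values with two decimals and thousands separators.
    lines += [f"{label:<{width}}  {value:>10,.2f}" for label, value in rows]
    return "\n".join(lines)

print(text_report("Sales by month", [("March", 240.0), ("April", 100.0), ("Total", 1234.5)]))
```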

Step 8. Classifying

Categorizing or grouping data based on specific criteria or attributes helps simplify the data and identify patterns. Examples include classifying customer complaints by type (e.g., product quality, shipping issues, customer service) to determine the most common problems, or segmenting customers by purchase behavior to target marketing efforts.
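A rule-based classification sketch for both examples. The keyword lists and the order-count thresholds are hypothetical. Keyword matching on substrings is naive (for instance, "late" also matches "chocolate"), so real systems often use a trained text classifier instead.

```python
# Hypothetical keyword lists; checked in order, first match wins.
COMPLAINT_KEYWORDS = {
    "shipping issues": ("late", "delivery", "shipping"),
    "product quality": ("broken", "defect", "damaged"),
    "customer service": ("rude", "support", "on hold"),
}

def classify_complaint(text: str) -> str:
    lowered = text.lower()
    for category, keywords in COMPLAINT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return "other"

def segment_customer(order_count: int) -> str:
    """Hypothetical thresholds for purchase-behavior segments."""
    if order_count >= 10:
        return "frequent"
    if order_count >= 3:
        return "occasional"
    return "new"

print(classify_complaint("The item was broken on arrival"))  # product quality
print(segment_customer(4))  # occasional
```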

Crucial Features of a Data Processing Software

Having covered the stages of data processing, let’s explore the crucial features data processing software must have to deliver these benefits.

Integration with Data Sources

A crucial feature of data processing software is integration with various data sources. This allows users to import data from databases, files, and applications, such as spreadsheets, CSV files, or APIs. Good software should handle different formats and make it easy to connect to multiple data sources.
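A minimal sketch of source integration using only the standard library: records arriving as CSV text or JSON text are loaded into the same shape (a list of dictionaries), so later stages can treat them uniformly. The `load` function, its format names, and the sample data are hypothetical; connectors for databases or APIs could be added to the same dispatch table.

```python
import csv
import io
import json

def from_csv(text: str) -> list[dict]:
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]

def from_json(text: str) -> list[dict]:
    data = json.loads(text)
    return data if isinstance(data, list) else [data]  # accept one object or an array

LOADERS = {"csv": from_csv, "json": from_json}

def load(text: str, fmt: str) -> list[dict]:
    """Dispatch to the loader for `fmt`; every loader returns a list of dicts."""
    loader = LOADERS.get(fmt)
    if loader is None:
        raise ValueError(f"unsupported format: {fmt!r}")
    return loader(text)

records = load("id,name\n1,Ada\n", "csv") + load('{"id": 2, "name": "Alan"}', "json")
print(records)  # [{'id': '1', 'name': 'Ada'}, {'id': 2, 'name': 'Alan'}]
```

The output shows a typical integration wrinkle: CSV yields every value as a string while JSON keeps numbers as numbers, so the converting stage still has to normalize types.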

Data Filtering and Sorting

Effective data processing software must include advanced filtering and sorting capabilities. This enables users to analyze and manipulate data efficiently, focusing on specific data subsets or arranging data according to specific criteria. It should offer options like search, filter, and sort, making it easier for users to refine and analyze their data.

Reporting Options Depending on the Data Output Type

Data processing software should offer flexible reporting options tailored to the specific needs of different data output types. This includes generating customizable reports, exporting data to various formats (e.g., PDF, Excel, CSV), and creating interactive dashboards for real-time data monitoring. Offering different output options ensures users can generate actionable insights from their data.

Visualization

Visual representations of data are essential for understanding complex datasets and making informed decisions. Good data processing software should have robust visualization capabilities, allowing users to create charts, graphs, and other visualizations to help them identify data trends, patterns, and relationships. The software should also offer various visualization options, such as bar charts, pie charts, scatter plots, and heat maps, to cater to different analysis needs.

Sharing Options

Collaboration and sharing are crucial aspects of modern data processing. Data processing software should offer sharing options that enable users to collaborate effectively with colleagues and stakeholders. This could include real-time collaboration, the ability to share reports and visualizations through links or embedding, and the option to set access permissions for different users.

Scalability and Performance

Data processing software should be designed to handle large datasets and complex operations without compromising speed or performance. The software should be able to scale as the volume and complexity of the data increase, ensuring that users can continue to derive insights from their data efficiently.

Data Protection and Security

Data processing software should prioritize security and protection. This includes data encryption, user authentication, and access control to protect sensitive information and ensure that only authorized users can access the data. Additionally, the software should comply with relevant data protection regulations, such as GDPR or HIPAA.
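Access control is often implemented as role-based permissions. A minimal deny-by-default sketch follows; the role names, permission names, and the `export_report` function are hypothetical, and a real system would load roles from configuration and pair this check with authentication and encryption.

```python
# Hypothetical role-to-permission mapping; real systems load this from configuration.
ROLE_PERMISSIONS = {
    "admin": frozenset({"read", "write", "export", "manage_users"}),
    "analyst": frozenset({"read", "export"}),
    "viewer": frozenset({"read"}),
}

def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())

def export_report(user_role: str, report: str) -> str:
    if not is_allowed(user_role, "export"):
        raise PermissionError(f"role {user_role!r} may not export data")
    return report

print(is_allowed("analyst", "export"))  # True
print(is_allowed("viewer", "write"))    # False
```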

Customization and Ease of Use

A user-friendly interface and intuitive design are essential for data processing software. Users should be able to navigate the software quickly, and it should offer customization options to accommodate different user preferences and requirements. This can reduce the learning curve and increase user adoption.

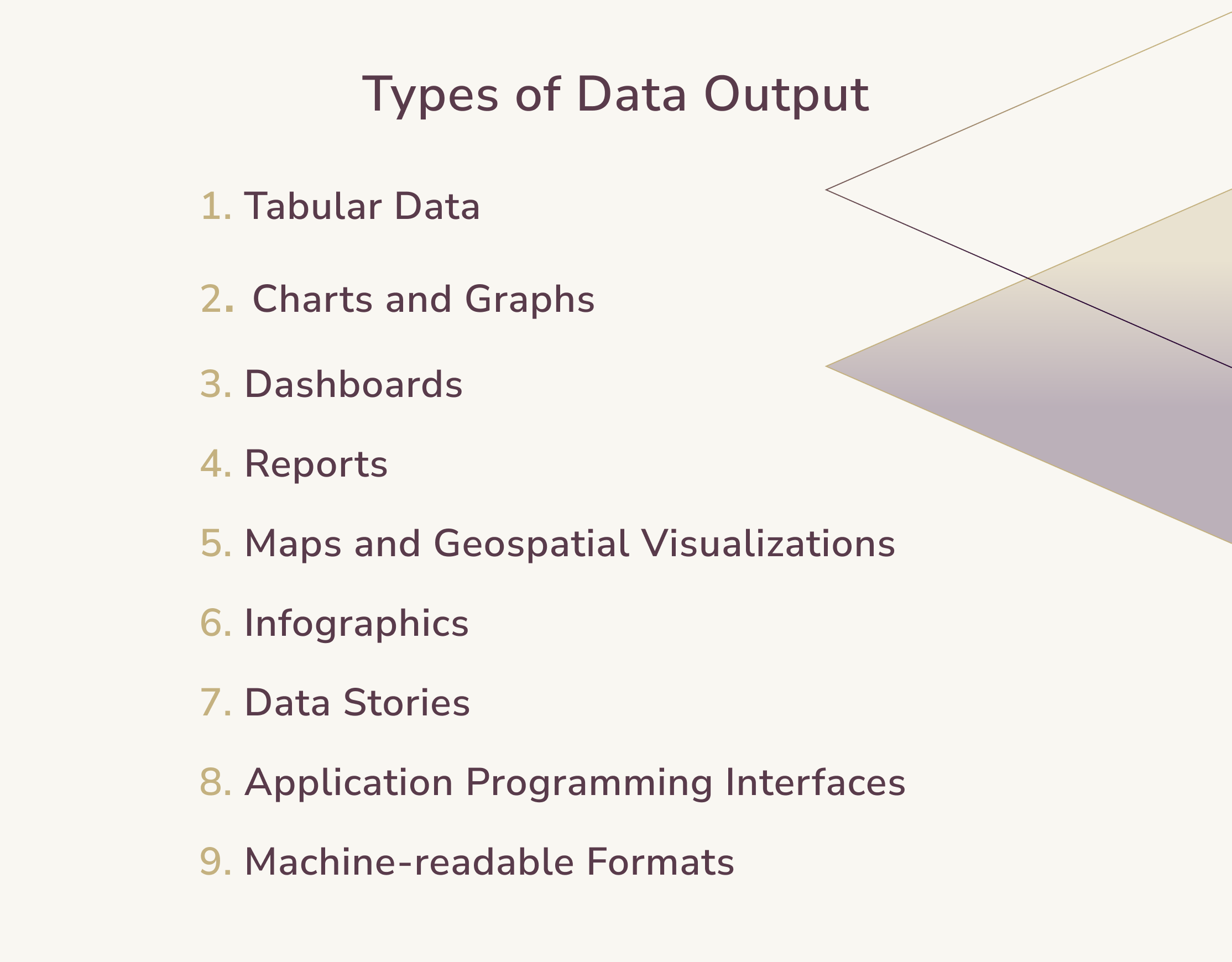

Types of Data Output

Data output refers to the various ways processed and analyzed data can be presented or shared. Different types of data outputs cater to diverse needs, ensuring the information is accessible, understandable, and actionable. Here are some common types of data outputs:

- Tabular Data. Tabular data is structured data presented in rows and columns, forming a table. It is a widespread way to represent data, especially large datasets, and can be exported as CSV, Excel, or TSV files.

- Charts and Graphs. Visual representations of data, such as bar charts, line charts, pie charts, scatter plots, and histograms, help users identify trends, patterns, and relationships in the data. These visualizations are particularly useful for conveying complex information quickly and clearly.

- Dashboards. Interactive dashboards provide a comprehensive view of key performance indicators (KPIs) and other relevant data points. They often include multiple visualizations and real-time data, allowing users to monitor and explore data more efficiently.

- Reports. Reports present data analysis in a structured, organized way, often combining textual explanations, charts, graphs, and tables. They can be generated in various formats, such as PDF, Word, or HTML, and customized to meet specific needs.

- Maps and Geospatial Visualizations. These are valuable tools for data with geographical or spatial components. They can include heat maps, choropleth maps, or point maps, helping users understand location-based trends and patterns.

- Infographics. Infographics are visual representations of data that combine graphics, images, and text to convey complex information in an easily digestible format. They are handy for presenting data to non-experts or for marketing and communication purposes.

- Data Stories. Data stories combine visualizations, narrative, and context to help users understand the significance of the data and its implications. Data stories can be presented in various formats, such as blog posts, articles, or presentations, and can be interactive or static.

- Application Programming Interfaces (APIs). APIs enable data exchange between different applications or software systems. Users can integrate the data into other applications by providing access to processed and analyzed data through APIs, allowing further analysis or visualization.

- Machine-readable Formats. In some cases, data outputs must be machine-readable, allowing other software or algorithms to further process and analyze the data. Common machine-readable formats include JSON, XML, and RDF.
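Several of these formats can be produced from the same processed records with Python's standard library alone: `csv` for CSV and TSV, `json` for JSON, and `xml.etree.ElementTree` for XML. A minimal sketch, assuming the records are a non-empty list of flat dictionaries that share the same keys; the function names and sample rows are hypothetical. Excel, PDF, and RDF output would need third-party libraries and are not shown.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

def to_delimited(rows: list[dict], delimiter: str = ",") -> str:
    """CSV by default; pass delimiter='\\t' for TSV."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]), delimiter=delimiter, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

def to_json(rows: list[dict]) -> str:
    return json.dumps(rows, indent=2)

def to_xml(rows: list[dict]) -> str:
    root = ET.Element("rows")
    for row in rows:
        item = ET.SubElement(root, "row")
        for key, value in row.items():
            ET.SubElement(item, key).text = str(value)  # keys must be valid XML tag names
    return ET.tostring(root, encoding="unicode")

rows = [{"month": "2024-03", "revenue": 240.0}, {"month": "2024-04", "revenue": 100.0}]
print(to_delimited(rows))
print(to_xml(rows))
```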

The right type of data output depends on the target audience, the purpose of the analysis, and the users' specific needs. Selecting the appropriate output type is essential to ensure the data is accessible, useful, and actionable.

Conclusion

Data processing software is invaluable for companies and startups leveraging data for strategic decision-making. By understanding the crucial features of data processing software and the various types of data output, CEOs, CTOs, Development Team Leads, and startup owners can make informed choices about the software solutions they implement.

Ultimately, selecting the right data processing software and output types can help organizations harness the full potential of their data, driving innovation, efficiency, and growth. As the world becomes increasingly data-driven, adopting the right data processing tools is essential for businesses to stay ahead in a competitive landscape.

Andriy Lekh
